Introduction

The increase in cell-only households over time has led to the widespread use of dual-frame surveys that combine landline and cellphone samples. In fact, cellphones now account for a majority of interviews conducted in many dual-frame telephone surveys (e.g., McGeeney, Kennedy, and Weisel 2015). Although this shift has facilitated contact with hard-to-reach populations, such as young adults, it has also increased opportunities for respondents to multitask. It has been argued that completing surveys on cellphones increases the likelihood that respondents are in non-private situations and are exposed to more distracting stimuli, such as in-store announcements and traffic (Kennedy and Everett 2011). Recent studies of online surveys support the idea that environmental distractions are greater when interviews are completed on smartphones rather than PCs: respondents more often report being away from home and being distracted when answering questions on smartphones than on PCs (Antoun, Couper, and Conrad 2017).

Previous research has documented the high frequency of multitasking during telephone surveys. In one of the first examinations of this question, Lavrakas, Tompson, and Benford (2010) found that 51% of cellphone respondents reported some form of multitasking. More recently, Heiden et al. (2017) reported similar results in a dual-frame telephone survey, in which 55% of respondents engaged in one or more secondary activities while responding to the survey.

Previous studies using online surveys have shown that multitasking tends to be more common among younger respondents (Ansolabehere and Schaffner 2015; Zwarun and Hall 2014). Examinations of both online and telephone surveys have documented that non-white respondents and parents are more likely to report multitasking (Ansolabehere and Schaffner 2015; Heiden et al. 2017). This study expands on these findings by examining several indicators at the respondent and interview levels.

Although, in principle, multitasking might impair task performance and reduce data quality, most studies have found that multitasking has no effect on the quality of answers (Ansolabehere and Schaffner 2015; Antoun, Couper, and Conrad 2017; Heiden et al. 2017). Nevertheless, it is premature to draw conclusions, given that the accumulated knowledge comes mainly from online surveys. The work reported here builds on previous research by examining a dual-frame telephone survey and including a greater variety of data quality indicators than previous studies of telephone surveys. It also includes interview characteristics that might be correlated with respondents’ behavior during the calls. Based on findings from previous studies, we hypothesize that a high percentage of respondents will report multitasking. However, we do not expect reported multitasking to necessarily predict poorer data quality.

Data and Methods

Sampling

Interviews were conducted using computer-assisted telephone interviewing (CATI). A dual-frame random digit dial (DF-RDD) sample design, including landline and cellphone numbers, was used in the study. The survey assessed health-related interventions across counties and included oversamples in control and intervention counties. Samples were provided by Marketing Systems Group (MSG). Respondents were eligible if they lived in the state and were 18 years of age or older at the time of the interview. For the landline samples, interviewers randomly selected an adult household member using a modified Kish procedure.

Data Collection

Data were collected between September 29, 2016, and April 23, 2017, as part of a statewide dual-frame survey of adults in a Midwestern state regarding their perceptions and experiences with healthcare. The interviews (N = 2,132) averaged 20 minutes in length (SD = 4.86) and were conducted in English (n = 2,102) and Spanish (n = 30) by trained interviewers at the Center for Social & Behavioral Research at the University of Northern Iowa. No incentives or compensation were offered for participation.

Utilizing the American Association for Public Opinion Research (AAPOR) calculations, the overall response rate (RR3, AAPOR Standard Definitions 2016) was 27.1%. The response rate for the RDD landline sample was 25.4%, and the response rate for the cellphone sample was 27.3%. The overall cooperation rate (COOP3, AAPOR Standard Definitions 2016) was 73.5%. The cooperation rate for interviews completed via cellphone (78.9%) was higher than for landline (52.7%). The response (RR3) and cooperation (COOP3) rates for the oversample of counties were 25.5% and 70.8%, respectively.
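For readers who want to reproduce rates like these from their own call dispositions, the two AAPOR formulas can be sketched as follows. The disposition codes follow the AAPOR Standard Definitions (I = complete, P = partial, R = refusal and break-off, NC = non-contact, O = other, UH/UO = unknown eligibility, e = estimated share of unknown-eligibility cases that are eligible). The counts in the usage example are hypothetical and are not this study's dispositions.

```python
def rr3(I, P, R, NC, O, UH, UO, e):
    """AAPOR Response Rate 3: completes over all eligible cases, with
    unknown-eligibility cases (UH, UO) discounted by the estimate e."""
    return I / ((I + P) + (R + NC + O) + e * (UH + UO))


def coop3(I, P, R):
    """AAPOR Cooperation Rate 3: completes over contacted eligible cases,
    excluding those unable to do the interview ('other')."""
    return I / ((I + P) + R)


# Hypothetical disposition counts, for illustration only.
print(rr3(I=270, P=10, R=100, NC=500, O=20, UH=200, UO=0, e=0.5))
print(coop3(I=270, P=10, R=100))
```

Because COOP3 drops non-contacts from the denominator, it is always at least as high as RR3 for the same sample, which is why the cooperation rates above are much higher than the response rates.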

Analysis and Measures

To test the hypothesis that a high percentage of respondents will report multitasking, we first examined descriptive statistics for respondent multitasking. Next, we used binary logistic regression to predict the probability of multitasking as a function of respondent and interview characteristics. To examine the relationship between multitasking and data quality, we used t-tests to compare data quality indicators between multitaskers and non-multitaskers.

Multitasking: Similar to Ansolabehere and Schaffner (2015), a self-reported measure of multitasking was included at the end of the survey. Specifically, respondents were asked whether they had engaged in any other activities while completing the survey (“During the time we’ve been on the phone, in what other activities, if any, were you engaged such as watching TV or watching kids?”). The question was field coded, and respondents could indicate as many activities as applied. After the responses were analyzed, respondents were classified as multitaskers or non-multitaskers. This process entailed recoding some answers (1.7%) in which respondents reported activities that, due to their minimal cognitive burden and high familiarity, were not considered secondary activities. These activities were: drinking or eating; looking out of the window or watching a building; petting a cat or a dog; sitting somewhere (car, church); smoking; and riding in a car.
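The classification rule described above can be expressed as a short sketch: a respondent counts as a multitasker if at least one field-coded activity falls outside the recoded, low-burden list. The activity labels below are hypothetical stand-ins for the study's field codes, which are not published here.

```python
# Hypothetical labels for the activities recoded as NOT secondary activities.
NON_SECONDARY = {
    "drinking or eating",
    "looking out of the window or watching a building",
    "petting a cat or a dog",
    "sitting somewhere",
    "smoking",
    "riding in a car",
}


def is_multitasker(activities: list[str]) -> bool:
    """True if at least one reported activity counts as a secondary activity."""
    return any(a.strip().lower() not in NON_SECONDARY for a in activities)


print(is_multitasker(["smoking"]))                   # recoded, so not a multitasker
print(is_multitasker(["smoking", "watching kids"]))  # one secondary activity suffices
```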

Respondent characteristics included age, sex, race/ethnicity, education, employment status, annual household income, urbanicity, health status, and whether the respondents had children living in the house.

Interview characteristics included device type (cellphone and landline) and time of the call (day and night).

Data quality: As defined in Table 1, we examined five different indicators of data quality: (1) non-substantive responses, (2) non-differentiation, (3) round values for numerical responses, (4) response order effects, and (5) interview length (in minutes). A greater tendency toward satisficing would produce higher means for indicators 1, 3, and 4, and lower means for indicators 2 and 5.
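Because Table 1 is not reproduced here, the following is only a sketch of how three of the indicators might be scored for one respondent under assumed operationalizations. The codes "DK" and "REF" are hypothetical. Non-differentiation is scored as the population standard deviation of a respondent's answers to one battery, so lower values mean less differentiation, which matches the direction stated above for indicator 2. Response order effects and interview length depend on questionnaire and timing details not given in the text and are left out.

```python
from statistics import pstdev

NON_SUBSTANTIVE = {"DK", "REF"}  # hypothetical codes for "don't know" / "refused"


def count_non_substantive(answers):
    """Indicator 1: number of non-substantive answers."""
    return sum(1 for a in answers if a in NON_SUBSTANTIVE)


def grid_differentiation(ratings):
    """Indicator 2 (assumed scoring): SD of answers to one battery; 0 = straightlining."""
    return pstdev(ratings)


def count_rounded(values):
    """Indicator 3: numeric answers ending in 0 or 5 (non-negative integers assumed)."""
    return sum(1 for v in values if v % 5 == 0)


print(count_non_substantive(["Yes", "DK", "REF", 3]))
print(grid_differentiation([3, 3, 3, 3]))
print(count_rounded([10, 12, 25, 7, 0]))
```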

Findings

Comparing Landline and Cellphone Respondents

Unweighted descriptive statistics for the cellphone and landline samples are presented in Table 2. As expected, landline and cellphone respondents differed in several sociodemographic characteristics, including age, sex, race, ethnicity, employment status, income, and whether they had children in the household. Slightly over half of the cellphone respondents (53.6%) were under age 55, compared with approximately one-quarter of the landline sample (24.6%). Fewer landline respondents were employed (39.5% vs. 64.9%) or had children in the household (16.4% vs. 30.9%), which is likely attributable to their older age distribution. In the cellphone sample, a higher proportion of respondents identified themselves as non-white (7% vs. 2.3%) and Hispanic (4.5% vs. 0.8%). The cellphone sample also comprised a higher proportion of males (51.4% vs. 43.4%) and people with higher incomes.

Prevalence of Respondent Multitasking

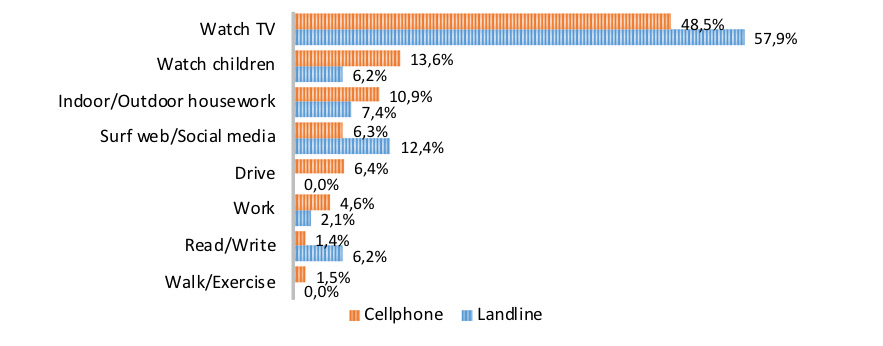

Overall, slightly over half of the respondents (53.3%) reported engaging in at least one secondary activity while answering the survey. A small percentage of respondents (8.8%) reported two or more activities, but most multitaskers indicated only one. Landline respondents reported multitasking as often as cellphone respondents (54.1% vs. 53.3%, χ2(1) = 0.057, p = .812). Secondary activities varied slightly by telephone type, as shown in Figure 1. The most common activities cited by cellphone multitaskers were watching television (48.5%), watching children (13.6%), and doing housework (10.9%). Landline multitaskers identified watching television, surfing the Internet, and doing housework as their most common activities (57.9%, 12.4%, and 7.4%, respectively). Some activities were unique to one telephone type: driving and walking/exercising were mentioned only by cellphone respondents.
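The landline versus cellphone comparison is a Pearson chi-square test on a 2×2 table (telephone type × multitasking status). The sketch below assumes no continuity correction, which the text does not specify, and uses the fact that a chi-square variable with 1 df is a squared standard normal, so its upper-tail probability is erfc(√(χ²/2)). The cell counts in the usage line are hypothetical.

```python
from math import erfc, sqrt


def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table
    [[a, b], [c, d]], returned with its df = 1 p-value."""
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = erfc(sqrt(stat / 2))  # upper tail of chi-square with 1 df
    return stat, p


# Hypothetical counts: rows = landline / cellphone, columns = multitasker / not.
print(chi2_2x2(20, 10, 10, 20))

# The reported statistic alone reproduces the reported p-value (about .81).
print(erfc(sqrt(0.057 / 2)))
```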

Predictors of Multitasking

The adjusted odds ratios (AOR) and corresponding 95% confidence intervals (CI) from the binary logistic regression are shown in Table 3. Controlling for demographic and interview characteristics, age, having children in the household, education level, and time of the call predicted multitasking. As shown in Table 3, older respondents were more likely to report multitasking than younger respondents (AOR = 1.11, CI = [1.03, 1.19]). Those with children living in the house were significantly more likely to multitask than respondents with no children in the household (AOR = 1.61, CI = [1.27, 2.04]). Compared to respondents with a high school education or less, those with some college (AOR = 0.77, CI = [0.60, 0.98]) or four or more years of college (AOR = 0.59, CI = [0.46, 0.76]) were less likely to report multitasking. Finally, respondents who completed the survey at night (after 5:00 PM) had significantly higher odds of reporting multitasking than those interviewed during the day (AOR = 1.44, CI = [1.19, 1.75]). We also explored whether the effect of the time of the call on self-reported multitasking was conditional on employment status or device type; however, including these interaction terms provided no support for this possibility (results available upon request).
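An AOR is the exponentiated logistic regression coefficient, and its interval is typically the exponentiated Wald interval β ± 1.96·SE. The text does not state which interval method was used, so the sketch below assumes Wald intervals. Under that assumption, the coefficient and standard error implied by a reported interval can be recovered, which is useful for checking that a reported AOR is consistent with its CI.

```python
from math import exp, log


def odds_ratio_ci(beta, se, z=1.96):
    """Odds ratio and Wald CI from a logit coefficient and its standard error."""
    return exp(beta), exp(beta - z * se), exp(beta + z * se)


def coef_from_ci(lower, upper, z=1.96):
    """Log-odds coefficient and SE implied by a Wald CI for an odds ratio."""
    beta = (log(lower) + log(upper)) / 2
    se = (log(upper) - log(lower)) / (2 * z)
    return beta, se


# Time-of-call effect: CI = [1.19, 1.75] implies an OR of about 1.44, as reported.
beta, se = coef_from_ci(1.19, 1.75)
print(odds_ratio_ci(beta, se))
```

Because the Wald interval is symmetric on the log scale, the implied odds ratio is simply the geometric mean of the two interval limits.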

Effects of Multitasking on Data Quality

The number of non-substantive responses was not affected by multitasking status (t[2,126] = 0.140, p = .889). On average, both multitaskers and non-multitaskers provided 0.55 non-substantive responses throughout the survey (see Table 4). This low number of “don’t know” and “refused” responses may be attributable to the low cognitive demand of the questions, as most were behavioral or attitudinal and none were knowledge questions. Similarly, we found no support for the hypothesis that respondents differentiate less when they are multitasking (t[2,126] = 0.503, p = .616).

Across the four questions analyzed, there were no differences in the number of rounded answers (ending in 0 or 5) between multitaskers and non-multitaskers (t[2,126] = 0.239, p = .811). In addition, no evidence was found that recency effects were more pronounced for respondents who engaged in secondary activities (t[2,126] = 0.389, p = .697). Finally, the average interview length was virtually the same for multitaskers (M = 19.90, SD = 4.88) and non-multitaskers (M = 19.92, SD = 4.85), and the mean difference was not significant (t[2,126] = 0.085, p = .932).
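The reported degrees of freedom (2,126 = n1 + n2 − 2) indicate a pooled-variance (Student's) two-sample t-test. The sketch below computes that statistic from group summary statistics. The group sizes used for the interview-length example (1,134 multitaskers and 994 non-multitaskers) are a hypothetical split chosen only to match the reported degrees of freedom, and the p-value uses a normal approximation rather than the exact t distribution, which is adequate at this sample size.

```python
from math import erfc, sqrt


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's two-sample t (equal variances) from means, SDs, and sizes."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df  # pooled variance
    t = (m1 - m2) / sqrt(sp2 * (1 / n1 + 1 / n2))
    p_approx = erfc(abs(t) / sqrt(2))  # two-sided, normal approximation
    return t, df, p_approx


# Interview length (minutes); group sizes are a hypothetical split of 2,128 cases.
print(pooled_t(19.90, 4.88, 1134, 19.92, 4.85, 994))
```

Because the published means are rounded to two decimals, the recomputed t will not match the reported value exactly, but it stays near zero.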

Discussion and Conclusions

Slightly over half of the respondents in this study reported multitasking. This is similar to findings in previous telephone surveys (Heiden et al. 2017; Lavrakas, Tompson, and Benford 2010) and illustrates how widespread this behavior is. However, as Ansolabehere and Schaffner (2015) have noted, self-reported measures may underestimate the prevalence of multitasking, because respondents might consider multitasking undesirable and be unwilling to admit it. Future studies may overcome this limitation by supplementing self-reports with other indicators (e.g., paradata, interviewers’ observations of respondents’ distraction).

Consistent with previous studies (Ansolabehere and Schaffner 2015; Kennedy 2010), watching television was the most common activity, especially for landline respondents. In our case, the percentage of electronic multitasking was lower than that found in online studies (e.g., Zwarun and Hall 2014), especially for cellphone respondents. This could be explained, in part, by the ease of engaging in Web-related activities (e.g., social media, e-mail) when answering online surveys. Our findings further suggest that activities are a function of the type of telephone on which the survey was completed. For example, some activities that take place outside the house (e.g., driving, walking) were reported only by cellphone respondents. Other activities, such as watching children and working, were twice as common among cellphone respondents as among landline respondents. This finding might be attributable to the profile of cellphone respondents, who more often reported having children in the household and being employed than landline respondents did. Regardless of the device, two of the most frequent activities mentioned by respondents were those provided as examples in the question: watching television and watching children. Given that previous studies have found that examples provided in questions are more likely to be listed (Tourangeau et al. 2014), future studies could investigate how examples affect self-reported multitasking.

Older respondents, parents with children in the household, less educated individuals, and those interviewed at night were more likely to report multitasking. Unlike previous studies in which respondents were more likely to report multitasking when completing online surveys on smartphones than on PCs (Antoun, Couper, and Conrad 2017), we found no difference in self-reported multitasking between devices (cell/landline OR = 0.968). The percentage of self-reported multitasking was almost identical for cellphone and landline respondents (53.3% and 54.1%, respectively). Our finding that older respondents were more likely to report multitasking contrasts with previous studies that documented the opposite effect of age (Ansolabehere and Schaffner 2015). The fact that most common activities in this study were nonelectronic (e.g., watching children, doing housework) may help explain this result, given that younger respondents tend to be overrepresented among those who engage in electronic multitasking (Zwarun and Hall 2014). Future research could expand on these results by examining which characteristics predict different forms of multitasking (e.g., electronic, non-electronic, environmental distractions).

Consistent with previous online surveys (Ansolabehere and Schaffner 2015; Antoun, Couper, and Conrad 2017), we found no evidence that respondents’ multitasking reduced data quality in our dual-frame telephone survey. None of the satisficing indicators differed significantly between multitaskers and non-multitaskers. Future studies could examine these results in greater depth by analyzing less-studied indicators, such as answers to factual questions or responses to open-ended questions. It will also be valuable to analyze the effect that different forms of multitasking have on data quality indicators (e.g., distinguishing between low and high cognitive burden activities).

Acknowledgment

This study was part of a survey project sponsored by the University of Iowa Public Policy Center. The authors would like to thank Suzanne Bentler and Peter Damiano, who directed the larger project and encouraged the methodological study presented here.

This research was presented at the Midwest Association for Public Opinion Research 2017 Conference. We are thankful for the feedback provided by attendees and would like to specifically thank René Bautista for his thoughtful comments. We extend thanks to Andrew Stephenson and Sharon Cory for their helpful comments and suggestions.

Contact information

Eva Aizpurua

TRiSS

6th Floor, Arts Building

Trinity College Dublin

Dublin 2

aizpurue@tcd.ie

+00353 87 700 4552

Ki H. Park

Center for Social and Behavioral Research

University of Northern Iowa

Cedar Falls, Iowa 50614-0402

ki.park@uni.edu

319-273-2105

Erin O. Heiden

Center for Social and Behavioral Research

University of Northern Iowa

Cedar Falls, Iowa 50614-0402

erin.heiden@uni.edu

319-273-2105

Jill Wittrock

Center for Social and Behavioral Research

University of Northern Iowa

Cedar Falls, Iowa 50614-0402

jill.wittrock@uni.edu

319-273-2105

Mary E. Losch

Center for Social and Behavioral Research

University of Northern Iowa

Cedar Falls, Iowa 50614-0402

mary.losch@uni.edu

319-273-2105