Introduction

Cognitive interviewing is a widely accepted method for evaluating survey questions. During cognitive interviews, respondents are administered a copy of the survey questionnaire and are then asked a series of probe questions to examine their question response process (Willis 2005). Commonly asked probe questions include ‘can you tell me why you answered that way’ and ‘what do you think this question is asking?’ This method is particularly useful for determining whether respondents interpret survey questions as intended and whether they are providing valid responses (Miller 2011).

Many academic, government, and private organizations have established laboratories devoted to cognitive interviewing and other pre-test methods (Beatty and Willis 2007). Laboratory settings are ideal in that they offer a private space with limited to no distractions and a quiet space to capture audio or video recordings of the interview. It is also recommended that these laboratories be staffed with highly skilled researchers who have backgrounds in survey methodology and qualitative methods (Willis 2005). Working in a centralized laboratory setting, researchers have the ability to observe each other’s interviews, discuss findings after each interview and modify interview protocols as themes begin to emerge. However, a major disadvantage to a laboratory setting is that it is difficult and sometimes impossible for the appropriate respondents to travel to the laboratory.

The National Agricultural Statistics Service (NASS) conducts numerous surveys of the U.S. farm and ranch population. Respondents are typically located in rural, geographically dispersed areas. Therefore, it is not feasible to have a centralized research laboratory to conduct cognitive interviews. Instead, researchers must travel to the respondents. Recruiting for and conducting cognitive interviews in rural areas is costly and time-consuming, and in the past this has limited the number, scope, and geographic diversity of cognitive interviewing projects NASS could conduct.

In recent years, the U.S. Office of Management and Budget has called for agencies to increase the amount and rigor of cognitive testing of federal surveys. In order to meet this objective, NASS needed to improve its cognitive interview program. NASS expanded its cognitive interview program by training production staff in state and regional offices to conduct cognitive interviews. Utilizing production staff located in rural areas allowed NASS to increase the number of cognitive interviewing studies completed and improve the diversity of respondents recruited for this research, while simultaneously keeping costs down.

This paper outlines the planning and development of the expanded program and how it has improved NASS’s ability to pre-test its surveys. The number and size of cognitive interviewing projects before and after the implementation of this program will be examined, as well as associated cost savings from implementing this program. The limitations of this program and recommendations for others interested in adopting a similar approach to cognitive interviewing are also discussed.

Cognitive Interviewing at NASS

NASS is organized with headquarters in Washington DC, 12 regional field offices, and statisticians in 46 states. The headquarters staff are survey methodologists who are responsible for survey design, pretesting, and overall survey administration. Headquarters staff typically have advanced degrees (masters and doctorates). Meanwhile, the production staff are agricultural statisticians and economists in the state and regional field offices who are responsible for managing survey data collection, editing, and summary of survey data, as well as outreach. Production staff often have at least a bachelor’s degree. The goal of redesigning the cognitive interview program was to train production staff to assist with cognitive interviews. The production staff would be responsible for recruiting respondents, conducting cognitive interviews, and providing interview notes to methodologists in headquarters. The headquarters methodologists would serve as project leads and would be responsible for developing protocols, coordinating interviews, conducting some interviews, analyzing data, providing recommendations, and writing final reports.

Selecting Staff to Train

The first step was to determine whom to train to conduct this work. An obvious choice is survey interviewers, who are familiar with NASS questionnaires and who have experience interacting with respondents. However, use of survey interviewers as cognitive interviewers is not recommended as these individuals are skilled at helping respondents understand question wording, repairing questions, and completing interviews in a timely fashion. During cognitive interviews, interviewers often have to let a respondent struggle through a question to understand what is problematic about it and probe extensively on their understanding of questions and response formation (Willis 2005). In addition, it requires a great deal of retraining to prepare survey interviewers to conduct a cognitive interview. Instead, Willis (2005) recommends that cognitive interviewers have social science backgrounds, training in survey methodology and questionnaire design, and have experience with qualitative interviewing.

NASS has experienced production staff, who are knowledgeable about farming and farms in their state, data collection, editing, and analysis, in 46 states. Many of these employees are required to reach out to prospective respondents and promote NASS surveys. Although these employees are highly skilled in data collection and statistics and have extensive knowledge of agriculture and NASS respondents, they often have limited knowledge of questionnaire design and evaluation and do not have formal training in qualitative methodology. Despite not possessing all of the desired skills of a cognitive interviewer, their specialized skills make them great candidates to be trained to conduct this type of work.

Designing Cognitive Interview Training

Next, a plan on how to train the production staff was developed. There is little guidance in the literature on training cognitive interviewers. Willis (2005) recommends training new cognitive interviewers on the cognitive aspects of survey methodology (CASM) approach and probing techniques, allowing new interviewers to observe cognitive interviews and participate in design meetings and providing them adequate time to practice this new skill. Cognitive interviewers need to be able to recognize potential problems and ask emergent probes during the cognitive interview (Beatty and Willis 2007). Having a basic understanding of common types of response error is critical to formulating emergent probes during the cognitive interviews.

Although NASS production staff were only going to be responsible for recruiting respondents and conducting cognitive interviews, it was important to train them on how to conduct a cognitive interview project from start to finish. This allowed them to fully understand the purpose of the interviews and how the data from the interviews are used to evaluate the survey questionnaire, and ultimately what was being requested of them (e.g., how to probe, how to prepare notes).

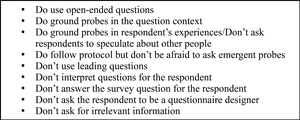

NASS has conducted the cognitive interview training three times over three years. Each training consisted of three 3-hour sessions staggered over a couple of weeks (see Figure 1 for an outline of the training). The trainings were conducted remotely via video teleconferencing during regular business hours, which meant the trainings could be conducted at no additional cost to NASS. In the first session, participants were provided a general overview of cognitive interviewing and observed mock cognitive interviews. They were then introduced to the question response process (Tourangeau, Rips, and Rasinski 2000), and additional challenges to the question response process introduced in establishment surveys (Willimack and Nichols 2010). The last part of the first session then focused on common types of response errors, and participants completed an in-class exercise diagnosing problems in questions that could lead to response error.

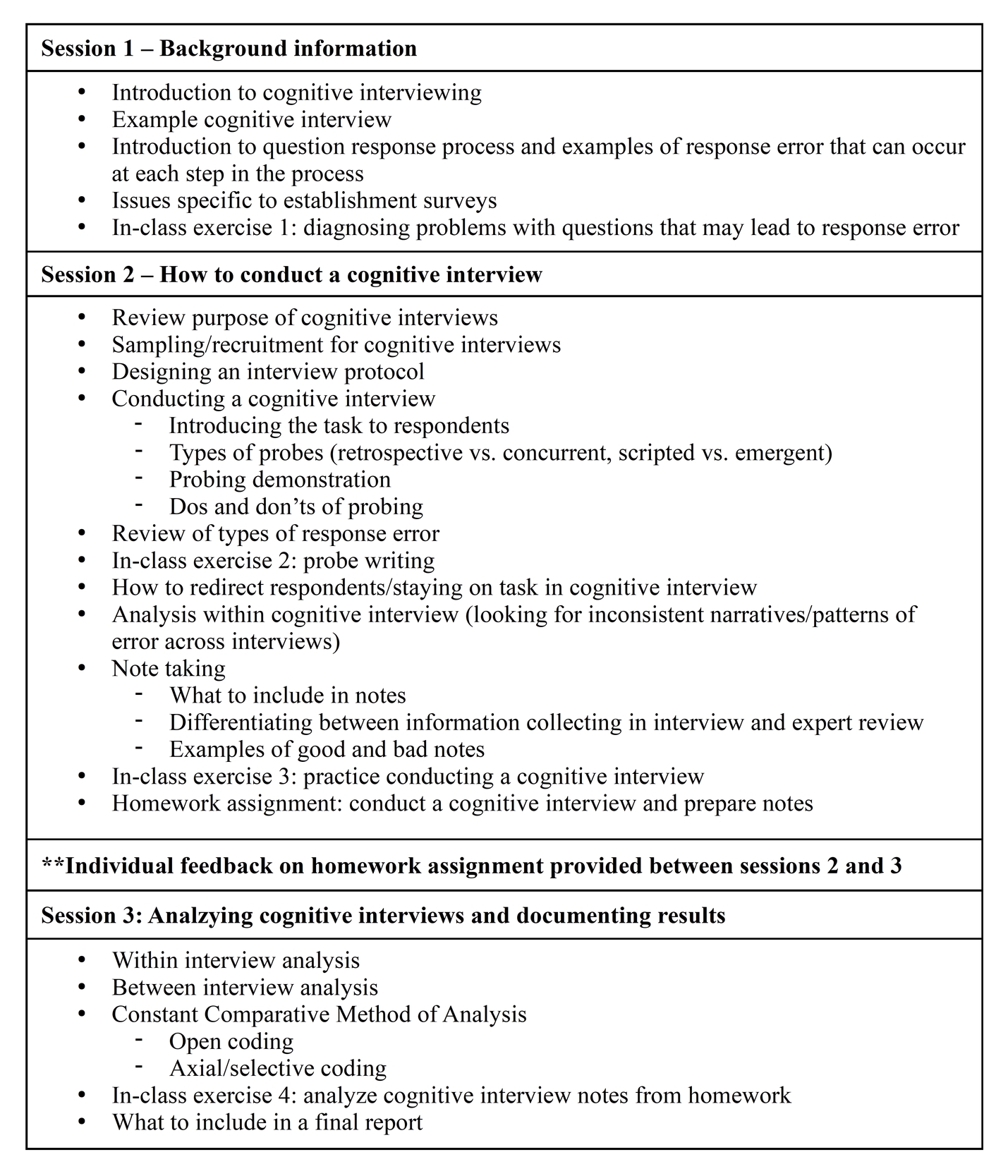

In the second session, participants were trained on how to conduct a cognitive interviewing project from start to finish. The first half of this session focused on how to conduct a cognitive interview. Participants learned how to recruit respondents and design an interview protocol. A large amount of time was spent learning how to probe effectively. Participants learned about different types of probe questions (i.e., concurrent vs. retrospective, scripted vs. emergent), and effective probes vs. ineffective probes (see Figure 2). They also practiced writing their own probe questions and conducting cognitive interviews. The second half of this session focused on note taking. Participants learned what types of information should and should not be included in notes. They also learned how to begin analysis within interviews and document initial findings in their notes.

Participants were then assigned homework to be completed before the next session. For homework, all participants had to conduct one cognitive interview and submit notes from that interview. This homework was used in session three to practice analyzing cognitive interview data.

Session three focused mostly on how to analyze cognitive interview data. Participants learned about within-interview and between-interview analyses. They were then taught the constant comparative method of analysis (Strauss and Corbin 1990). Homework from the second session was compiled, and participants used these compiled notes to practice completing each analysis step.

Results

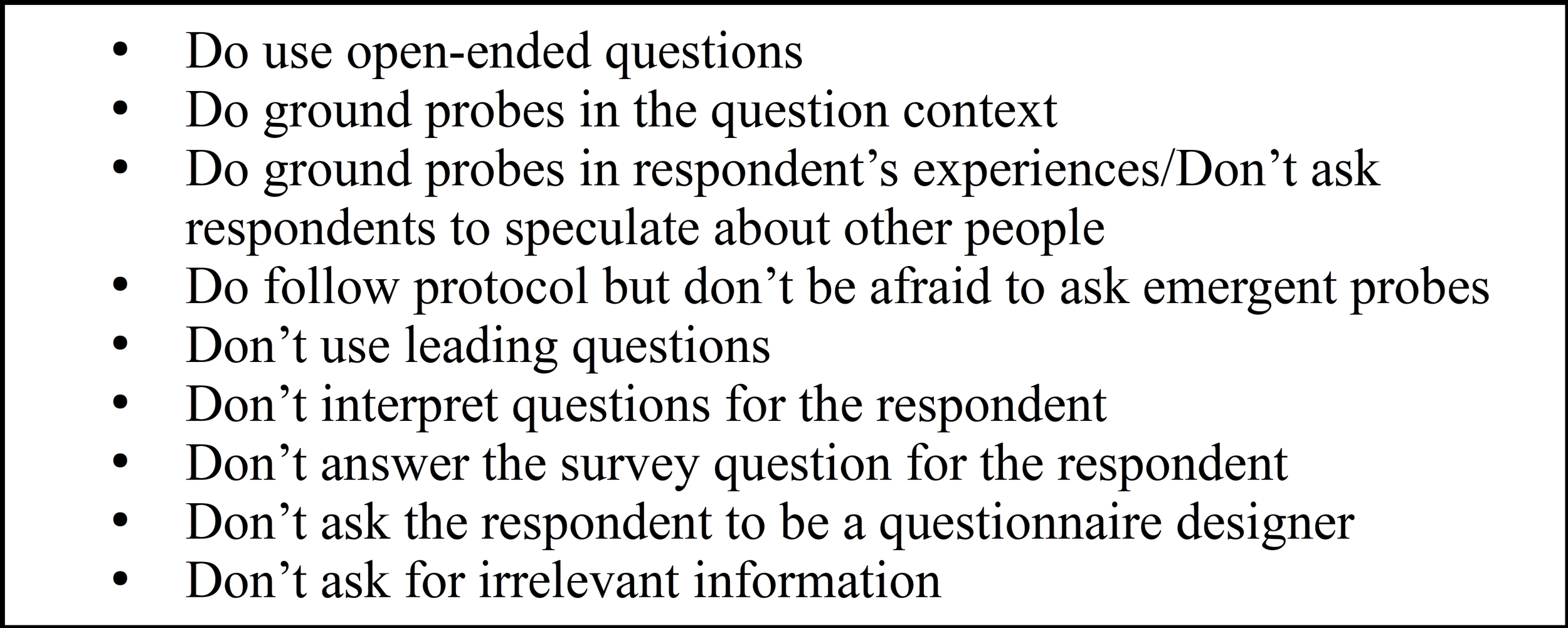

In total, 105 employees in 45 states were trained to conduct cognitive interviews. Since beginning the training program, the number of cognitive interview studies NASS conducts has increased. In the four years prior to the first training in 2014, NASS conducted 0–3 cognitive interviewing projects per year. After the initial training, the number of cognitive interviewing projects increased to an average of about 11 cognitive interview projects per year (see Figure 3). NASS also improved the rigor of these projects by conducting larger projects, which allowed testing the survey instruments using a wider scope of respondents (regional, farm type) and iterative rounds of testing. For example, in preparation for the 2017 Census of Agriculture (COA), five iterative cognitive interviewing projects consisting of 108 interviews across 30 states were conducted in a relatively short period of time (eight months). Increasing the number of interviews and geographic coverage allowed for the ability to evaluate each section of the 24-page COA form and test proposed changes to questions found to be problematic in earlier rounds of testing.

A project of this scope would not have been possible by solely relying on headquarters methodologists to complete this work. They could not have fully supported this number of cognitive interviews (regardless of size) in a single year. In addition, the extensive travel costs would have been prohibitively high. On average, for a NASS headquarters staff member to conduct a cognitive interview study with 10 respondents, it costs NASS $2,000 in travel costs (i.e., airfare, hotel, rental car, gas, and per diem). In contrast, production staff can interview respondents within 60 miles driving distance, and no airfare or lodging costs are needed. This reduced travel costs on average to $696 per project, which covered mileage. This resulted in a savings of travel costs of approximately $1,300 per project.
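The travel-cost comparison above can be checked with a short calculation. The Python sketch below uses only the two per-project averages reported in this section; the variable and function names are illustrative and are not part of any NASS system.

```python
# Average travel cost per 10-respondent project, as reported in the text.
HQ_TRAVEL_COST = 2000     # headquarters staff: airfare, hotel, rental car, gas, per diem
FIELD_TRAVEL_COST = 696   # production staff: mileage only


def travel_savings(hq_cost: float, field_cost: float) -> float:
    """Return the per-project travel savings from using field staff."""
    return hq_cost - field_cost


savings = travel_savings(HQ_TRAVEL_COST, FIELD_TRAVEL_COST)
percent_saved = 100 * savings / HQ_TRAVEL_COST

print(f"Savings per project: ${savings:,.0f}")                             # $1,304
print(f"Share of headquarters travel cost saved: {percent_saved:.0f}%")   # 65%
```

The exact difference is $1,304, which the text rounds to approximately $1,300, or roughly two-thirds of the headquarters travel cost for a comparable project.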

Although this training program was successful in terms of the expansion of cognitive interviewing studies, some areas still needed improvement. One of those areas was getting production staff to probe effectively. Most of these interviewers asked the probes provided in their interview guide. However, some did not ask all of them, particularly when respondents provided a negative response to a survey question. For example, if a respondent answered no to a screening question, some interviewers would not probe on that question, and subsequently, it was not possible to determine whether respondents answered incorrectly and thus skipped themselves out of the section. Other times, the interviewers were so committed to asking scripted probes that they would ask probe questions that did not apply to the respondent. It was also learned that interviewers were reluctant to ask emergent probes. In these situations, interviewers would uncover an unanticipated problem and would describe the issue in their notes. However, they would not probe deeper to determine the source of the problem. It can be argued that this is an issue for all new cognitive interviewers and is a skill that improves over time with practice.

Poor note taking was another challenge encountered. Despite the emphasis placed on good note taking in the training of production staff, headquarters staff still tended to provide richer and more detailed and objective information. Some interviewers in the field provided very scant notes that did not contain enough information for a good evaluation of questions. In these cases, interviewers needed to be re-contacted and asked to provide additional information on issues they uncovered in their interviews. Sometimes these interviewers were able to provide this information as it was in their handwritten notes from the interview or fresh in their memory. Other times, they were not able to recall this information. Currently, NASS is not permitted to record cognitive interviews, and interviewer notes serve as the sole record of what transpired during the interview. A future goal is to be able to audio record interviews to address this issue.

Based on these findings, interview guides were redesigned to make them more user friendly and to encourage interviewers to ask appropriate probes. In the redesigned interview guides, different probe questions are provided for each unique response respondents may provide to a survey question (e.g., different probe questions for yes and no responses). Also, additional space is provided for interviewers to write their emergent probes and responses to them (see the appendix for an example of a current interview guide). In our third training session, more time was spent discussing note taking and emergent probes (e.g., how to formulate them and when to ask them).
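One way to picture the redesigned guide is as a lookup from each possible survey response to the probes that apply to it. The Python sketch below is illustrative only: the `Probe` class, the `probes_for` function, and the response labels are hypothetical, and the probe wording is borrowed from Section 3, Question 1 of the example guide in the appendix.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Probe:
    text: str
    applies_to: frozenset  # survey responses for which this probe should be asked


# Hypothetical encoding of two probes for a yes/no screening question.
GROUND_WATER_PROBES = [
    Probe('What does "ground water from wells" mean to you?', frozenset({"yes", "no"})),
    Probe("Tell me about the ground water from wells you used.", frozenset({"yes"})),
]


def probes_for(response: str, probes: list) -> list:
    """Return the text of every probe that applies to the given response."""
    answer = response.strip().lower()
    return [p.text for p in probes if answer in p.applies_to]


for answer in ("yes", "no"):
    print(answer, "->", len(probes_for(answer, GROUND_WATER_PROBES)), "probe(s)")
```

Under this encoding, a respondent who answers "no" still receives the comprehension probe, which is the case in which some interviewers had previously skipped probing.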

Another limitation of the program is that cognitive interviewing is an additional unplanned duty for production staff — not part of their main job. Interviewers in certain locations or who proved to be adept in cognitive interviewing were requested more often than others. NASS had to be cognizant of not overburdening the more effective cognitive interviewers in the field. These interviewers are typically assigned 1–2 interviews per project and are expected to complete these interviews during a short timeframe. It is not possible to meet with all interviewers and debrief after each interview as you typically would when conducting qualitative research. As a result, interviewers in the field do not have the ability to learn from each other and often cannot apply what they learned in their first interview to their own subsequent interviews.

Finally, the amount of effort and time that production staff needed to recruit a respondent and set up an interview appointment was underestimated. Subsequently, the amount of time allotted to these interviewers to complete their interviews needed to be increased. Also, it was determined that these interviewers needed additional guidance on recruitment. Some interviewers had a tendency to return to the same respondents for each cognitive interviewing project. As a result, interviewers in the field needed to be monitored more closely to be able to control the number of cognitive interviews being completed and the respondents being recruited.

Conclusion

Expanding the cognitive interviewing program to production staff has greatly increased the number of cognitive interviewing projects that NASS can conduct and the rigor of this work. While quality varies among interviewers, the benefit of the increased number and variety of interviews more than makes up for it. NASS should continue to work with production staff to improve their interviewing skills on an individual basis and should plan to conduct continued training to ensure interviewers know how to probe effectively and can incorporate reflexivity into their work (Roulston 2010).

Other organizations that have field offices, even if only a limited number, would benefit from training field staff to conduct this type of work as well. Training any number of staff in different geographic locations could allow different populations to be represented, while attenuating costs. However, other organizations should first evaluate the skillset and availability of field staff to conduct these cognitive interview projects to maximize the benefit of this approach. For certain organizations, it may not be cost-effective or methodologically advantageous to train field staff to conduct cognitive interviews if these projects occur only once or twice a year. Regardless of whether cognitive interviewers are located in a field setting or in a centralized laboratory, they all will benefit from repeated opportunities to conduct these interviews.

Overall, training production staff to conduct cognitive interviews outside the laboratory is an effective strategy for establishment surveys where respondents are often difficult to reach. This can substantially increase the amount of testing and ultimately the data quality for establishment survey organizations.

Lead Author:

Heather Ridolfo

1400 Independence Ave., SW Room 6031

Mail Stop 2040

Washington, D.C. 20250

(202)-692-0293

Heather.Ridolfo@usda.gov

Appendix: Example Cognitive Interview Guide

Irrigation and Water Management Survey

Project Leads: Kathy Ott and Rachel Sloan

Version Number: FULL VERSION

Interviewer’s Name:

POID:

PID:

State:

Date of Interview:

Type of Farm:

Gender of Respondent:

Age of Respondent (18–29, 30–44, 45–59, 60+):

Other Information 1:

Other Information 2:

Other Information 3:

Before we begin, I want to tell you a little more about the project and what we will be doing today. Every five years, NASS conducts an irrigation survey to collect important information about water management practices. We want to make sure farm operators like yourself can understand the questions and fill it out in the new format. This survey is usually mailed out in January and covers the previous calendar year.

First, I’m going to ask you to fill out the form as if you have received it in the mail in January 2018 and were answering for calendar year 2017. I know the calendar year is not over yet, so you don’t have to come up with the exact figures, you can estimate or tell me about how you would have come up with an answer and the degree of difficulty involved in providing the information. Some sections have an “X” over them — in the interest of time today, you can skip those entire sections.

After you have completed the sections of the form that do not have an “X”, we will go back over the questions you answered. I’m going to ask you some follow-up questions on why you answered the way you did, how you interpreted certain questions and discuss any questions and/or terms that you found confusing or did not understand. This will help us make sure that everyone who receives this form understands the questions. All your answers and everything we discuss today will be kept completely confidential. If you have any difficulty answering a question, circle it and we will discuss it after you have completed the form. Do you have any questions before we start?

[Hand the respondent the questionnaire]

Section 1: Acreage in 2017

- Probe 1: (All) Tell me about the acreage you owned and/or rented in 2017.

- Probe 2: (All) Did you own any land you did not include in this section?

- Probe 3: (All) Did you rent any land TO anyone else in 2017? Where did you include that?

- Probe 4: (All) Did you rent any land FROM anyone else in 2017? Where did you include that?

- Probe 5: (All) Did you have any woodland or idle land? Did you include that in this section?

- Probe 6: (All) Did you have any greenhouses or other area under protection? Did you include that in this section? Did you round that acreage up to the nearest acre?

- Probe 7: (All) Were any of the questions in Section 1 (Acreage in 2017) difficult for you to answer? If so, which ones?

- Probe 7a: Why was this question difficult for you to answer? (If more than one question is reported above, include the question number in your notes here.)

- Probe 7b. Why did you answer the way you did? (If more than one question is reported above, include the question number in your notes here.)

- Interviewer observation: Use emergent probes to follow up on obvious response errors (e.g., questions left blank, Box A does not equal the acres owned + rented from − rented to, any missing land, etc.).

Section 2: Land in 2017

- Probe 1: (All): Tell me how the land you owned or rented was used in 2017.

- Probe 2: (Observe): Did they have woodland, idle land, greenhouses, CRP land, buildings, etc? Did they include that in the right section? Ask emergent probes.

- Probe 3: Did you read the instructions at the top of the page?

- Probe 3a. (read notes) Was anything in the instructions confusing?

- Probe 3b. (did not read notes) Why didn’t you read the instructions?

- Probe 4: (All) Were any of the questions 1 – 4 in Section 2 (Land in 2017) difficult for you to answer? If so, which ones?

- Probe 4a. Why was this question difficult for you to answer? (If more than one question is reported above, include the question number in your notes here.)

- Probe 4b. Why did you answer the way you did? (If more than one question is reported above, include the question number in your notes here.)

- Probe 5: (observe): Does Box B = Box A on page 1? If not, use emergent probes to follow up.

- Probe 6: (observe): Use emergent probes to follow up on obvious response errors (e.g., questions left blank, Box B does not equal the sum of numbers in column 1, Box C does not equal the sum of numbers in column 2, etc.).

Question 5: Was any area in the open or under protection irrigated on this operation in 2018?

- Probe 1: Tell me about the irrigation you used in 2018. How would you describe the types of irrigation you used? Did you have area in the open, under protection, or both?

- Probe 2: (interviewer observation): Any problems with the skip pattern?

Question 6: What state had the majority of irrigated acres on this operation in 2018?

- Probe 1: (observe): Any problems with this question? Ask emergent probes.

Section 3: Ground Water from Wells

Question 1: Did this operation irrigate with ground water from wells on this operation in 2017?

- Probe 1: (all, even if they said “no” to question 1!) What does “ground water from wells” mean to you?

- Probe 2: (answered “yes” to question 1) Tell me about the ground water from wells you used.

Questions 2 and 3: Report acres in the open irrigated with ground water and amount of water applied in 2017. Include irrigated horticultural crops grown in the open and irrigated pastureland.

- Probe 1: Can you tell me the difference between questions 2 and 3? (interviewer: do they mention “in the open” and “under protection”?)

- Probe 2: (observe) Did the respondent report all acreage or square feet only once in questions 2 and 3? If no, ask emergent probes.

- Probe 3: (observe) If the respondent seemed to have any issues, ask emergent probes.

Question 4, 4a, 4b, 4c: How many wells on this operation were used in 2017?

- Probe 1: In question 4a, what does “backflow prevention device (check valve)” mean to you?

- Probe 2: In question 4b, what does “flow meters or other flow measurement devices” mean to you?

- Probe 3: (observe) If the respondent seemed to have any issues, ask emergent probes.

Question 5, 5a: Report for the first three primary wells pumped in 2017.

- Probe 1: How did you determine which pump to list first, second, and/or third?

- Probe 2: (if respondent reported more than 3 pumps) How did you come up with your answers for “all other pumps” in question 5a?

- Probe 3: Were any of the column headings difficult to understand? If so, which ones? What was difficult to understand?

- Probe 4: (observe) If the respondent seemed to have any issues, ask emergent probes.

Question 6: For the wells used on this operation, what is the best description for the depth to water over the last five years?

- Probe 1: What does question 6 mean in your own words?

- Probe 2: What time period were you thinking about?

- Probe 3: (observe) If the respondent seemed to have any issues, ask emergent probes.

Section 4: On-Farm Surface Water

Question 1: Did this operation irrigate with on-farm surface water including recycled water and on-farm reclaimed water in 2018?

- Probe 1: (all, even if they said “no” to question 1!) What does “on-farm surface water” mean to you?

- Probe 2: (all, even if they said “no” to question 1!) What does “recycled water” mean to you?

- Probe 3: (all, even if they said “no” to question 1!) What does “on-farm reclaimed water” mean to you?

- Probe 4: (answered “yes” to question 1) Tell me about the on-farm surface water you used.

- Probe 5: (answered “yes” to question 1) Was the on-farm surface water you used “recycled water,” or “on-farm reclaimed water” or something else?

Questions 2 and 3: “Report acres in the open irrigated with on-farm surface water and amount of water applied in 2018.” and “Report the area under protection irrigated with on-farm surface water in 2018.”

- Probe 1: Can you tell me the difference between questions 2 and 3? (interviewer — do they mention “in the open” and “under protection”?)

- Probe 2: (reported acres irrigated “in the open”) For question 2, first column “Acres in the Open Irrigated by On-Farm Surface Water,” how did you come up with your answer?

- Probe 3: (reported acres irrigated “in the open”), for question 2, second column “Quantity of On-Farm Surface Water Applied,” and the third column “Unit of Measure,” how did you come up with your answer? Do you have records for this?

- Probe 4: (observe), did the respondent fill in “Unit of Measure”? Ask emergent probes as necessary.

- Probe 5: (reported area under protection) for question 3, “Square Feet Under Protection Irrigated by On-Farm Surface Water,” how did you come up with your answer?

Question 4, 4a Did this operation use on-farm recycled water to irrigate…

- Probe 1: (observe): Did the respondent report any of the same land in both columns in 4a? Ask emergent probes, as necessary.

- Probe 2: (observe): Did the respondent put their data in the correct place? Ask emergent probes, as necessary.

Question 5. Did this operation use reclaimed water from on-farm livestock facilities to irrigate…

- Probe 1: (observe): Did the respondent follow the skip properly? Ask emergent probes, as necessary.

- Probe 2: What does the term “reclaimed water from on-farm livestock facilities” mean to you?

- Probe 3: (observe): Did the respondent report any of the same land in both columns in 4a? Ask emergent probes, as necessary.

- Probe 4: (observe) If the respondent seemed to have any issues, ask emergent probes.

Section 5: Off-Farm Water

Question 1: Did this operation irrigate with off-farm water in 2018?

- Probe 1: (all) Describe what “off-farm water” means to you.

- Probe 2: (answered yes to question 1) Did you read the “include” statements?

- Probe 2a: (did not read include statements): Please look at the include statements now. Would you change your answer for question 1 now that you have read these?

- Probe 3: (answered yes to question 1) Tell me about the off-farm water you used.

Questions 2 and 3: “Report acres in the open irrigated with off-farm water and amount of water applied in 2018.” and “Report the area under protection irrigated with off-farm water in 2018.”

- Probe 1: Can you tell me the difference between questions 2 and 3? (interviewer – do they mention “in the open” and “under protection”?)

- Probe 2: (reported acres in the open) For question 2, first column “Acres in the Open Irrigated by Off-Farm Water,” how did you come up with your answer?

- Probe 3: (reported acres in the open) For question 2, second column “Quantity of Off-Farm Water Applied,” and the third column “Unit of Measure,” how did you come up with your answer? Do you have records for this?

- Probe 4: (observe), did the respondent fill in “Unit of Measure”? Ask emergent probes as necessary.

- Probe 5: (reported area under protection) for question 3, “Square Feet Under Protection Irrigated by Off-Farm Surface Water,” how did you come up with your answer?

Question 4. Did this operation pay for the off-farm water received on this operation?

- Probe 1: (answered yes to question 4) How did you come up with your answer for how much you paid? Did you use records?

Question 5. How much of this operation’s off-farm water was supplied, delivered, or transferred through a project financed, constructed, or managed by —

- Probe 1: (interviewer observation): Did the respondent check “some” or “all” for at least one of the rows? Ask emergent probes, as necessary.

- Probe 2: Tell me about the places you got the off-farm water from. Ask emergent probes, as necessary.

Question 6. Did this operation use reclaimed water from off-farm sources such as …..

- Probe 1: (all) In this question, what do you think the term “reclaimed water” means?

- Probe 2: (observe): Did the respondent follow the skip properly? Ask emergent probes, as necessary.

- Probe 3: (answered yes to question 6) What does the term “reclaimed water from off-farm sources” mean to you?

- Probe 4: (observe): Did the respondent report any of the same land in both columns in 6a? Ask emergent probes, as necessary.

- Probe 5: (answered “yes” to question 1) Tell me about the source of reclaimed water. Why did you select the answer you did for question 6c?

- Probe 6: (observe) If the respondent seemed to have any issues, ask emergent probes.

Section 6: Pumps, other than well pumps, Used for Irrigation

Question 1: Tailwater pits

- Probe 1: (all) What does “tailwater pit” mean to you?

- Probe 2: (reported tailwater pits) Tell me about the surface water source pumps you included here.

- Probe 3: (reported tailwater pits) How did you come up with these answers?

- Probe 4: (interviewer observation): Any other problems with Question 1? Ask emergent probes, as necessary.

Question 2: Ponds, lakes, reservoirs, rivers, canals, etc.

- Probe 1: (reported ponds, lakes, etc) Tell me about the surface water source pumps you included here.

- Probe 2: (reported ponds, lakes, etc) How did you come up with these answers?

- Probe 3: (interviewer observation): Any other problems with Question 2? Ask emergent probes, as necessary.

Question 3: Relifting or boosting water within system

- Probe 1: (all) What does “relifting or boosting water within system” mean to you?

- Probe 2: (reported relifting or boosting water within system) Tell me about the surface water source pumps you included here.

- Probe 3: (reported relifting or boosting water within system) How did you come up with these answers?

- Probe 4: (interviewer observation): Any other problems with Question 3? Ask emergent probes, as necessary.

Section 9: Acres Harvested in the Open

General Probes

- Probe 1: (All) What crops did you grow in 2017? Were there any crops that you grew that were not listed on pages 8 and 9?

- Probe 2: (All) Did you report irrigated land, nonirrigated land, or both in this section?

- Probe 3: (All) Did you have any issues with reporting average yield using the units provided?

- Probe 4: (Horticulture operations): Did you report horticultural crops grown in the open in this section? If so, where did you report them?

- Probe 5: (Horticulture operations): Did you report horticultural crops grown under protection in this section? If so, where did you report them?

- Probe 6: (observe) Did respondent report quantity of water applied per acre in either acre feet OR inches?

- Probe 7: (All) Did you have any issues with this section?

Section 10: Field water distribution and water source for crops in the open

Column 1: Irrigated Crops

- Probe 1: (All) Observe – did the respondent only report crops grown in the open?

- Probe 2: (All) Observe – did the respondent report the same crops from Section 9 in this section?

Column 2: Primary Method of Field Water Distribution

- Probe 1: (respondent used codes for column 2 “Primary method of field water distribution”) How did you decide which code to use for each crop?

- Probe 2: (respondent did NOT use codes for column 2) What do you think column 2 “Primary method of field water distribution” is asking for?

Columns 3/4/5: Water Source

- Probe 1: Do you use more than one water source to irrigate any of your acreage?

- Probe 1a: If so, how did you report those acres in columns 3/4/5?

- Probe 2: Observe – any problems? If so, ask emergent probes.

Columns 6/7: Acres on which Chemigation was Applied Through the Irrigation System

- Probe 1: (All) What does the term “chemigation” mean to you?

- Probe 2: Observe – any problems? If so, ask emergent probes.

Section 11: Irrigation for Horticultural Crops Grown in the Open

Question 1: Were any irrigated floriculture, nursery, sod, propagative materials, Christmas trees, or other horticultural crops grown in the open (including natural shade) on this operation in 2017?

- Probe 1: (all) What does “grown in the open” mean to you?

- Probe 2: (Observe) Did respondents without horticultural crops in the open correctly skip this section? If not, ask emergent probes.

Question 2: Report total acres and irrigated acres for horticultural crops grown in the open (including natural shade)

- Probe 1: (answered yes to question 1) How did you come up with these answers?

- Probe 2: (Observe) Is IC 0738 less than or equal to IC 0737? If not, ask emergent probes.

Question 3: For horticultural crops in the open, enter the total acres and irrigated acres by horticultural crop category on this operation in 2017.

- Probe 1: Were all of the horticultural crops you reported here grown in the open? Did you include any horticultural crops grown under protection here?

- Probe 2: Were you able to report all horticultural crops grown in the open in one of these categories? Were there any crops that you weren’t sure where to report?

- Probe 3: (Observe) Are the acres reported as irrigated less than or equal to the total acres? If not, ask emergent probes.

Question 4: For horticultural crops in the open, report area irrigated or watered in 2017 by method used.

- Probe 1: (Observe) Did the respondent fill out Column 1 AND EITHER 2a AND 2b OR 3a, 3b, AND 3c? If not, ask emergent probes.

- Probe 2: Were any of the acres you reported irrigated with more than one method? If yes, how did you report these acres in this table?

- Probe 3: (If Respondent answered column 2) (Observe) Did the respondent enter the quantity and unit of water correctly? If not, ask emergent probes.

- Probe 3a: How did you come up with these answers?

- Probe 4: (If respondent answered column 3) How did you come up with these answers?

- Probe 5: Do these answers only include acreage for horticultural crops grown in the open? Did you include any other acreage here?

Question 5: Irrigation of horticultural crops grown in the open by water source

- Probe 1: (Observe) Do all rows add up to 100%? If not, ask emergent probes.

- Probe 2: How did you come up with these percentages?

- Probe 3: Do these answers only include irrigation for horticultural crops grown in the open? Did you include any other irrigation here?

Section 12: Irrigation for Horticultural Crops Grown Under Protection

Question 1: Were any irrigated nursery, greenhouse, floriculture, mushrooms, propagative materials, or other horticultural crops grown under protection on this operation in 2017?

- Probe 1: (all) What does “grown under protection” mean to you?

- Probe 2: (observe) Did respondents without horticultural crops grown under protection correctly skip this section? If not, ask emergent probes.

Question 2: Report total area and irrigated area for horticultural crops grown under protection.

- Probe 1: (answered Yes to question 1) How did you come up with these answers?

- Probe 2: (Observe) Is the irrigated area less than or equal to the total area? If not, ask emergent probes.

Question 3: For horticultural crops under protection, enter the total acres and irrigated acres by horticultural crop category on this operation in 2017.

- Probe 1: Were all of the horticultural crops you reported here grown under protection? Did you include any horticultural crops grown in the open here?

- Probe 2: Were you able to report all horticultural crops grown under protection in one of these categories? Were there any crops that you weren’t sure where to report?

- Probe 3: (Observe) Are the acres reported as irrigated less than or equal to the total acres? If not, ask emergent probes.

Question 4: For horticultural crops under protection, report area irrigated or watered in 2017 by method used.

- Probe 1: (Observe) Did the respondent fill out Column 1 AND EITHER 2a AND 2b OR 3a, 3b, AND 3c? If not, ask emergent probes.

- Probe 2: Were any of the acres you reported irrigated with more than one method? If yes, how did you report these acres in this table?

- Probe 3: (If Respondent answered column 2) (Observe) Did the respondent enter the quantity and unit of water correctly? If not, ask emergent probes.

- Probe 3a: How did you come up with these answers?

- Probe 4: (If respondent answered column 3) How did you come up with these answers?

- Probe 5: Do these answers only include the area for horticultural crops grown under protection? Did you include anything else here?

Question 5: Irrigation of horticultural crops grown under protection by water source

- Probe 1: (Observe) Do all rows add up to 100%? If not, ask emergent probes.

- Probe 2: How did you come up with these percentages?

- Probe 3: Do these answers only include irrigation for horticultural crops grown under protection? Did you include any other irrigation here?

Section 15: Labor Used for Irrigation

Question 1: Did you have any paid labor for the irrigation portion of your operation in 2016?

- Probe 1: What does “paid labor for the irrigation portion of your operation” mean to you in this question?

- Probe 2: How did you come up with these answers?

Section 16: Irrigated Land in the Past Five Years

Question 1: Complete this section only if you did not irrigate any land in 2017.

- Probe 1: (Observe) Did the respondent follow instructions and skip this section?

Question 3: What were the reasons for not irrigating any land in 2017?

- Probe 1: Do you have any issues with any of the reasons on this list?

Section 18: Person Completing This Form ---- Please Print

- Probe 1: (interviewer observation) Did the respondent have any issues? Ask emergent probes as necessary.

- Probe 2: Are there any questions that we did not go over that you want to talk about?