Introduction

For survey practice, interrelated challenges of the contemporary survey climate, such as mode efficacy, communication preferences, and the availability of technology, affect response quality, nonresponse error, and perceptions of survey burden (Loosveldt and Joye 2016). For instance, access to and use of web-based survey modes have increased as (a) broadband, cellular, and wireless networks have expanded, (b) email has become a ubiquitous contact mode, and (c) low-cost, user-friendly survey software has become more popular (National Research Council 2013). The proliferation of email and web-based surveys has also fostered a tendency to make survey requests brief, with minimal explanation of the survey’s purpose, how the results will be used, or the implications for participants (Dillman 2016). In contrast, social exchange theory, as applied to survey practice, assumes people are more likely to respond when they are aware of a survey’s value and, because of that value, perceive less burden (Dillman, Smyth, and Christian 2014).

Given continued nonresponse concerns among survey practitioners, a basic question is what people’s general attitudes toward surveys are: do they see value in completing surveys, enjoy the survey experience, or feel overburdened by survey requests (Loosveldt and Storms 2008)? To address this question, de Leeuw et al. (2019) developed a survey attitude scale that assesses three dimensions: enjoyment, value, and burden. Initial validation of the instrument showed adequate psychometric properties and indicated that higher enjoyment and value, and lower burden, predicted higher response rates. To complement that initial validation, this study assesses the psychometric adequacy of the survey attitude scale against established reliability and validity criteria in a commonly sampled U.S. population.

Methods

Participants

In the United States, the outdoor recreation industry and its users, including hunters and anglers, are a common target population for surveys (Fish and Wildlife Service 2023). This study’s target population was Idaho resident hunters; the sample frame consisted of 2021 tag purchasers in the Idaho Department of Fish and Game’s (IDFG) license database who had provided an email address.

Materials

Survey attitudes were measured with the instrument developed by de Leeuw et al. (2019), which consists of nine items organized into three subscales: survey enjoyment (e1-e3), survey value (v1-v3), and survey burden (b1-b3) (Table 1). The question stem read, “Thinking about the surveys that you are asked to participate in, please indicate your level of agreement with the following statements,” and each item was rated on a 5-point bipolar response scale from strongly disagree (1) to strongly agree (5).
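The subscale composite scores reported in the Results (e.g., 3.3 for enjoyment) sit on the 1-5 response metric, which suggests each composite is the mean of its three items. A minimal Python sketch of that scoring might look like the following. It assumes unweighted item means, no reverse-coding (so a higher burden score means more perceived burden), and a composite set to missing when any of its items is missing; none of these choices is described in the text. The item keys follow Table 1’s labels, and the respondent is hypothetical.

```python
from statistics import mean

# Item labels as in Table 1, grouped into the three subscales.
SUBSCALES = {
    "enjoyment": ["e1", "e2", "e3"],
    "value": ["v1", "v2", "v3"],
    "burden": ["b1", "b2", "b3"],
}


def composite_scores(response):
    """Return the mean 1-5 rating per subscale for one respondent.

    Assumptions (not stated in the source): unweighted means, no reverse-coding,
    and a composite of None if any of its items is missing.
    """
    scores = {}
    for subscale, items in SUBSCALES.items():
        ratings = [response.get(item) for item in items]
        if any(r is None for r in ratings):
            scores[subscale] = None  # complete-case rule (assumption)
            continue
        if not all(1 <= r <= 5 for r in ratings):
            raise ValueError(f"{subscale}: ratings must be on the 1-5 scale")
        scores[subscale] = mean(ratings)
    return scores


# Hypothetical respondent who skipped item b3, for illustration only.
respondent = {"e1": 4, "e2": 3, "e3": 4, "v1": 4, "v2": 4, "v3": 3, "b1": 2, "b2": 3}
print(composite_scores(respondent))
```

Averaging rather than summing keeps each composite on the same 1-5 metric as the items, which is what makes the reported means directly comparable to the scale midpoint of 3.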

Procedure

In February 2021, survey invitations were emailed to a probability sample of 41,058 individuals from the sample frame. Survey design and contact followed social exchange tenets (Dillman, Smyth, and Christian 2014): invitations stated the survey’s purpose and how respondents’ data would be used, and the survey was sponsored by legitimate public organizations, the University of Idaho and IDFG. Invitations were sent via Granicus’ GovDelivery system, and respondents completed a web-based questionnaire hosted by Qualtrics. Reminders were sent at four-day intervals, and the survey closed after 30 days. Following this effort, a nonresponse check mailed a shortened hardcopy questionnaire and a prepaid return envelope to a probability sample of 3,000 individuals who had not responded to the initial effort (hereafter, the nonresponse sample).

Analysis

To assess psychometric adequacy, analytical procedures followed de Leeuw et al. (2019) and established methods of scale measurement, validity, and reliability (DeVellis 2016; Kyle et al. 2020; Netemeyer, Bearden, and Sharma 2003). Reliability was assessed via (a) McDonald’s coefficient omega (> 0.7), (b) Cronbach’s coefficient alpha (> 0.7), and (c) composite reliability (CR; > 0.7). Construct validity was assessed via a three-factor confirmatory factor analysis with a maximum likelihood estimator and established fit indices and criteria: CFI (> 0.9), TLI (> 0.9), and RMSEA (< 0.08). Convergent validity was assessed via (a) significance of factor loadings (p < .001), (b) strength of standardized factor loadings (> .707), (c) squared multiple correlations (SMC; > .5), and (d) average variance extracted (AVE; > .5). Discriminant validity was assessed via (a) AVE greater than squared latent variable correlations, (b) the square root of AVE greater than latent variable correlations, (c) confidence intervals of latent variable correlations not including 1.0, and (d) SMCs less than AVE. Criterion (predictive) validity was assessed via (a) correlations between subscale scores and survey response and (b) a logistic regression of survey response (dependent variable) on the survey attitude subscales (independent variables). Statistical analyses were conducted in Mplus 8.2 and JASP 0.19.
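As a point of reference for the alpha criterion, Cronbach’s alpha for a k-item subscale is α = k/(k − 1) × (1 − Σ item variances / variance of the summed score). The Python sketch below implements that textbook formula with the standard library only. It is an illustration, not the Mplus or JASP routine used in this study, and the six response profiles are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for one subscale.

    `items` is a list of columns, one per item, each holding every respondent's
    rating. alpha = k/(k-1) * (1 - sum(item variances) / variance(total score)).
    Population variances are used throughout; the n vs. n-1 factor cancels out.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("every item needs one rating per respondent")
    item_variance_sum = sum(pvariance(col) for col in items)
    totals = [sum(col[i] for col in items) for i in range(n)]
    return k / (k - 1) * (1 - item_variance_sum / pvariance(totals))


# Hypothetical 1-5 ratings from six respondents on a three-item subscale.
e1 = [4, 3, 5, 2, 4, 3]
e2 = [4, 2, 5, 2, 3, 3]
e3 = [5, 3, 4, 1, 4, 2]
alpha = cronbach_alpha([e1, e2, e3])
print(f"alpha = {alpha:.2f}; meets the > 0.7 criterion: {alpha > 0.7}")
```

Alpha assumes essentially tau-equivalent items (equal loadings), whereas McDonald’s omega allows loadings to differ, which is one reason the two coefficients are reported side by side.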

Results

Survey Response

The sample consisted of 6,235 usable responses, for an effective response rate of 20% and a margin of sampling error of ±2%. Respondents were 93% male and 93% white and averaged 52 years of age, which reflects the demographics of the target population. Mean composite scores for each subscale were 3.3 (enjoyment), 3.6 (value), and 2.8 (burden) (Table 1). The nonresponse sample yielded 1,011 usable responses, with mean composite scores of 3.1 (enjoyment), 3.5 (value), and 3.2 (burden) (Table 2). Further information on responses to each item can be found in Supplementary File Tables 1 and 2.

Reliability

Indicators of congeneric reliability (McDonald’s ω) were adequate for all subscales: .85 (enjoyment), .79 (value), and .74 (burden) (Table 1). Indicators of internal consistency (Cronbach’s α) were similarly adequate: .85 (enjoyment), .79 (value), and .73 (burden). Indicators of composite reliability (CR) were adequate for survey enjoyment (.75) and survey burden (.79) and marginal for survey value (.67).
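The composite reliability threshold can be made concrete with one common formula computed from a factor’s standardized loadings λ: CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)), which assumes uncorrelated residuals. The Python sketch below is a hypothetical illustration. The loadings are invented rather than taken from Table 1, and the sketch is not necessarily how Mplus or JASP computed the values reported here.

```python
def composite_reliability(loadings):
    """Composite reliability from one factor's standardized loadings.

    CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of residual variances),
    where each residual variance is 1 - loading^2 (standardized solution,
    uncorrelated residuals assumed).
    """
    if not all(0 < lam < 1 for lam in loadings):
        raise ValueError("expected standardized loadings between 0 and 1")
    squared_sum = sum(loadings) ** 2
    residual_sum = sum(1 - lam ** 2 for lam in loadings)
    return squared_sum / (squared_sum + residual_sum)


# Hypothetical loadings for two three-item subscales (not study estimates).
strong = composite_reliability([0.80, 0.75, 0.70])
weak = composite_reliability([0.75, 0.65, 0.45])
for label, cr in [("strong", strong), ("weak", weak)]:
    print(f"{label}: CR = {cr:.2f}, meets > 0.7: {cr > 0.7}")
```

With only three items per subscale, a single weak loading (0.45 in the second set) is enough to pull CR below 0.7. This illustrates why short subscales can sit near the threshold.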

Validity

The three-factor confirmatory factor model specified following de Leeuw et al. (2019) showed acceptable fit, supporting construct validity: χ² = 224.12, df = 24, CFI = .99, TLI = .98, RMSEA = .04, SRMR = .04. Indicators of convergent validity were variably adequate: (a) all factor loadings (λ) were significant; (b) most loadings exceeded the established threshold of 0.707, and all exceeded a more lenient threshold of 0.4; (c) squared multiple correlations (SMC) showed the same pattern, with most exceeding 0.5; and (d) average variance extracted (AVE) met or exceeded 0.5 for survey enjoyment (0.50) and survey burden (0.55), whereas survey value (0.41) fell below the established threshold (Table 1). Indicators of discriminant validity were also variably adequate: (a) comparisons of AVE with squared latent variable correlations (r²) and (b) comparisons of the square root of AVE (√AVE) with latent variable correlations (r) marginally supported the survey value and survey burden subscales, but these criteria were less convincing for the survey enjoyment subscale (Table 3). Discriminant validity was also marginally supported by (c) confidence intervals for the survey value and survey burden correlations not including 1.0 and (d) some item SMCs falling below the AVE within each subscale (Table 3).
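Several of the convergent and discriminant validity checks reduce to arithmetic on standardized loadings. An item’s SMC is λ², a factor’s AVE is the mean of its items’ λ², and the Fornell-Larcker comparison asks whether each factor’s AVE exceeds the squared correlation between two factors (equivalently, whether √AVE exceeds |r|). The Python sketch below uses hypothetical loadings and a hypothetical latent correlation, not the estimates in Tables 1 and 3.

```python
import math


def smc(loadings):
    """Squared multiple correlation of each item: its squared standardized loading."""
    return [lam ** 2 for lam in loadings]


def ave(loadings):
    """Average variance extracted: mean squared standardized loading of a factor."""
    return sum(smc(loadings)) / len(loadings)


def discriminant_checks(ave_a, ave_b, r_ab):
    """Two equivalent Fornell-Larcker style checks for one pair of factors."""
    return {
        "ave_exceeds_r2": min(ave_a, ave_b) > r_ab ** 2,
        "sqrt_ave_exceeds_r": math.sqrt(min(ave_a, ave_b)) > abs(r_ab),
    }


# Hypothetical standardized loadings and latent correlation (not study estimates).
loadings = {"enjoyment": [0.80, 0.75, 0.70], "value": [0.75, 0.65, 0.45]}
aves = {name: ave(lams) for name, lams in loadings.items()}
for name, lams in loadings.items():
    sm = [round(s, 2) for s in smc(lams)]
    print(f"{name}: SMC = {sm}, AVE = {aves[name]:.2f}, AVE > .5: {aves[name] > 0.5}")
print(discriminant_checks(aves["enjoyment"], aves["value"], r_ab=0.60))
```

Because SMC = λ² for each item, the loading threshold of 0.707 and the SMC threshold of 0.5 are the same criterion expressed on two scales. This is why the two indicators show analogous patterns.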

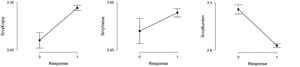

Criterion (predictive) validity was assessed with correlations and a logistic regression. Spearman’s rho and Pearson’s point-biserial correlations showed the expected direction of relationship between response and survey enjoyment (rs = .08, rpb = .10) and survey burden (rs = -.16, rpb = -.15); the correlation between response and survey value was near zero (rs = .01, rpb = .04) (Figure 1). In the pooled sample of respondents and nonrespondents, a logistic regression of survey response on the enjoyment, value, and burden composite scores was significant (χ² = 288.91, p < .001; βEnjoy = .45, βValue = -.15, βBurden = -.51) (Table 4). For a one-unit increase in survey enjoyment, the odds of responding are expected to increase by a factor of about 1.75 (OREnjoy = 1.75; 95% CI of the log-odds coefficient [.45, .67]). In contrast, a one-unit increase in survey value is associated with modestly lower odds of responding (ORValue = .82; 95% CI of the log-odds coefficient [-.33, -.08]), and a one-unit increase in survey burden decreases the odds of responding by about 45% (ORBurden = .55; 95% CI of the log-odds coefficient [-.67, -.50]). Further information on validity tests can be found in Supplementary File Tables 3-6 and Figure 1.
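The odds ratios above follow from exponentiating logistic regression coefficients: OR = exp(B), and a 95% CI for the OR is obtained by exponentiating the CI bounds for B. An odds ratio cannot be negative, so the bracketed intervals reported with each OR are presumably intervals for the unstandardized log-odds coefficient B, and ln(OR) does fall inside each of them. The Python sketch below takes the reported ORs and intervals under that assumption and derives B, the OR-scale interval, and the percentage change in odds. It is a back-of-the-envelope check, not a re-analysis.

```python
import math

# Reported odds ratios and bracketed 95% intervals, read here (assumption) as
# intervals for the unstandardized log-odds coefficient B.
REPORTED = {
    "enjoyment": (1.75, 0.45, 0.67),
    "value": (0.82, -0.33, -0.08),
    "burden": (0.55, -0.67, -0.50),
}


def summarize(odds_ratio, b_low, b_high):
    """Derive B, the OR-scale 95% CI, and the % change in odds per unit increase."""
    b = math.log(odds_ratio)
    return {
        "B": b,
        "B_in_interval": b_low <= b <= b_high,
        "OR_CI": (math.exp(b_low), math.exp(b_high)),
        "pct_change_in_odds": (odds_ratio - 1) * 100,
    }


results = {name: summarize(*vals) for name, vals in REPORTED.items()}
for name, res in results.items():
    lo, hi = res["OR_CI"]
    print(f"{name}: B = {res['B']:.2f}, OR 95% CI [{lo:.2f}, {hi:.2f}], "
          f"odds change {res['pct_change_in_odds']:+.0f}%")
```

Under this reading, the OR-scale intervals are roughly [1.57, 1.95] for enjoyment, [0.72, 0.92] for value, and [0.51, 0.61] for burden. None of them includes 1.0.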

Discussion

The main contribution of this study is an assessment of the psychometric adequacy of a general survey attitude scale to inform discussions of unit-level nonresponse, data quality, and, more broadly, the contemporary survey climate. Similar to de Leeuw et al. (2019), our results suggest acceptable reliability of the survey attitude instrument and its subscales (particularly in consideration of the number of items and threshold conventions). Construct validity was also adequately established, which likewise aligns with de Leeuw et al.'s (2019) finding that a three-factor model had acceptable fit indices (we similarly observed indications of e3 cross-loading onto value and v3 cross-loading onto burden). In our interpretation, these results stem from the content validity of the scale items and their basis in established theory and practical evidence. Of note, we did not test a model that would support combining the three subscales into a single summated score.

Convergent and discriminant validity were variably adequate and provide only limited support for the scale’s validity. Except for value, adequate convergent validity was observed for enjoyment and burden based on AVE and CR. However, the criteria for both forms of validity vary by indicator, scale size, and disciplinary conventions. Importantly, criterion validity supported the ability of the survey attitude subscales to predict response, particularly for enjoyment and burden. For survey practice, establishing this form of validity may be the most consequential and may compensate for less certain indicators of convergent and discriminant validity.

Next steps to further establish psychometric adequacy would be additional replications, particularly efforts that vary target populations among general and context-specific publics (e.g., voters, recreationists, customers), that use both probability and panel samples, and that emphasize cross-cultural elements. In terms of predictive validity, replicating de Leeuw et al.'s (2019) ‘willingness to be surveyed again’ question, which this study inadvertently omitted, would add another layer of criterion validity.

In conclusion, survey practitioners may find that incorporating a validated survey attitude scale into their work provides practical insights relevant to the contemporary survey climate, including issues of mode, burden, fatigue, and panel attrition. With more actionable insights in mind, continued validation of the survey attitude scale (particularly criterion validity) may likewise help survey practitioners better frame their participant recruitment and invitation strategies (similar to the premise of social exchange theory). Moreover, in the tradition of “surveys on surveys” research, a validated multidimensional survey attitude scale contributes an essential line of inquiry useful to academic and industry survey practitioners.

Acknowledgements

We thank all respondents and the Idaho Department of Fish and Game for facilitating data collection. We thank members of the USDA WERA-1010 committee, Don A. Dillman, Judith de Leeuw, and Joop Hox for their helpful insights. We also thank the editor and reviewer for their professionalism and valuable comments that improved the quality of the manuscript.

Lead author’s contact information

Kenneth E. Wallen, Ph.D., Department of Natural Resources and Society, University of Idaho, 875 Perimeter Dr., Moscow, ID 83844, USA

Email: kwallen@uidaho.edu