Introduction

Within the field of education, there is little research available about effective methods for recruiting and obtaining high response rates on educator surveys. In a review of 100 peer-reviewed articles on surveys of principals in K-12 schools, 87 percent of the articles reported the response rate, and only 24 percent of these articles discussed the limitations of nonresponse bias (Kano et al. 2008). Yet response rates can have important implications for the validity of the survey results and the generalizability to the population of interest.

There is some evidence that modifying aspects of the survey administration, such as the mode of delivery, may improve response rates. Tepper-Jacob (2011) randomly assigned elementary school teachers to web-based or paper-based surveys and found that response rates were higher for the paper-based survey (59 percent vs. 40 percent). Another study by Jacob and Jacob (2012) randomly assigned high school principals to four survey conditions that varied by prenotification, incentives, and survey modality. They found that response rates were lowest for principals who received an advance letter by email to participate in a web-based survey with no incentive (18 percent) and highest for principals who received an advance letter by email to participate in a web-based survey with a $10 incentive (58 percent). Additionally, Schilpzand et al. (2015) found that survey response rates for parents in school-based research can be improved by using an enhanced recruitment approach that includes sending prenotification postcards to parents and providing school staff with graphs of their school’s response rate.

Recruiting participants for a statewide education survey is a complex process involving a series of efforts at the district, school, and teacher respondent levels. CNA Education, in partnership with the Florida Department of Education (FLDOE), conducted a five-year, mixed methods program evaluation of the Florida College and Career Readiness Initiative (FCCRI), which involved participation among all public high schools statewide. Under the initiative, grade 11 students took the state’s college placement test, and those scoring below college-ready were required to enroll in grade 12 college readiness courses. Although FLDOE established common standards for these courses, district and school educators had considerable autonomy in making decisions about course curriculum and instruction.

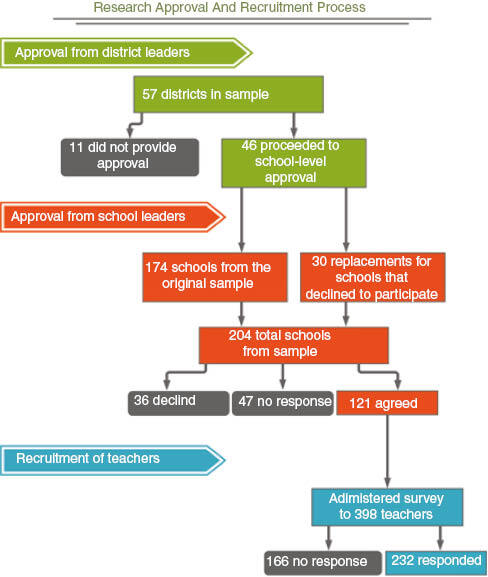

Data collection on program implementation included a web-based survey that aimed to capture teachers’ perceptions of the FCCRI’s implementation and their recommendations for how to improve it. This article discusses the complexities of the research approval and recruitment processes in our statewide education survey, as summarized in Figure 1. We begin by discussing the selection of a stratified random sample for the survey. Subsequent sections detail processes and challenges specific to recruitment of districts, schools, and teacher respondents. We conclude with lessons learned to inform the administration of future statewide education surveys.

Selecting a Stratified Random Sample

We developed a comprehensive survey that was administered in spring 2013 to a stratified sample of Florida high school teachers of college readiness courses. The characteristics used to define the size and performance strata were total high school enrollment in the district and the percentage of students scoring in the lowest two levels of the statewide assessment in grade 10. Within each stratum, we randomly selected a number of schools proportional to that stratum’s share of all schools statewide participating in the FCCRI. The resulting sample for recruitment consisted of 190 schools in 57 districts.
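The proportional allocation described above can be illustrated with a minimal Python sketch. It is not the sampling code used in the study: the function name `proportional_stratified_sample`, the stratum labels, and the school identifiers are hypothetical, and the sketch assumes each school carries a single stratum label.

```python
import random
from collections import defaultdict


def proportional_stratified_sample(schools, sample_size, seed=0):
    """Draw a stratified random sample with proportional allocation.

    schools: list of (school_id, stratum) pairs (hypothetical sampling frame).
    sample_size: target number of schools across all strata.
    Returns a dict mapping each stratum to its randomly selected school ids.
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible

    # Group the sampling frame by stratum.
    by_stratum = defaultdict(list)
    for school_id, stratum in schools:
        by_stratum[stratum].append(school_id)

    total = len(schools)
    sample = {}
    for stratum in sorted(by_stratum):
        members = by_stratum[stratum]
        # Allocate in proportion to the stratum's share of the frame.
        # Rounding means the overall total can differ slightly from sample_size.
        n = min(round(sample_size * len(members) / total), len(members))
        sample[stratum] = rng.sample(members, n)
    return sample


if __name__ == "__main__":
    # Hypothetical frame: two size levels crossed with two performance levels.
    frame = (
        [(f"LH-{i}", "large/high") for i in range(40)]
        + [(f"LL-{i}", "large/low") for i in range(30)]
        + [(f"SH-{i}", "small/high") for i in range(20)]
        + [(f"SL-{i}", "small/low") for i in range(10)]
    )
    for stratum, picked in proportional_stratified_sample(frame, 20).items():
        print(stratum, len(picked))
```

Because allocation is rounded within each stratum, a real design would need a rule for reconciling the stratum totals with the overall target; the sketch leaves that choice open.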

District Research Requests

Outreach to districts began in December 2012 and continued through May 2013. Among Florida school districts, 27 have specific, district-developed forms and requirements for submitting a research application.[1] Most of these applications were submitted by email, although some districts required a hard copy by mail. The remaining 29 districts simply request a description of the proposed research. Each application package CNA Education submitted included an endorsement letter from the state department of education and an approval letter from the project’s Institutional Review Board. We modified and supplemented these materials as needed to meet district requirements. To encourage districts to participate, the FLDOE director of K-12 schools sent a memorandum to all district superintendents, assistant superintendents for instruction, principals, and assistant principals expressing support for our research and urging participation in the survey.

Because there were 57 separate research request applications and multiple researchers working on recruitment, we created an online tracking system using Microsoft SharePoint to store information on the content submitted to the districts, the point of contact, the date of the application submission and any follow-up contacts, and the responses received. In addition, to ensure that the approval process continued to move forward, the research team established a formal procedure for following up with districts (Figure 2).

We waited three weeks to follow up with districts that had not responded to the research requests and established a regular schedule to continue reaching out to these districts until they approved or denied the research applications. These contacts were conducted by phone and email by research assistants. If there was no response after two attempts to inquire about the status of the application, we called an administrative assistant at the district office and asked if there was someone else who could help with the request.
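The tracking record and follow-up rule described above can be sketched as a small data structure. This is only an illustration, not the SharePoint system the team built: the class name, field names, and action labels are hypothetical. The three-week initial wait and the escalation after two unanswered status inquiries come from the text, while the one-week interval between later follow-ups is an assumption, since the text says only that a regular schedule was used.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional

FIRST_FOLLOW_UP_WAIT = timedelta(weeks=3)  # stated in the text
FOLLOW_UP_INTERVAL = timedelta(weeks=1)    # assumption: "regular schedule" is unspecified
ATTEMPTS_BEFORE_ESCALATION = 2             # stated in the text


@dataclass
class DistrictRequest:
    """One research request, mirroring the kinds of fields the team tracked."""
    district: str
    point_of_contact: str
    submitted_on: date
    follow_ups: List[date] = field(default_factory=list)
    response: Optional[str] = None  # e.g. "approved" or "denied"; None while pending

    def next_action(self, today: date) -> str:
        """Return the next recruitment step for this district as of `today`."""
        if self.response is not None:
            return "none"
        last_contact = self.follow_ups[-1] if self.follow_ups else self.submitted_on
        wait = FOLLOW_UP_INTERVAL if self.follow_ups else FIRST_FOLLOW_UP_WAIT
        if today - last_contact < wait:
            return "wait"
        if len(self.follow_ups) >= ATTEMPTS_BEFORE_ESCALATION:
            # Two status inquiries went unanswered: try someone else at the office.
            return "call administrative assistant"
        return "follow up by phone or email"
```

Keeping the rule in one place makes it easy for several researchers to apply the same schedule, which is the point of a shared tracking tool.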

If a district denied our research proposal, we contacted the district office to identify the reason the study had not been approved and determine whether a revised submission would be considered. For example, some districts would not allow the survey to be administered during the timeframe for standardized testing, so we agreed to delay the administration of the survey until testing was completed. Other districts would not allow us to provide gift cards to participants, so we modified the request to remove the incentives. All follow-up with districts that rejected the initial application was conducted by the principal investigator (PI) or co-PI.

One district with a formal research request process never responded to our application, so we did not recruit schools in that district. Seven districts without a formal process for submitting research requests also did not respond to our submissions. We followed up with these districts at regular intervals and then sent an email notifying the administrator of our intention to proceed with recruiting high schools to participate in the survey. Table 1 summarizes the reasons why districts rejected the proposals.

Using specific feedback from districts, we submitted revised research requests to seven districts, two of which subsequently accepted the applications. Because our total sample did not include all of the high schools in each district, we were able to select 14 replacement high schools. We did not select replacements for the 16 schools in districts that rejected our research proposal too late for us to recruit replacement schools. After the district-level approval process, we had a complete sample pool of 174 schools, and we began reaching out to individual schools to secure their approval for the research project (Table 2). As shown in Table 3, the fastest response came within the same business day, and the longest response time was 18 weeks (over four months). Most response times longer than two months occurred because the package had to be revised and resubmitted, which extended the time from the initial application.

School-Level Approvals

Outreach to schools began in February 2013 and continued through May 2013. Initially, we sent an email to principals describing the research project and asking them to supply contact information for up to five teachers of college readiness courses. If there was no response, a member of the research team called the front office and asked if there was an assistant principal or other staff member who could help. We were usually unable to get a decision when we called because key decision makers, such as principals, rarely answered their phones. If we were able to talk with someone else in the district office, that person often did not have the authority to approve the request on their own.

School staff had little incentive to respond to our request. Even though the request had been approved by the district, principals have autonomy to decide whether their school participates in any research studies, and many did not see a direct benefit to their school. After three months of ongoing recruitment, we initiated a multimodal outreach approach by sending a formal letter, visiting the school in person, or both (Table 4).[2] Whereas phone calls were often unanswered and sent to voicemail, it was more difficult for school administrators to ignore someone physically present at the school. However, at nearly half of the schools visited there was still no response because the principal or another administrator with decision-making authority was not available at the time of the visit. In these cases, we left printed materials and followed up with an email and a phone call.

In total, we contacted 204 schools to participate in our study: 174 that were part of the original sample following the district approval process and an additional 30 schools intended to replace schools from the original sample whose principals declined the research request. The number of replacement schools is lower than the number of schools that declined to participate because there was not enough time to recruit replacements for all of the nonparticipants. Of the 204 schools contacted, 157 ultimately replied to the request, and 121 of these (77 percent) agreed to participate in the survey research. Nearly all of the principals who declined to participate stated that teachers were too busy or that staff members were simply not interested in participating. If we were unable to speak with these principals directly, they often ignored our explanations that teacher participation was voluntary and that any respondents would be compensated for their time.
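The school-level counts above can be turned into rates with a short calculation. The sketch below uses only the counts reported in this section; the helper name `percent` and the variable names are hypothetical. It shows that the 77 percent agreement figure uses schools that replied as the denominator, and that the share is lower when all contacted schools are the denominator.

```python
def percent(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100 * part / whole, 1)


contacted = 204  # 174 original-sample schools plus 30 replacements
replied = 157    # schools that ultimately replied to the request
agreed = 121     # schools that agreed to participate

reply_rate = percent(replied, contacted)               # share of contacted schools that replied
agreement_among_replies = percent(agreed, replied)     # denominator: schools that replied
agreement_among_contacted = percent(agreed, contacted) # denominator: all contacted schools

print(reply_rate, agreement_among_replies, agreement_among_contacted)
```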

Survey Invitations and Incentives for Teachers

After obtaining school approval for the surveys, we asked school staff to provide contact information for up to five teachers of college readiness courses (most schools had fewer than five) and received a list of 398 teachers. We emailed each teacher a survey invitation that included a description of the study, a direct link to the survey, and a gift card incentive. The email invitation indicated that the survey should take 25 minutes or less to complete. It also explained that the survey was being conducted by an external research organization and that all responses would be anonymous. To encourage prompt participation, the gift card’s value decreased over time. Teachers who completed the survey within three days received a $25 gift card. The value decreased to $20 for completion within 4 to 7 days, $15 for 8 to 12 days, and $10 thereafter. Of those who received an invitation, 58 percent (n=232) completed at least some portion of the survey. Almost all of these teachers (n=225, 96 percent) responded to all of the multiple-choice questions, although not all of them answered the open-ended questions at the end of the survey.
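The declining incentive schedule described above can be written as a simple lookup. This is a minimal sketch rather than the study’s own tooling: the function name `gift_card_value` is hypothetical, and it assumes completion time is measured in whole days since the invitation was sent.

```python
def gift_card_value(days_to_complete):
    """Return the gift card amount in dollars for a completed survey.

    days_to_complete: whole days between the invitation and completion
    (assumed unit; the text does not say how partial days were counted).
    """
    if days_to_complete < 0:
        raise ValueError("days_to_complete cannot be negative")
    if days_to_complete <= 3:
        return 25  # completed within three days
    if days_to_complete <= 7:
        return 20  # days 4 to 7
    if days_to_complete <= 12:
        return 15  # days 8 to 12
    return 10      # any later completion


if __name__ == "__main__":
    for day in (1, 5, 10, 15):
        print(day, gift_card_value(day))
```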

The time-sensitive gift card may have affected the speed of responses, although it is impossible to know how many would have responded as quickly if the gift card had not been front-loaded. The majority (53 percent) of teachers who responded to the survey did so within three days, and an additional 20 percent completed the survey within a week. Throughout the process, teachers received email reminders. If they did not open the survey within 20 days, we coded them as nonrespondents.

Lessons Learned for Administration of Statewide K-12 Education Surveys

This survey research was funded by a grant from the U.S. Department of Education, but it has important implications for researchers conducting statewide education surveys funded by other agencies as well. In Florida, all surveys are required to go through separate approval processes at the district, school, and respondent levels. The survey that we administered was about new college readiness courses being implemented by the schools, and the research was supported by FLDOE. It may be even more difficult to get approval for surveys that focus on topics of less direct relevance to the schools or the state department of education. In addition, our survey questions were about standard educational practices, and the risks to participants were minimal. Surveys that include questions about sensitive topics or confidential information may also be more difficult to get approved.

The following are lessons learned from our survey of Florida high school teachers:

- The district-level and school-level approval processes are time-consuming and require multiple follow-up contacts for nonrespondents. Allow adequate time for the participant recruitment process prior to the planned start of the survey administration. For our survey, the greatest variation in the recruitment process occurred at the district level, where response times ranged from one day to more than four months.

- Review district websites for information about important timeline considerations. District calendars list school breaks and testing periods, which should be avoided when planning the timing of the survey administration. Additionally, some districts review research requests only on prescheduled dates, whereas others accept research requests on a rolling basis.

- Dedicate more than one researcher to the effort and develop a formal process for following up with districts and schools that do not respond. Our recruitment efforts included three research assistants who were each assigned to 19 districts over the six-month period. The level of effort for each district varied based on the complexity of the district approval process, the number of schools in the district, and the amount of follow-up required (which was especially time-consuming if visits to schools were conducted). One approach is to create an online tracking tool for monitoring recruitment progress.

- Call school districts before submitting a research request to determine whether the research plan is likely to be accepted or whether revisions are likely necessary. Revise the research request as needed to increase the likelihood of acceptance.

- Although the principal in most cases makes the ultimate decision about whether a school will participate in research, he or she is not always the best point of contact because principals are simply too busy. Talk to someone at the school’s main office early to determine who can get the principal to make a decision.

- School-level contacts are more likely to respond to multiple modes of communication about proposed research. Consider mailing recruitment materials to all schools and visiting as many schools in person as possible. However, researchers also should consider at what point it becomes more cost-effective to stop attempting to recruit a school that was part of the original sample plan and transition to a replacement.

Acknowledgements

The Institute of Education Sciences, U.S. Department of Education, supported this research through grant R305E120010 to CNA. The report represents the best opinion of CNA at the time of issue and does not represent the views of the Institute or the U.S. Department of Education. The study was conducted by CNA Education under an Institutional Review Board (IRB) approval from Western IRB (WIRB protocol # 20132134).

[1] For an example of the research review application process in Miami-Dade County Public Schools, see http://oer.dadeschools.net/ResearchReviewRequest/ResearchReviewRequest.asp

[2] One district did not approve the research request until two weeks before the end of the school year. Three schools within this district did not respond, and we did not have enough time to follow up by letters or visits.