Introduction

Cognitive interviewing is one of the most widely used pretesting methods for identifying problems in survey instruments. In cognitive interviewing, respondents are sometimes asked to use a think-aloud method to describe their thought processes as they complete a survey instrument. Interviewers often also ask probing questions to gauge respondents’ understanding of the meaning or intent of specific questionnaire items (Blair and Brick 2010; Hughes 2004). Therefore, respondents’ articulation ability, that is, their verbal ability to express thoughts and opinions clearly, can affect their performance in cognitive interviews (Park, Sha, and Olmsted 2016).

In U.S.-based research, non-English cognitive interviews are often reported to be more challenging than those conducted in English. This is because communicative norms differ across cultures and respondents may lack understanding of or familiarity with the cognitive interview task (Goerman et al. 2007; Pan et al. 2010; Park, Sha, and Olmsted 2016). In particular, respondents who speak a variety of languages have been found to give responses to cognitive interview probes that are of limited use in evaluating survey questions. For example, Pan (2008) found that Chinese speakers often answered “it doesn’t matter” when probes asked for their feedback during cognitive interviews.

In this study, we explored the effects of different types of pre-interview practice on helping non-English speakers become more familiar with the think-aloud, probing, and answering processes of the cognitive interview.

Literature Review

One possible strategy to improve the quality of cognitive interview responses across cultural and language groups is to train respondents by giving them feedback as they practice responding to typical probes. In the field of education, timely and explicit feedback from teachers has been found to improve student performance on tasks. Dekker-Groen, Van der Schaaf, and Stokking (2015) analyzed dialogue between teachers and students in a vocational education setting. Their study showed that when teachers gave prompt feedback to stimulate students’ reflection skills, students spent more time reflecting.

Other studies have also shown that interactive, two-way communication as a feedback mechanism enhances students’ learning. Huang et al. (2015) investigated the effects of problem-solving prompts[1] and feedback on knowledge acquisition among secondary students. They found not only that the problem-solving question prompts improved knowledge acquisition, but also that the improvement was more pronounced when the prompts were combined with explicit feedback on whether students’ answers were correct. Similarly, Chin (2006) analyzed discourse between teachers and secondary students and found that teacher feedback that encourages students to respond and to think about conceptual content is particularly effective in improving learning.

Because people may vary in their ability to verbalize their thoughts, researchers emphasize the importance of training survey respondents before an interview or providing neutral feedback on their answers (Cannell, Miller, and Oksenberg 1981; Ericsson and Simon 1993; Olson, Duffy, and Mack 1984; Willis 1999). However, although cognitive interviewing has been used in questionnaire evaluation for the past three decades, little research has examined what type of training helps respondents perform the cognitive interview task effectively.

Methods

In a series of Asian-language cognitive interviews (30 each in Chinese, Korean, and Vietnamese) conducted by the U.S. Census Bureau, we included a short practice session similar to the type researchers often recommend before a main cognitive interview (Goerman 2006; Park and Goerman, n.d.; Sha, Park, and Pan 2012). We used two types of practice: (1) lecture-based “traditional” practice, in which the interviewer explains the interview procedures and the interview task with an example; and (2) action-based “enhanced” practice, which includes the same two components plus a final segment in which the respondent actually completes a short practice task very similar to those he or she will encounter in the main interview. Both types of practice included a follow-up question-and-answer period when necessary. While the traditional practice was mostly one-way communication, the enhanced practice involved two-way interaction between the cognitive interviewer and the respondent. In the enhanced session, the interviewer gave immediate feedback on the respondent’s performance during the practice. Figure 1 shows the scripts used in the traditional and enhanced practice sessions.

Ten interviewers were trained to administer these two types of practice sessions as part of general cognitive interviewer training. These interviewers were blinded to the purpose of the research to prevent their expectations from affecting the outcome.

This research component was added to existing cognitive interview projects that aimed to identify errors in newly translated survey items. As such, this research could not be implemented under ideal experimental conditions, such as random assignment or control of respondent characteristics. In an informal attempt to control for educational level, we asked interviewers to alternate between the two research conditions (traditional vs. enhanced) when they interviewed respondents of the same education level. However, when we compared respondent characteristics in the two groups after data collection ended, we found that education level was not evenly distributed across the two conditions.
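
The alternation rule described above can be sketched as follows. This is a minimal illustration, not the procedure interviewers actually followed: it assumes each interviewer keeps a separate running toggle for each education level and that the first respondent in each group receives the traditional practice. The function name, labels, and toy schedule are hypothetical.

```python
from collections import defaultdict

# Condition labels used in the study; their order here (traditional first) is an assumption.
CONDITIONS = ("traditional", "enhanced")


def assign_by_alternation(interviews):
    """Assign a practice condition to each interview by alternating within
    (interviewer, education level) groups.

    `interviews` is a list of (interviewer_id, education_level) tuples in the
    order the interviews were conducted.
    """
    next_index = defaultdict(int)  # (interviewer, education) -> 0 or 1
    assignments = []
    for interviewer, education in interviews:
        key = (interviewer, education)
        assignments.append(CONDITIONS[next_index[key]])
        next_index[key] = 1 - next_index[key]  # flip for the next respondent in this group
    return assignments


if __name__ == "__main__":
    # Toy schedule, not study data: one interviewer, three college-educated
    # respondents and two high-school-educated respondents.
    schedule = [("A", "college"), ("A", "high school"), ("A", "college"),
                ("A", "college"), ("A", "high school")]
    print(assign_by_alternation(schedule))
    # ['traditional', 'traditional', 'enhanced', 'traditional', 'enhanced']
```

Alternation balances the two conditions within a group only to within one case: an odd number of interviews at a given education level leaves one extra case in one condition. Unlike random assignment, it also does not address other characteristics such as age or time since immigration.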

Of the 90 cognitive interviews, 42 were conducted under the traditional condition and 48 under the enhanced condition. Respondents assigned to the enhanced condition tended to be more highly educated, younger, and more recent immigrants (Table 1). Despite these limitations, this study provides exploratory insight into the effects of using an enhanced practice or training session as part of the non-English cognitive interview process.

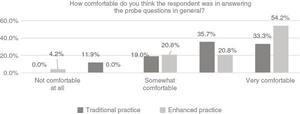

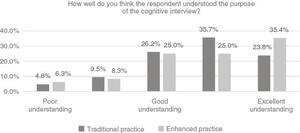

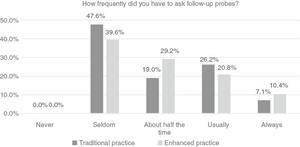

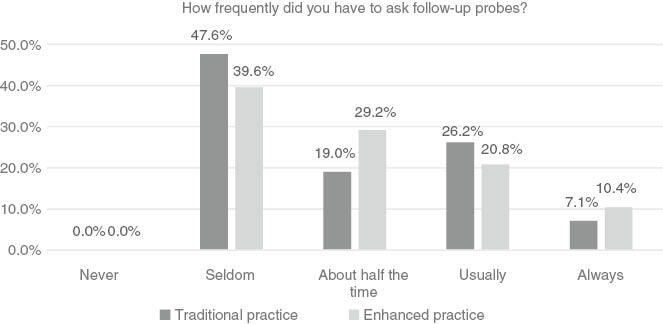

We measured the effects of the different types of pre-interview practice through an interviewer debriefing: at the end of each interview, the interviewer who administered the session rated the respondent’s behavior during the cognitive interview on a 5-point Likert-type scale. The first question was “How comfortable do you think the respondent was in answering the probing questions in general?” Interviewers chose their answers on a 5-point scale from “not comfortable at all” to “very comfortable” (see Figure 2). The second question was “How well do you think the respondent understood the purpose of the cognitive testing?” Interviewers again chose their answers on a 5-point scale, from “poor understanding” to “excellent understanding” (see Figure 3). The third question was “How frequently did you have to ask the respondent to provide more detail about his or her answers?” Here, interviewers chose their answers on a 5-point scale from “never” to “always” (see Figure 4). Finally, interviewers rated how well respondents answered the probing questions as intended at three stages of the interview: the beginning, the middle, and the end. Our specific research questions were:

- Would respondents in the enhanced condition experience higher levels of comfort than those in the traditional condition?

- Would respondents in the enhanced condition experience greater understanding of the interview purpose than those in the traditional condition?

- Would respondents in the traditional condition require more follow-up probing than those in the enhanced condition?

- Would respondents in the enhanced condition perform better than those in the traditional condition on the whole?

To answer these research questions, we analyzed the interviewer debriefing data for all three Asian languages together. We pooled the data rather than analyzing each language separately because the sample size for each language is small and the trends were similar across languages.

Findings and Discussion

To answer the first research question, we examined the distribution of interviewer answers to the question on how comfortable the respondent was in answering the probing questions in general. Interviewers rated respondents in the enhanced practice condition as “very comfortable” more often (54.2%) than those in the traditional practice condition (33.3%).

For the second research question, we examined the distribution of answers to the question on how well the respondent understood the purpose of the cognitive interview. Interviewers rated respondents in the enhanced condition as having “excellent understanding” more often (35.4% of the time) than those in the traditional practice condition (23.8% of the time).

For the third research question, we examined the distribution of answers to the question on how frequently the interviewer had to ask for more detail, that is, ask follow-up probes. Interviewers more often reported probing “always” or “about half the time” with respondents in the enhanced condition than with those in the traditional practice condition (10.4% vs. 7.1% for “always” and 29.2% vs. 19.0% for “about half the time”). This runs counter to our expectation that respondents in the traditional condition would require more follow-up probing than those in the enhanced condition; we discuss some possible reasons for the finding shown in Figure 4 below.
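
The percentages reported for the three debriefing questions can be checked against the group sizes given in the Methods (48 enhanced and 42 traditional interviews). The sketch below is illustrative only: the counts are not reported in the text and are back-calculated here, as an assumption, as the whole numbers of respondents that reproduce each published percentage.

```python
N_ENHANCED = 48     # interviews in the enhanced condition
N_TRADITIONAL = 42  # interviews in the traditional condition


def percent(count, n):
    """Share of n, as a percentage rounded to one decimal place."""
    return round(100 * count / n, 1)


# Back-calculated counts (assumed, not reported): (enhanced, traditional)
implied_counts = {
    "very comfortable": (26, 14),
    "excellent understanding": (17, 10),
    "follow-up probes: always": (5, 3),
    "follow-up probes: about half the time": (14, 8),
}

for category, (enh, trad) in implied_counts.items():
    print(f"{category}: {percent(enh, N_ENHANCED)}% vs. {percent(trad, N_TRADITIONAL)}%")
```

With groups this small, one respondent moves a percentage by about 2.1 points in the enhanced group (100/48) and about 2.4 points in the traditional group (100/42). For example, the 10.4% vs. 7.1% contrast for “always” corresponds to roughly 5 vs. 3 respondents.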

To facilitate comparison of the two conditions, we calculated the mean rating for each of the three variables above in each condition. As shown in Figure 5, interviewers rated respondents in the enhanced condition as more comfortable answering the probing questions (4.2 vs. 3.9 in the traditional condition, on a 5-point scale) and as better understanding the interview purpose (3.8 vs. 3.6).
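
The condition means above are averages of ratings on a 5-point scale. The following is a minimal sketch of that calculation from a frequency distribution. It assumes the scale is coded 1 (for example, “not comfortable at all”) through 5 (“very comfortable”), a coding the text implies but does not state. The counts in the example are toy numbers, not the study’s data.

```python
def mean_rating(counts):
    """Mean of 1-5 ratings given a {score: number_of_respondents} mapping."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no ratings")
    return sum(score * n for score, n in counts.items()) / total


# Toy distribution for illustration only (not study data):
# 1 respondent rated 3, 3 rated 4, 1 rated 5.
toy = {3: 1, 4: 3, 5: 1}
print(mean_rating(toy))  # 4.0
```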

In contrast, interviewers reported having to ask slightly more follow-up probing questions of respondents in the enhanced condition (3.0 vs. 2.9 in the traditional condition). We had hypothesized that interviewers would need to ask fewer follow-up questions if respondents better understood the cognitive interview process, so this finding ran counter to our expectations. However, asking more follow-up probes could indicate that respondents were actually providing helpful information for identifying survey problems and that a dialogue was taking place. Increased dialogue could be a sign of greater comfort and rapport between interviewer and respondent, and such discussion could help uncover issues with the survey questions. Still, it would be unwise to draw this conclusion from such a small difference, so the effect of enhanced practice on the level of dialogue needs further investigation.

To answer the last research question, we compared the interviewers’ ratings of respondents’ performance at three points in the interview. The aim was to see whether we could identify effects of the different types of pre-interview practice, as well as of the experience respondents may gain as the interview continued.

As we hypothesized, respondents assigned to the enhanced practice session were rated as performing better than those assigned to the traditional practice session throughout the interview (Figure 6). At the beginning of the interview, interviewers rated the performance of respondents in the enhanced condition at 3.77, compared with 3.57 for those in the traditional condition. In the middle of the interview, the ratings were 3.94 and 3.71, respectively, and toward the end, 3.96 and 3.64. Although this finding aligns well with our expectation, it is hard to attribute the better overall performance of respondents in the enhanced condition to the pre-interview practice session per se, because respondent characteristics were not controlled across the two conditions. The demographic differences between the conditions may instead have been a key factor in performance: because those in the enhanced condition were more educated and younger, their better performance could be related to those characteristics (Park, Sha, and Olmsted 2016).
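
The gap between conditions at each stage can be computed directly from the mean ratings reported above. The sketch uses only those published means; the variable names are illustrative.

```python
# Mean interviewer ratings of respondent performance (5-point scale), as reported above.
STAGES = ("beginning", "middle", "end")
enhanced = {"beginning": 3.77, "middle": 3.94, "end": 3.96}
traditional = {"beginning": 3.57, "middle": 3.71, "end": 3.64}

# Difference (enhanced minus traditional) at each stage, rounded to two decimals
# to remove floating-point noise from the printout.
gaps = {stage: round(enhanced[stage] - traditional[stage], 2) for stage in STAGES}
print(gaps)  # {'beginning': 0.2, 'middle': 0.23, 'end': 0.32}
```

The gap widens from 0.20 to 0.32 points. However, these are differences in means from a non-randomized comparison, so the caveat above about respondent characteristics still applies.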

Anecdotally, cognitive interviewers sometimes say that respondents perform better later in the interview as they become more accustomed to the task. However, it can be hard to keep respondents engaged or talking toward the end of an interview, particularly a long one, because respondents can lose interest over time (Deutskens et al. 2004; Galesic and Bosnjak 2009).

With this notion in mind, the changing patterns of respondents’ performance over the course of the interview are noteworthy (see Figure 6). In the traditional condition, the average ratings rose from the beginning to the middle of the interview and then declined toward the end, which closely matches these anecdotal observations. In contrast, respondents in the enhanced practice condition performed better in the middle of the interview, as expected, and slightly better still toward the end. These respondents had already performed well from the beginning and improved further by the middle. It is therefore notable that their ratings did not decline toward the end, given that data quality often declines as respondents become fatigued (Deutskens et al. 2004; Galesic and Bosnjak 2009). This observation that respondents in the enhanced condition remained engaged is interesting and calls for future research. Perhaps a better understanding of the purpose of the interview helped them stay engaged.
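
The two trajectories can be summarized as stage-to-stage changes in the reported means. In the traditional condition, ratings rise from beginning to middle and then fall. In the enhanced condition, they rise and then stay roughly level. A short sketch using only the published means:

```python
# Mean performance ratings at the beginning, middle, and end of the interview.
ratings = {
    "traditional": (3.57, 3.71, 3.64),
    "enhanced": (3.77, 3.94, 3.96),
}


def stage_changes(means):
    """Change in mean rating from each stage to the next, rounded to two decimals."""
    return [round(later - earlier, 2) for earlier, later in zip(means, means[1:])]


for condition, means in ratings.items():
    print(condition, stage_changes(means))
# traditional [0.14, -0.07]
# enhanced [0.17, 0.02]
```

The late-interview gain in the enhanced condition (+0.02) is very small. What stands out is the absence of the decline seen in the traditional condition (-0.07), not a marked further improvement.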

Conclusions

This paper is an exploratory first attempt to study the effects of pre-interview practice in Asian-language cognitive interviews. We implemented two conditions. In the traditional practice condition, the interviewer explained the interview procedures and tasks. In the enhanced practice condition, the respondent also completed a short task similar to those in the main interview and received feedback on his or her performance. Our results suggest that, compared with traditional lecture-based practice, enhanced practice may have helped respondents understand the purpose of the interview better and feel more comfortable with the interview process. It may also have helped maintain their interest throughout the interview.

Despite these interesting findings, our research has several limitations. First, it was quasi-experimental and was not implemented under strict experimental conditions. Second, our analysis was based on a convenience sample of voluntary research participants. Third, the performance measures relied on the interviewers’ subjective ratings, and each interviewer rated his or her own respondents. We recommend that future research replicate this study while controlling for respondent characteristics, to increase the generalizability of the findings.

Disclaimer

This paper is released to inform interested parties of research and to encourage discussion of work in progress. Any views expressed on statistical, methodological, technical, or operational issues are those of the authors and not necessarily those of the U.S. Census Bureau.

[1] Examples of problem-solving prompts are (a) What is the main purpose of this question? (b) In the problem, which of the following do we already know? (c) Based on your answers to the two previous questions, which formula is applicable to the problem?