Introduction

The University of Michigan’s Surveys of Consumers (SCA) tested the effect of a set of design variations on response rates in address-based sampling (ABS) mail and Web-Mail surveys in monthly experiments conducted between March 2011 and April 2012. In this paper, we report findings from a selected set of these experiments that focus on the effect of prepaid cash incentives in ABS Web-Mail surveys of the general population. The findings reported here cover response rates, the proportion of completed interviews done by web (web completion rates), and the demographic and socioeconomic characteristics of respondents in each experimental group.

Methods

Sample

The SCA is a monthly telephone survey that follows a rotating panel design (Curtin 1982). Each monthly sample is composed of 300 fresh and 200 recontact cases. The SCA targets household heads aged 18 years and older in the contiguous United States. In addition to the telephone interviews with a random digit dialing (RDD) sample, a set of monthly mail survey experiments contacted postal addresses drawn from the U.S. Postal Service Computerized Delivery Sequence File. Further information on the SCA is available at https://data.sca.isr.umich.edu/.

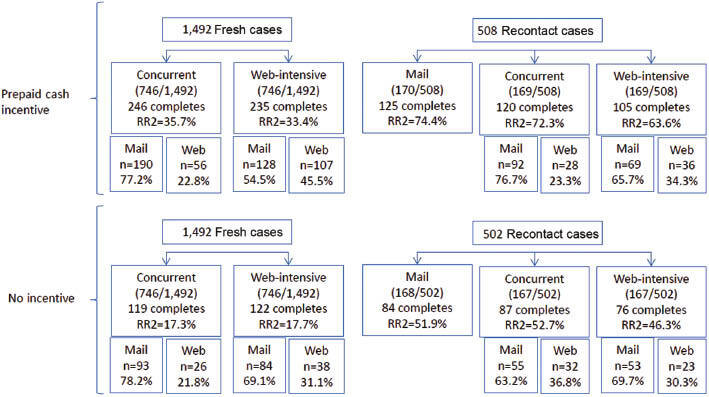

In September and October 2011, monthly fresh samples of 1,500 postal addresses were randomly assigned to the concurrent or the web-intensive contact strategy. Under both Web-Mail contact strategies, sample members could complete either a web or a paper questionnaire. In addition to the fresh samples, a total of 507 respondents who had completed the interview six months earlier were randomly assigned to be recontacted under one of three strategies: mail only, Web-Mail concurrent, or Web-Mail web-intensive. Assuming that month-to-month differences are unrelated to the outcomes of interest and to the contact strategies, the prepaid incentive condition was assigned to the September samples and the no incentive condition to the October samples (see Figure 1).
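The paper does not describe how the random assignment was implemented. The following is a minimal sketch, assuming equal-size allocation by shuffling the case list and dealing cases round-robin to strategies; simple random assignment with unequal group sizes would fit the description equally well. The helper `assign_strategies`, the integer case IDs, and the seeds are hypothetical, and for illustration the 507 recontact cases are treated as one pool. The incentive condition is not randomized here because it was set by month.

```python
import random
from collections import Counter


def assign_strategies(case_ids, strategies, seed):
    """Hypothetical helper: shuffle cases, then deal them round-robin to strategies.

    Round-robin dealing keeps group sizes within one case of each other.
    """
    rng = random.Random(seed)
    shuffled = list(case_ids)
    rng.shuffle(shuffled)
    return {case: strategies[i % len(strategies)] for i, case in enumerate(shuffled)}


# One month's fresh ABS sample: 1,500 addresses, two Web-Mail strategies.
fresh = assign_strategies(range(1500), ["concurrent", "web-intensive"], seed=2011)

# Recontact respondents (507 in total), pooled here for illustration: three strategies.
recontact = assign_strategies(range(507), ["mail only", "concurrent", "web-intensive"], seed=2012)

print(Counter(fresh.values()))
print(Counter(recontact.values()))
```

Dealing a shuffled list round-robin guarantees balanced groups while keeping each case's assignment random; a seeded `random.Random` instance makes the assignment reproducible for auditing.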

In both the mail only and the Web-Mail designs, the mailing protocol consisted of five mailings sent seven days apart: (1) advance letter, (2) paper questionnaire package, (3) nonresponse follow-up: postcard reminder, (4) nonresponse follow-up: replacement paper questionnaire package, and (5) nonresponse follow-up: second postcard reminder. In the Web-Mail designs, the invitation package included a letter with a Uniform Resource Locator (URL) and a unique ID that sample members could use to complete the web survey if they chose to do so. In the concurrent design, the web survey invitation was sent together with the paper questionnaire package; in the web-intensive design, the web response option was offered in the advance letter, which also mentioned a forthcoming paper questionnaire. For the prepaid cash incentive group, a 5 USD cash incentive was enclosed in the first mailing that contained a web survey invitation, a paper questionnaire, or both.
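The timing rules above can be summarized in a small sketch. It assumes day 0 is the advance letter and that consecutive mailings are exactly seven days apart. Because the protocol does not state whether later mailings repeated the URL, the sketch flags only the mailing that *first* offers the web option. All names (`mailing_schedule`, `FIRST_WEB_OFFER`, and so on) are hypothetical.

```python
MAILINGS = [
    "advance letter",
    "paper questionnaire package",
    "nonresponse follow-up: postcard reminder",
    "nonresponse follow-up: replacement paper questionnaire package",
    "nonresponse follow-up: second postcard reminder",
]
DAYS_APART = 7     # consecutive mailings were sent seven days apart
PAPER_PACKAGE = 1  # index of the first paper questionnaire package

# Index of the mailing that first offers the web option (None: no web option).
FIRST_WEB_OFFER = {"mail only": None, "concurrent": 1, "web-intensive": 0}


def mailing_schedule(design, incentive):
    """Return (day, mailing, first_web_offer, encloses_cash) rows for one design.

    The 5 USD cash goes in the first mailing that carries a web invitation
    and/or a paper questionnaire; with no incentive, no mailing carries cash.
    """
    web = FIRST_WEB_OFFER[design]
    first_invitation = PAPER_PACKAGE if web is None else min(web, PAPER_PACKAGE)
    cash = first_invitation if incentive else None
    return [
        (i * DAYS_APART, name, i == web, i == cash)
        for i, name in enumerate(MAILINGS)
    ]


for row in mailing_schedule("web-intensive", incentive=True):
    print(row)
```

Under these rules the incentive lands in the advance letter for the web-intensive design and in the paper questionnaire package for the concurrent and mail only designs.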

Analysis Plan

We compared the incentive and no incentive groups on response rates, web completion rates, and demographic and socioeconomic characteristics. These comparisons were made by contact strategy for both fresh and recontact cases. For the recontact cases, we also compared the incentive and no incentive groups on their SCA baseline scores.

Results

Table 1 reports the response rates by experimental group. Controlling for fresh versus recontact case status, the incentive yielded higher response rates (Wald chi-square [df=1]=40.1, p<0.0001). The difference in response rates between fresh and recontact cases did not differ significantly by incentive group. We also tested the combined effect of incentive and contact strategy within the fresh and recontact groups (results not shown); these effects were not significant.
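The paper does not specify the model behind this Wald statistic. One common way to test an incentive effect while controlling for case status is a logistic regression of response on an incentive indicator and a recontact indicator. The following minimal sketch assumes that model, fits it by Newton-Raphson on grouped counts, and returns the Wald chi-square for the incentive coefficient. The cell layout, function names, and example counts are hypothetical, and the counts are not the study's data. The helper `chi2_df1_p` also converts a reported statistic to a p-value; for 40.1 on one degree of freedom, it gives a value well below 0.0001.

```python
import math


def chi2_df1_p(wald):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(wald / 2.0))


def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        pv = a[col][col]
        a[col] = [v / pv for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [v - f * c for v, c in zip(a[r], a[col])]
    return [row[n:] for row in a]


def score_and_information(beta, cells):
    """Score vector and Fisher information for grouped binomial logistic data."""
    grad = [0.0] * 3
    info = [[0.0] * 3 for _ in range(3)]
    for incentive, recontact, responded, fielded in cells:
        x = (1.0, float(incentive), float(recontact))
        p = 1.0 / (1.0 + math.exp(-sum(b * xi for b, xi in zip(beta, x))))
        w = fielded * p * (1.0 - p)
        for j in range(3):
            grad[j] += (responded - fielded * p) * x[j]
            for k in range(3):
                info[j][k] += w * x[j] * x[k]
    return grad, info


def incentive_wald_test(cells, iterations=50):
    """Fit logit(response) = b0 + b1*incentive + b2*recontact by Newton-Raphson.

    cells: (incentive, recontact, responded, fielded) tuples.
    Returns (b1, Wald chi-square for b1, p-value).
    """
    beta = [0.0, 0.0, 0.0]
    for _ in range(iterations):
        grad, info = score_and_information(beta, cells)
        cov = invert(info)
        beta = [b + sum(cov[j][k] * grad[k] for k in range(3)) for j, b in enumerate(beta)]
    _, info = score_and_information(beta, cells)
    wald = beta[1] ** 2 / invert(info)[1][1]
    return beta[1], wald, chi2_df1_p(wald)


# Made-up counts for illustration only (not the study's data).
example_cells = [(1, 0, 45, 100), (0, 0, 30, 100), (1, 1, 60, 100), (0, 1, 45, 100)]
print(incentive_wald_test(example_cells))
```

A survey-weighted analysis would need design-based variance estimates rather than the simple inverse information used here.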

Table 2 shows the web completion rates by sample, contact strategy, and incentive group. For the fresh cases, the incentive significantly increased the web completion rate under the web-intensive contact strategy (Wald chi-square [df=1]=6.8066, p=0.01); that is, when the incentive was offered, fresh-sample respondents in this strategy were more likely to respond by web. The difference was in the same direction for the recontact cases but was not statistically significant, possibly because of the small sample size. Under the concurrent contact strategy, recontact respondents who received the incentive were less likely to respond by web (Wald chi-square [df=1]=4.3730, p=0.04).
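The web completion rate is the share of completed interviews done by web. For an unadjusted comparison of two groups, the Wald chi-square for the group coefficient in a logistic regression with a single binary predictor equals the squared log odds ratio divided by the sum of the reciprocal cell counts. The following minimal sketch assumes that comparison, since the paper does not state its exact model. The function names and the example counts are hypothetical, and the counts are not the study's data. The p-value helper reproduces the reported p-values from the reported statistics: 6.8066 gives about 0.009 and 4.3730 gives about 0.037, which round to 0.01 and 0.04.

```python
import math


def web_completion_rate(web_completes, total_completes):
    """Share of completed interviews that were done on the web."""
    return web_completes / total_completes


def wald_log_odds_ratio(web_a, total_a, web_b, total_b):
    """Wald chi-square (df=1) comparing web completion between groups a and b.

    Equals the Wald test for the group coefficient in a logistic regression
    with one binary predictor. All four cells must be positive.
    """
    cells = [web_a, total_a - web_a, web_b, total_b - web_b]
    if min(cells) <= 0:
        raise ValueError("all four cells must be positive")
    log_or = math.log((web_a * (total_b - web_b)) / ((total_a - web_a) * web_b))
    variance = sum(1.0 / c for c in cells)
    return log_or ** 2 / variance


def chi2_df1_p(wald):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(wald / 2.0))


# Made-up counts for illustration only (not the study's data).
w = wald_log_odds_ratio(web_a=30, total_a=50, web_b=20, total_b=50)
print(web_completion_rate(30, 50), web_completion_rate(20, 50), w, chi2_df1_p(w))
```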

As shown in Tables 3 and 4, respondent characteristics were similar across incentive groups in both the fresh and recontact samples, with one exception: in the recontact sample, the percentage of stock owners was significantly higher in the no incentive group.

Table 5 shows the baseline scores from the first interview for the recontact cases on three leading indices that the SCA reports monthly. The Index of Consumer Sentiment is composed of five survey items: (1) current personal finances, (2) expected personal finances, (3) expected business conditions in 12 months, (4) expected business conditions in 5 years, and (5) current buying conditions. The Index of Current Economic Conditions includes items 1 and 5, and the Index of Consumer Expectations includes items 2, 3, and 4. None of the pairwise comparisons of index scores between the incentive groups was significant.
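The way the three indices are assembled from the five items can be sketched as follows. Each item is first converted to a relative score: the percent giving favorable replies minus the percent giving unfavorable replies, plus 100. Each index is then a scaled sum of its items' relative scores. The divisors and the 2.0 constant below follow the SCA's published index formulas as we understand them and should be checked against the SCA documentation before use. The function names and example percentages are hypothetical, and the percentages are not study data.

```python
def relative_score(pct_favorable, pct_unfavorable):
    """Relative score for one item: % favorable - % unfavorable + 100."""
    return pct_favorable - pct_unfavorable + 100.0


def sca_indices(x):
    """ICS, ICC, and ICE from the relative scores of items 1-5, in order.

    Divisors and the 2.0 constant are assumed from the SCA's published
    formulas; verify against the SCA documentation before relying on them.
    """
    x1, x2, x3, x4, x5 = x
    return {
        "ICS": (x1 + x2 + x3 + x4 + x5) / 6.7558 + 2.0,  # all five items
        "ICC": (x1 + x5) / 2.6424 + 2.0,                 # current: items 1 and 5
        "ICE": (x2 + x3 + x4) / 4.1134 + 2.0,            # expected: items 2, 3, 4
    }


# Made-up (favorable %, unfavorable %) pairs for one group, not study data.
example = [(40, 30), (35, 15), (45, 40), (50, 35), (60, 25)]
scores = [relative_score(f, u) for f, u in example]
print(sca_indices(scores))
```

Computing the indices separately for the incentive and no incentive groups gives the group-level baseline scores that a table like Table 5 compares.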

Discussion

In addition to testing the effect of incentives, these experiments allowed the principal investigator to test the mailing protocol and mailing packages for a general population. Overall, the prepaid cash incentive increased response rates in this study, in line with previous literature (Church 1993). Additional analyses provided information on how much the incentive might change nonresponse bias in a general population survey of economic attitudes.

According to the leverage-saliency theory of survey participation, the decision to participate depends on how important each survey feature is to the sample member (its leverage), the direction of its influence, and how salient it is made during the request (Groves, Singer, and Corning 2000). Moreover, the importance, saliency, and direction of influence of a feature may differ across people. As a result, incentives could motivate people with certain characteristics to respond at disproportionately higher rates. The higher the covariance between the survey measures and the response propensities, the higher the nonresponse bias (Groves, Presser, and Dipko 2004; Groves et al. 2006, 2009). For example, an incentive that disproportionately attracts poorer people would be a concern, because it could increase the covariance between economic attitudes and response propensities and, consequently, the nonresponse bias. This study therefore used a set of socioeconomic and demographic characteristics to examine differences in respondent characteristics by incentive group. For the fresh sample, these characteristics were comparable. For the recontact cases, the significant difference in stock ownership requires further research, but the baseline scores did not suggest any difference in nonresponse bias by incentive group. Overall, we conclude that the incentive increased the average response propensity without substantially changing the covariance component of the nonresponse bias.
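The covariance argument can be made concrete with the approximation commonly used in this literature: Bias(ȳ_r) ≈ Cov(ρ, y) / ρ̄. Here ρ is each person's response propensity, y is the survey variable, and ρ̄ is the mean propensity. The following minimal sketch uses a made-up four-person population to show why raising the average propensity without changing the covariance reduces the bias. It also shows that scaling every propensity by the same factor leaves the bias unchanged. All values and names are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def covariance(a, b):
    """Population covariance of two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / len(a)


def approx_nonresponse_bias(propensities, y):
    """Approximate bias of the respondent mean: Cov(rho, y) / mean(rho)."""
    return covariance(propensities, y) / mean(propensities)


# Hypothetical population: response propensities and an economic-attitude
# score that rises with propensity (made-up values).
rho = [0.2, 0.3, 0.4, 0.5]
y = [60.0, 70.0, 80.0, 90.0]

baseline = approx_nonresponse_bias(rho, y)
# Incentive that raises everyone's propensity by the same amount:
# covariance unchanged, mean propensity higher, so the bias shrinks.
uniform_lift = approx_nonresponse_bias([r + 0.1 for r in rho], y)
# Incentive that multiplies every propensity by the same factor:
# covariance and mean scale together, so the bias is unchanged.
proportional_lift = approx_nonresponse_bias([r * 1.5 for r in rho], y)
print(baseline, uniform_lift, proportional_lift)
```

An incentive that raises propensities unevenly, for example mainly among people with particular economic attitudes, changes the covariance term itself. This is the case the comparisons of respondent characteristics and baseline scores are meant to detect.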

For the fresh cases, the incentive increased web completion rates under the web-intensive contact strategy. In contrast, among the recontact cases under the concurrent contact strategy, the incentive group had a lower web completion rate. Given the small sample sizes, this finding should be interpreted with caution, and future research should investigate whether it replicates. One possible explanation is that the incentive prompts sample members to respond right away using the mode at hand, which in the concurrent design is the paper questionnaire enclosed with the incentive.