Background

Ranking scales have long been used to obtain an ordering of researcher-provided alternatives from a respondent. Typically, respondents assign a rank value (a whole number from 1 to X, where X is the total number of alternatives to rank) based upon a continuum provided by the researcher, and each rank value can only be used once. These scales are used to obtain information about the relative relationships among alternatives (Alreck and Settle 2004). A major benefit of forced ranking is that it mirrors real-life situations common to all respondents. For example, when shopping for peanut butter at the grocery store, an individual must choose among several brands.

Forced ranking scales have two major shortcomings: (1) they do not permit respondents to indicate ties between alternatives, and (2) the data provide no information about the perceived distance between ranked alternatives. By not allowing ties between alternatives, forced ranking may not always produce valid results. If a respondent values two alternatives to the same degree, forced ranking does not allow the respondent to express this psychological reality; he or she is required to rank one alternative higher than the other. In such cases, the ranks assigned to the two alternatives are arbitrary.

In addition to the constraint imposed by a lack of ties, the data produced by a forced ranking scale are ordinal (Alreck and Settle 2004; Stevens 1946). Therefore, information about the distance between alternatives is not collected. Consider a respondent who ranks three alternatives. The alternative that is assigned a rank of 1 may be preferred over the other two alternatives, but the scale provides no information regarding the magnitude of that preference. It is possible that the alternative ranked 1st is preferred far more than the alternatives that are ranked 2nd and 3rd. It is also possible that a respondent’s preferences for the alternatives ranked 1, 2, and 3 vary only slightly in magnitude. Since a traditional ranking scale is not sensitive enough to detect these differences, valuable information about the intervals between alternatives is lost.

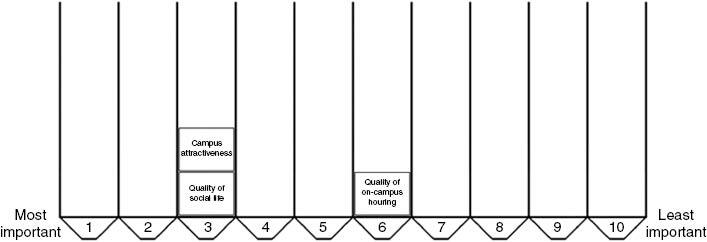

The purpose of the present study was to develop and evaluate a novel ranking format that allows respondents both to indicate ties and to express distance between ranked alternatives. This new paper-based ranking format, called the BINS system (Figure 1), was based on the design of a visual analog scale and can be used in either in-person interviewing or in mail surveys. The BINS format provides respondents with up to 10 alternatives to be ranked. This 10-alternative limit was imposed to ensure that the ranking task is not too cumbersome for respondents (Alreck and Settle 2004; Dillman, Smyth, and Christian 2014). The back side of each alternative is affixed with an adhesive allowing for easy placement and rearrangement (similar to Rokeach’s 1973 Value Survey).

To rank an alternative, respondents paste an alternative into one of 10 bins of equal dimension. These bins are arranged along a horizontal continuum and are anchored on both ends by the variable being measured (“Importance” in the case of this study). If a respondent places an alternative in the bin closest to the label of “Most Important,” that respondent is indicating that he or she values that alternative the most. Conversely, by placing an alternative in the bin closest to the label of “Least Important,” a respondent is indicating that he or she values that alternative the least. Respondents using the BINS format can indicate an equivalent rank by stacking alternatives within the same bin (Figure 2). Each bin can have as many or as few alternatives in it as a respondent desires.

The BINS format allows respondents to indicate distance between alternatives. By creating space between two alternatives, respondents can communicate the interval between those ranks. Figure 1 demonstrates how a respondent might indicate closeness in importance between his or her first and second ranked alternatives (bins 2 and 3), and also how he or she can indicate a disparity between his or her second and third ranked alternatives (bins 3 and 6).
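One way to record a completed BINS response for analysis is sketched below. This is only an illustration, not part of the study's materials: the function names, the placeholder labels "A" through "D", and the convention that bin 1 is the bin closest to "Most Important" are all assumptions. The bin numbers 2, 3, and 6 follow the Figure 1 example, with one extra stacked alternative added to show a tie.

```python
from collections import defaultdict

NUM_BINS = 10  # the BINS continuum has 10 bins; bin 1 is ASSUMED closest to "Most Important"


def group_by_bin(response):
    """Return {bin: sorted alternatives} for the occupied bins, in bin order."""
    for alternative, bin_number in response.items():
        if not 1 <= bin_number <= NUM_BINS:
            raise ValueError(f"{alternative!r} is in bin {bin_number}, outside 1..{NUM_BINS}")
    bins = defaultdict(list)
    for alternative, bin_number in response.items():
        bins[bin_number].append(alternative)
    return {b: sorted(bins[b]) for b in sorted(bins)}


def ties(response):
    """Return the groups of alternatives stacked in the same bin."""
    return [group for group in group_by_bin(response).values() if len(group) > 1]


def gaps(response):
    """Return the bin distance between each pair of consecutive occupied bins."""
    occupied = list(group_by_bin(response))
    return [later - earlier for earlier, later in zip(occupied, occupied[1:])]


# Hypothetical response: placeholder labels mapped to bin numbers.
response = {"A": 2, "B": 3, "C": 6, "D": 6}
print(group_by_bin(response))  # {2: ['A'], 3: ['B'], 6: ['C', 'D']}
print(ties(response))          # [['C', 'D']]
print(gaps(response))          # [1, 3]
```

Storing the bin number for each alternative, rather than a rank from 1 to X, keeps both pieces of information that a forced ranking discards: stacked alternatives share a bin number, and empty bins show up as gaps larger than 1.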

The present research addresses two main questions about the BINS ranking format. First, is the concept of stacking alternatives to communicate an equivalent rank easy to learn and a desirable feature of a ranking format? Second, when ranking alternatives on Importance, do participants perceive the distance from one bin to another as an equal psychological interval?

Method

Materials

Two versions of the BINS format were used in this study. In the explicit continuum version, each bin was numbered (from 1 to 10, left to right; Figure 1). The implicit continuum version of the BINS format did not have numbered bins. Both versions of the format (including ranking task instructions) were printed on 20×30 inch sheets of foam board. Foam board is best suited for the reusability required by in-person interviewing (as in this experiment), but the format can also be printed on paper for mail surveys. (See Appendix A for sample instructions that can be printed above the scale.)

Each alternative to be ranked was printed inside of a bordered 1.75×1.25 inch square of paper and glued to a piece of white cardboard with the same dimensions. A strip of adhesive putty on the back of each alternative allowed participants to easily rank and reposition the alternatives on the foam board. Alternatives can also be printed on peel-and-stick labels (as in Rokeach 1973) for mail surveys.

Procedure

Forty-eight University of Dayton undergraduate students in an introductory psychology class volunteered to participate in this study. Participants were randomly assigned to complete their ranking task using either the implicit continuum or the explicit continuum version of the BINS format. After listening to the experimenter explain the BINS ranking process, all participants demonstrated their understanding of the task by successfully completing a practice question. Participants then completed an experimental ranking task by ranking 10 aspects of the University of Dayton (Appendix B) from “Most Important” to “Least Important” in their decision to attend the university.

Results

Continuum Preference

After participants completed their ranking task, they were shown a diagram of the BINS continuum that they did not use. Participants were then asked if they felt that their ranking task would have been easier if they had used the other continuum. The majority (64 percent) of participants who used the implicit continuum indicated that they would have preferred to use the explicit continuum. The majority (83 percent) of participants who used the explicit continuum indicated that they would have preferred to continue using their own continuum. Overall, 73 percent of participants would have preferred to use the explicit continuum over the implicit continuum.

Stacking

In order to determine if participants perceived two stacked alternatives as having an equal rank, participants were shown a diagram of a ranking completed by Sally, a fictional character. We asked the participants to describe the relationship between the alternatives that were stacked on top of one another (Figure 3). Participants were asked to decide which of four responses best described Sally’s rankings in Figure 3. There was near-unanimous agreement that the two alternatives were of equal importance on the given scale (Table 1).

Participant satisfaction with stacking was positive (Table 2). Participants reported that the stacking process was easy to learn and perform. Overall, 82 percent of participants used stacking when completing their ranking. When asked if they would prefer a ranking format that did not allow them to stack alternatives, participants expressed a preference for stacking (Table 2). While these results were not derived from a direct comparison between a stacking format and a nonstacking format, the data suggest that given a choice between two formats, participants would prefer to use a stacking format.

Relative Distance

Michell (1997) established that in order to create a valid interval scale, the attribute being measured by the scale must demonstrate an additive structure. Therefore, in order for the BINS format (when using the Importance construct) to be considered to have equal intervals, respondents using the format must perceive a numeric relationship between alternatives ranked in each bin. To determine if this requirement was met, participants were asked two questions. The first question (Figure 4) was designed to determine if participants saw the relationship between bins as being multiplicative (i.e., that an alternative in the 3rd bin was twice as important as an alternative in the 6th bin).

The relationship between continuum type (implicit vs. explicit) and response classification (identifying the relationship between bins as multiplicative or not) was significant, χ²(1, n=48)=15.57, p<0.001. As shown in Table 3, 100 percent of participants in the explicit continuum condition indicated that they saw a numerical relationship between two alternatives in different bins. Conversely, only 11 of the 25 participants (44 percent) in the implicit continuum condition saw the relationship between bins as being multiplicative. The majority of participants in the implicit continuum condition saw no numerical relationship when comparing two alternatives in different bins (56 percent).

The second question (Figure 5) was designed to determine if participants saw the relationship between bins as divisible (i.e., that an alternative in the 6th bin was half as important as an alternative in the 3rd bin). Participants’ responses are presented in Table 4.

The relationship between continuum type (implicit vs. explicit) and response classification (identifying the relationship between bins as divisible or not) was significant, χ²(1, n=48)=6.52, p=0.011. As shown in Table 4, more participants in the explicit continuum condition (87 percent) than in the implicit continuum condition (48 percent) saw the relationship between bins as divisible.
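The two chi-square tests above can be approximately reproduced from the reported figures. The sketch below is a reconstruction under stated assumptions, not the authors' analysis code. The cell counts are inferred: 25 implicit-condition participants (stated), hence 23 explicit-condition participants out of 48; 87 percent of 23 is taken as 20, and 48 percent of 25 as 12. The use of Yates' continuity correction is also an assumption, and the function name is illustrative.

```python
import math


def yates_chi_square(a, b, c, d):
    """Yates-corrected chi-square for the 2x2 table [[a, b], [c, d]] (df = 1)."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    # Shrink |ad - bc| by n/2 (never below zero) before squaring.
    diff = max(abs(a * d - b * c) - n / 2, 0.0)
    chi2 = n * diff ** 2 / (row1 * row2 * col1 * col2)
    # Upper-tail probability of a chi-square variable with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# ASSUMED counts, laid out as (explicit yes, explicit no, implicit yes, implicit no).
chi2_mult, p_mult = yates_chi_square(23, 0, 11, 14)  # multiplicative question
chi2_div, p_div = yates_chi_square(20, 3, 12, 13)    # divisible question

print(round(chi2_mult, 2))  # 15.57
print(round(chi2_div, 2))   # 6.52
print(p_mult, p_div)
```

Under these assumed counts the sketch returns the two reported statistics, which suggests, but does not confirm, that a continuity correction was applied in the original analysis.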

Discussion

The results suggest that the ability to indicate equivalent ranks via the BINS format’s stacking mechanism was a simple, intuitive, and desirable process. This feature of the BINS format is notable because it allows respondents to express a ranking that more closely resembles their intended response and is a feature not available in traditional forced ranking procedures. This information will be useful to researchers seeking to measure and predict human behavior in real-life ranking situations where ties between alternatives are possible.

Most respondents provided with an implicit continuum did not see a precise numeric relationship between alternatives in the bins. However, respondents provided with an explicit continuum (through numbering the bins) easily expressed numeric relationships between alternatives. This supports the notion that participants perceive the explicit BINS format as having equal intervals.

Researchers employing the BINS format are provided with information about the relative relationships of ranked alternatives that was previously unavailable to them. However, it is important to note that this research does not claim that the data obtained using the BINS format are compatible with parametric analysis. Despite the fact that these results suggest that participants were able to use the BINS format to express relative distance between alternatives, it would be inappropriate to use measures such as mean and standard deviation to describe data obtained by the BINS format. Reporting that a beautiful campus has an importance mean of two has no practical significance. The data become useful only when comparing the scores of at least two alternatives; when a beautiful campus has an importance mean of two and social life has a mean of four, researchers can deduce that on average, participants rated a beautiful campus as twice as important as social life. Although the explicit BINS format does not elevate ranking data to the level of interval measurement, the format represents an improvement over the conclusions that can be drawn using a traditional forced ranking format.

In this study, the only continuum on which participants completed a ranking task was “Importance in your decision to attend the University of Dayton.” Future studies should examine the use of other continua (Most Favorite to Least Favorite, for example) and other domains (e.g., various brands of consumer products) and determine if the equal intervals relationship seen by participants in the present study still holds. Future studies should also examine a direct comparison between traditional ranking and the BINS format on factors such as completion time and usability. Additionally, a future study will compare web-based versions of a traditional ranking format to the BINS format.

Overall, the results from this study paint a clear picture of the advantages of the BINS format for either in-person or mail surveys. By simultaneously providing information about the order and relative relationship among alternatives and by allowing for tied alternatives, the data obtained by the BINS ranking format offer a unique snapshot of the psychological reality of survey respondents.

Appendix A

Sample instructions that can be included with a printed version of the BINS format.

For this question, we would like for you to rank ten aspects of the University of Dayton (included on peel-and-stick labels) according to how important they were in your decision to attend. By placing an aspect in the bin closest to “Most Important,” you are indicating that this aspect influenced your decision to attend the University of Dayton the most. By placing an aspect in the bin closest to “Least Important,” you are indicating that this aspect influenced your decision to attend the University of Dayton the least. If two aspects were equally important in how they affected your decision to attend the University of Dayton, you can stack them on top of one another within the same bin. Aspects can be placed in any bin along the continuum. Each bin can contain as many or as few aspects as you’d like. It is ok if some bins do not have any aspects in them.

Appendix B

Alternatives to be ranked in order of importance.

- Availability of recreational facilities on campus

- Campus surroundings (neighborhood)

- Quality of on-campus housing

- Cost (after scholarships and grants)

- Personal attention to students

- Quality of social life

- Quality of majors of interest to you

- Campus attractiveness

- Academic reputation

- Quality of academic facilities (library, etc.)

Aspects were derived from a listing of responses most frequently obtained by the University of Dayton’s Admissions Office.