Introduction

Previous research suggests that self-administered, mailed surveys using pre-paid incentives yield higher response rates than surveys without an incentive (Berry and Kanouse 1987; Church 1993; Dillman 2000). However, the effect of pre-paid incentives on response rates for web surveys is unclear. Some studies have found no effect on response rates (Downes-Le Guin et al. 2002; Heerwegh et al. 2005; Kypros and Gallagher 2003), while others have reported lower response rates associated with incentives (Cook, Heath, and Thompson 2000). In this study, we explore the effect of a post-paid incentive on response rates to a web-based survey and elicit relative preferences for a $5 cash or e-certificate incentive.

Methods

Sample

The sample comprised adult patients from two southern California medical centers, selected because a substantial number of patients (about 30 percent) within each were registered users of Kaiser Permanente’s My Health Manager. My Health Manager is a sophisticated patient portal that supports secure communication with physicians and other providers (including pharmacy staff), appointment setting, access to personal medical records, test results, and pharmacy information, provision of health care reminders, and tools for requesting prescription refills. Patients had to have either visited or emailed one of 30 primary care providers in the study within the prior 12 months (McInnes et al. 2012).

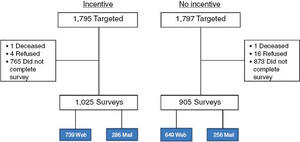

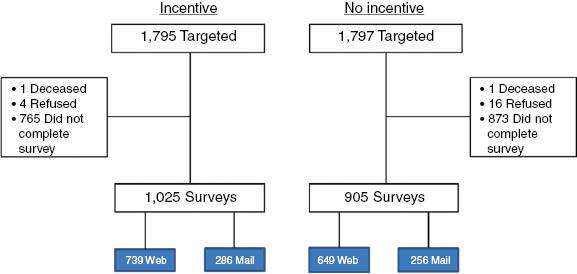

We used a split-ballot approach and randomized half of the sample from each medical center to a post-paid incentive (experimental group) and half to no incentive (control group): approximately 1,800 patients (900 per medical center) were assigned to each group (see Figure 1).
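The split-ballot assignment described above can be sketched as a shuffle within each medical center followed by an even split. This is a minimal illustration, not the study's actual procedure: the patient IDs, center labels, seed, and the `split_ballot` function name are all hypothetical, and the sample sizes simply mirror the approximate figures given in the text.

```python
import random
from collections import Counter


def split_ballot(patients_by_center, seed=0):
    """Randomly assign half of each center's patients to each group.

    Randomizing within each center keeps the incentive and control
    groups balanced across centers. The seed is an arbitrary choice
    made only so the sketch is reproducible.
    """
    rng = random.Random(seed)
    assignment = {}
    for center, patient_ids in patients_by_center.items():
        shuffled = list(patient_ids)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        for pid in shuffled[:half]:
            assignment[pid] = "incentive"
        for pid in shuffled[half:]:
            assignment[pid] = "control"
    return assignment


# Hypothetical IDs: about 1,800 sampled patients per medical center,
# so that each group receives about 900 patients per center.
sample = {
    "center_A": [f"A{i:04d}" for i in range(1800)],
    "center_B": [f"B{i:04d}" for i in range(1800)],
}
groups = split_ballot(sample)

# Tally group sizes by center (first character of the hypothetical ID).
counts = Counter((pid[0], group) for pid, group in groups.items())
for key in sorted(counts):
    print(key, counts[key])
```

Shuffling within each center, rather than across the pooled sample, is what guarantees the equal split per center.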

Patients were invited to complete a patient experience survey via an email sent to the email address they had registered on the Kaiser Permanente system. The email appeared to come from kp.org but was generated and sent by RAND’s data collection system. The email contained a link to the survey URL and the individual’s survey code, which was required to access the survey. The only difference in the invitation text between the experimental and control groups was the addition of one sentence in the experimental group invitation, offering a $5 incentive for completion of the survey (those who responded to the web-based survey were given the choice between $5 in cash and a $5 Target e-certificate). A patient received a maximum of three emailed invitations: an initial invitation, a first reminder sent to nonresponders, and a second reminder sent to nonresponders. The first reminder was sent 12 days after the initial invitation, and the second reminder was sent 30 days after the initial invitation. Fifty-seven days after the initial email invitation, nonresponders were sent a paper version of the survey via first-class mail.

Analysis Plan

We compare the characteristics of respondents and response rates for the experimental and control groups. We also compare the number of days between the request to complete the survey and the date it was received, as well as item non-response rates. In addition, we examine whether item responses differ by group. We also document preferences for the cash vs. the e-certificate incentive among respondents to the web-based survey. Those in the incentive group who completed the mail version of the survey were sent $5 cash. Finally, we estimate the costs per completed survey, including the dollar value of the incentive and the labor associated with incentive preparation and delivery. For the cash incentive, the unit cost includes the cost of the incentive, labor for assembly of incentive mailings, and first-class postage and an envelope for delivery. For the e-certificate incentive, the unit cost includes the cost of the incentive and the labor associated with emailing the incentive.

Results

The characteristics of respondents in the incentive and control groups were similar (Table 1), including age, education, length of membership in Kaiser Permanente, number of emails sent to the primary care provider in the 12 months prior to sampling, and the overall 0 to 10 rating of the primary care provider. However, the incentive group had more Asians [8 percent vs. 5 percent, χ2 (1 df)=7.92, p=0.005] and fewer Blacks/African Americans [2 percent vs. 4 percent, χ2 (1 df)=11.00, p=0.001] than the control group.

As shown in Table 2, those randomized to the incentive were significantly more likely to respond to the survey than those in the control group. The delay between a request for survey completion and the receipt of a completed survey was similar for the two groups (5.76 days and 5.83 days for the incentive and control groups, respectively). Rates of completion of the survey were similar but higher for the incentive group [9 percent incentive vs. 97 percent control, χ2 (1 df)=5.99, p=0.0144]. In addition, responses to survey items were similar for those in the two groups (Table 3).
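As a consistency check on the test statistics reported in the Results, the p-value for a chi-square statistic with 1 degree of freedom can be computed from the complementary error function, since p = erfc(sqrt(χ2/2)) in that case. The sketch below is illustrative only: the function names are hypothetical, the 2x2 helper is the standard uncorrected Pearson formula (the source does not say whether a continuity correction was applied), and the only inputs taken from the paper are the reported χ2 and p values.

```python
import math


def chi2_p_value_1df(chi2):
    """Upper-tail p-value for a chi-square statistic with 1 df."""
    return math.erfc(math.sqrt(chi2 / 2.0))


def pearson_chi2_2x2(a, b, c, d):
    """Uncorrected Pearson chi-square for the 2x2 table [[a, b], [c, d]].

    Rows are the two groups; columns are the two outcome categories.
    """
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Chi-square statistics and p-values as reported in the text.
reported = [(7.92, 0.005), (11.00, 0.001), (5.99, 0.0144)]
for chi2, p_reported in reported:
    p = chi2_p_value_1df(chi2)
    print(f"chi2={chi2:.2f}  computed p={p:.4f}  reported p={p_reported}")
```

Each computed p-value rounds to the value reported in the text, which suggests the statistics are internally consistent with 1 degree of freedom.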

Table 4 shows that respondents randomized to the incentive condition were significantly more likely to prefer a cash incentive over the e-certificate; 69 percent of the incentives delivered to web respondents were in the form of cash. The unit cost per incentive was $8.32 for cash and $7.49 for the e-certificate.
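The cost figures above lend themselves to a short worked calculation. The sketch below takes the two unit costs and the 69 percent cash share from the text; the blended average is an illustrative derived quantity, not a figure reported in the study, and it assumes the 69 percent share is exact and applies only to incentives delivered to web respondents.

```python
from decimal import Decimal

# Figures taken from the text.
CASH_UNIT_COST = Decimal("8.32")   # dollars per cash incentive
ECERT_UNIT_COST = Decimal("7.49")  # dollars per e-certificate incentive
CASH_SHARE = Decimal("0.69")       # share of web incentives paid in cash

# Extra cost of delivering cash rather than an e-certificate.
difference = CASH_UNIT_COST - ECERT_UNIT_COST

# Illustrative blended unit cost across web respondents (an assumption
# of this sketch, not a number reported in the study).
blended = CASH_SHARE * CASH_UNIT_COST + (1 - CASH_SHARE) * ECERT_UNIT_COST

print(f"difference: ${difference}")
print(f"blended unit cost: ${blended.quantize(Decimal('0.01'))}")
```

Using `Decimal` rather than floats keeps the dollar amounts exact to the cent.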

Discussion

The results of this experiment indicate that a post-paid incentive can significantly increase the response rate to a web-based survey. The improvement in response rate was significant in both of the medical centers in our study (results not shown but available upon request). Our experiment controlled for many of the factors that are associated with higher response rates in web surveys (Cook, Heath, and Thompson 2000): number of invitations to complete the survey, mode of delivery of invitations, and use of personalization. By treating our incentive and control groups alike in the number, mode of delivery, and level of personalization of survey contacts, we were able to identify differences in response due to the use of the incentive.

The time to complete a survey and the responses to survey items did not differ between the incentive and the no-incentive groups, so the 7 percentage point difference in response rate did not alter the conclusions that would have been drawn about these items in this sample. Further research is needed to see if and when the higher response rates associated with incentives for web surveys have an impact on survey responses.

This study also shows that a cash incentive is more desirable than an e-certificate to survey respondents, consistent with a web survey that used pre-paid incentives (Birnholz et al. 2004). The preference for cash makes sense because cash gives the respondent greater flexibility in what to purchase than an e-certificate tied to a specific retailer. However, the unit cost of the cash incentive was 83 cents greater than that of the e-certificate.

Acknowledgements

This paper was presented at the 2014 Society for Computers in Psychology Meeting, Long Beach, CA. The work was supported by a cooperative agreement from the Agency for Healthcare Research and Quality (U18 HS016980).