Abstract

Traditional wisdom about mail survey envelope design warns that colors and extra logos can make the envelope look like marketing, fundraising, and other “junk mail.” Yet a distinctive and inspiring graphic representing a recognizable and beloved sponsor, combined with a prominent motivational message, could distinguish the survey from junk mail and encourage participation. We present evidence from a randomized experiment in which half of the mailings included a sponsor logo on the envelope and a motivational insert affixed to the cover letter. Findings show that conventional design wisdom is sound. The logo envelope received about a 1.3 percentage point lower return rate from the first mailing in which it was used. However, the difference was significant in only one of the two communities sampled, suggesting that logo effects may not be universal. Only age and dwelling type (single-unit vs. multi-unit) appeared to be related to the effect of the logo. Suggestions for future research and practice are discussed.

Introduction

Survey design guidance recommends using professional yet plain mailing materials to maximize response rates (Dillman 1978; Dillman, Smyth, and Christian 2014; Fowler 2014). Dillman et al. (2014) recommend using a “recognized and respected logo with the return address” and advise designers to “limit print to standard colors such as black or charcoal gray” (p. 384, Guideline 10.13). These recommendations imply that unfamiliar logos and unusual colors may signal marketing or fundraising to sampled households, rather than a survey in service of the public good.

The few studies testing markings and messaging on envelopes show decreased response (Dykema et al. 2012; Levin 2015). Yet, logos and markings can vary on many dimensions, and their effects may not be universal across populations. Government agency logos may lend status to a survey, but they do not necessarily increase response rates (Edwards et al. 2006). Even printing “Thank you! A cash gift is enclosed” on the envelope may suppress response, suggesting that any markings should be avoided (Dykema et al. 2012).

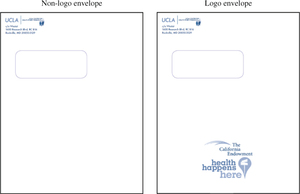

We hypothesized that a funder logo could work in this survey because the funder was conducting community-wide health promotion programs in the sampled communities. Households not actively participating might still recognize the logo from local advertising. The logo also had an explicit health promotion message (see Figure 1), which we thought might induce proactive behavior and motivate response. A sponsor endorsement was also inserted into the mailing.

Research Question:

“Will the logo and insert make respondents more likely to open, complete, and return the questionnaire? If yes, among whom?”

Methods and Data

We conducted this experiment in an address-based sampling (ABS) pilot test with a mail-to-phone design. A self-administered screener questionnaire was mailed to sampled addresses in two California communities (Jans et al. 2013), which had several hard-to-survey characteristics including moderate-to-high Spanish use, high rates of renter-occupation and multi-unit dwelling, younger age, higher proportion of Hispanic/Latino ethnicity, and moderate rates of poverty (see Table 1).

Mailing Protocol and Screener Questionnaire

A three-mailing protocol was used, with the logo and insert tested in the second and third mailings (hereafter called “experimental mailings 1 and 2”). We did not manipulate the first full-protocol mailing, to minimize any negative impact on the final results. The screener questionnaire asked household demographic and health questions and requested phone numbers at which the household could be contacted for an interview (see Appendix). The outgoing envelope, used in full-protocol mailing 1 and as the “control” condition in experimental mailings 1 and 2, was white, 9”×12”, with a UCLA logo in the return address field. A cover letter, a one-page FAQ, a one-page screener form, and a postage-paid return envelope were enclosed, printed in English and Spanish. Mailings were conducted between October 12 and November 29, 2012.

A random half of the mailings in experimental mailings 1 and 2 included the sponsor’s logo (Figure 1) on the envelope and a sponsor endorsement insert glue-tacked to the cover letter (Figure 2). The same random half received the logo and insert at both experimental mailings (see Table 2). At experimental mailing 2, the entire packet, including the original outgoing envelope, was placed in a Priority Mail envelope for delivery.
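The assignment rule described above, in which one random half of the addresses is fixed across both experimental mailings, could be sketched as follows. This is a minimal Python illustration under stated assumptions, not the procedure actually used in the study; the function names, the seed, the address IDs, and the hypothetical returns are all invented for illustration. Because sampling was done within each community, one might also call the helper separately per community.

```python
import random


def assign_logo_condition(address_ids, seed=2012):
    """Randomly assign half of the addresses (rounded down) to the logo/insert arm.

    Hypothetical helper: returns a dict mapping each address ID to
    "logo_insert" or "control". The same dict is reused for every
    experimental mailing, so a household keeps its condition throughout.
    """
    ids = list(address_ids)
    rng = random.Random(seed)  # fixed seed keeps the assignment reproducible
    rng.shuffle(ids)
    treated = set(ids[: len(ids) // 2])
    return {a: ("logo_insert" if a in treated else "control") for a in ids}


def mailing_list(assignment, nonrespondents):
    """Pair each remaining nonrespondent with the condition assigned earlier."""
    return [(a, assignment[a]) for a in sorted(nonrespondents)]


assignment = assign_logo_condition([f"A{i:03d}" for i in range(10)])
experimental_mailing_1 = mailing_list(assignment, assignment.keys())
# Suppose (hypothetically) A001 and A004 returned a form after experimental mailing 1.
experimental_mailing_2 = mailing_list(assignment, set(assignment) - {"A001", "A004"})
```

Fixing the assignment once and looking it up at each follow-up, rather than re-randomizing, is what keeps the same half in the logo/insert condition at both mailings.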

Results

We analyzed experimental mailings 1 and 2 separately because the Priority Mail envelope creates a different stimulus for householders. Return rates differed by less than one percentage point (n=83 people, 1.3 percent of experimental packet 1). Non-deliverable cases were excluded from these analyses. Analyses were unweighted, but simple random sampling was used within each community.

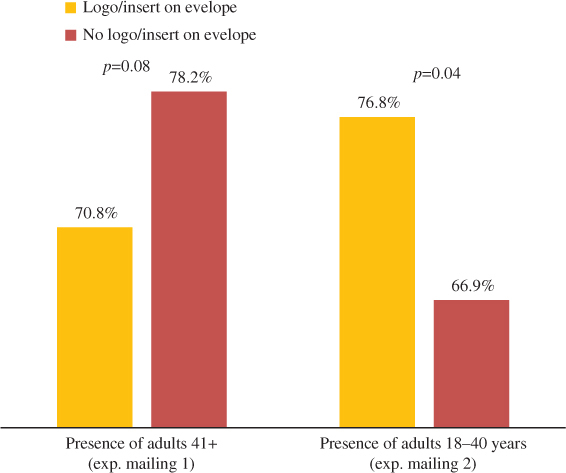

Overall, significantly lower participation was seen among logo/insert packets (see Figure 3), but in the first experimental mailing only. The effect appeared only in Merced.

At experimental mailing 1, forms from logo/insert packets were marginally less likely to indicate someone age 41 or older in the household (see Figure 4). At experimental mailing 2 (i.e., Priority Mail), forms from logo/insert packets were significantly more likely to indicate adults age 18–40 in the household. Chi-square tests of association between logo/insert condition and the presence and number of children and teens, number of adults, language in which the form was completed, and preferred language for the call were also conducted, but none reached even marginal significance.
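For a binary outcome such as the presence of a household member in an age group, a chi-square test of association with the logo/insert condition reduces to a 2×2 table. The sketch below computes the Pearson statistic without a continuity correction and, because the test has one degree of freedom, obtains the p-value from the complementary error function. It is an illustration only: the table layout and the counts in the example are hypothetical, and the study's actual software and options are not reported here. Variables with more than two categories (e.g., number of adults) would need the general r×c form, such as SciPy's `scipy.stats.chi2_contingency`, which by default applies Yates' continuity correction to 2×2 tables and so will give somewhat different values from this sketch.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test of association for a 2x2 table (no continuity correction).

    Hypothetical layout:
                      outcome present   outcome absent
        logo/insert          a                b
        control              c                d
    Returns (chi2, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With df = 1, chi2 equals z squared, so the upper-tail p-value is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts for illustration only (not data from this study).
chi2, p = chi_square_2x2(10, 90, 20, 80)
```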

Single-family and multi-family dwelling units (from sampling frame data) may be differentially affected by the logo/insert manipulation. At experimental mailing 1, single-family dwellings appeared marginally less likely to return a completed form when the logo was used (5.42 percent for logo/insert vs. 6.69 percent for control). Multi-family dwellings showed a difference in the same direction, though it was not significant. There were no differences in effects between housing unit types at experimental mailing 2.
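Return rates of this kind are easy to misread, because an absolute difference in percentage points is not the same as a relative difference. The sketch below assumes that undeliverable cases are dropped from the denominator, consistent with the exclusion noted in the Results; formal response-rate definitions (e.g., AAPOR's) treat dispositions in more detail. Applied to the single-family rates reported above, it shows that a gap of about 1.3 percentage points corresponds to a relative reduction of roughly 19 percent. The function names are hypothetical.

```python
def return_rate(n_returned, n_mailed, n_undeliverable=0):
    """Return rate in percent; undeliverable cases are dropped from the denominator."""
    eligible = n_mailed - n_undeliverable
    if eligible <= 0:
        raise ValueError("no deliverable cases")
    return 100.0 * n_returned / eligible


def compare_rates(rate_treatment, rate_control):
    """Absolute difference in percentage points and relative difference in percent."""
    pp_diff = rate_treatment - rate_control
    relative = 100.0 * pp_diff / rate_control
    return pp_diff, relative


# Single-family dwellings, experimental mailing 1 (logo/insert vs. control).
pp, rel = compare_rates(5.42, 6.69)
print(f"{pp:.2f} percentage points ({rel:.1f}% relative)")
# -1.27 percentage points (-19.0% relative)
```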

Discussion

Findings Summary

We find evidence that a sponsor logo on the outgoing envelope can suppress the return rate of a screener questionnaire mailed to an ABS sample, but only in the first experimental mailing, and only in one community. Logo effects therefore do not appear to be universal. We cannot disentangle community, housing unit, and householder effects in this study, but several demographic features distinguish the two communities. Boyle Heights is more urban, more Hispanic, more Spanish-speaking, and has more renters than Merced, each of which is expected to reduce response. Thus, results may be due to community factors more than individual factors. Printing the logo only in English may partly explain why we see effects in Merced but not in Boyle Heights: linguistically isolated households may not have processed the logo's message. The low absolute rate of response in Boyle Heights may also have limited our power to detect a significant effect.

We found that single-family dwellings were marginally less likely to return a completed form when the logo and insert were used, and multi-family dwellings showed a non-significant difference in the same direction. When mail is delivered to a central location, as is common in multi-unit dwellings, there are more opportunities for mis-delivered mail or for someone other than the sampled residence to receive the package, which could contaminate experimental effects.

While we cannot draw inferences about the effect of Priority Mail per se, we can gain some insight from the age differences in response between experimental mailing 1 (First-Class Mail) and experimental mailing 2 (Priority Mail). Adults age 41+ were reported less often on forms from logo/insert mailings when the mailing was sent First-Class (i.e., experimental mailing 1; logo visible from the outside). Younger adults (age 18–40) were reported more often on forms from logo/insert mailings when mailed via Priority Mail (i.e., experimental mailing 2; logo not visible until the Priority Mail envelope was opened). This may mean that younger adults are more responsive to Priority Mail, or simply that they respond later. With respect to the logo, older adults may be deterred by it (experimental mailing 1) and younger households attracted by it (but only after opening the Priority Mail envelope). We cannot conclusively assess the cause of this age difference in response across mailings because logo/insert (vs. none), Priority Mail (vs. First-Class), and mailing order were not factorially manipulated.

Limitations, Design/Analysis Caveats, and Future Directions

Limited Languages: The logo was only in English, so its message (“Health Happens Here”) may not have been understood by linguistically-isolated households. Only English and Spanish were used for the questionnaire and insert. Future studies should translate all messages into all relevant languages.

Results are Few: Most of the associations we tested between demographics and experimental conditions were non-significant.

Confounding of Logo and Insert: Logo and insert effects could not be assessed independently because they were both part of the same experimental condition. Future research should manipulate these factors (and others, like Priority vs. First-Class mailing) independently. Further, our “control” condition had a UCLA logo in the return address field, so it is not a true control condition with respect to the use of logos.

Truncated Sample: The experiment was implemented in the second and third mailings for practical reasons. Stronger or different effects might appear if the manipulation were applied to the full sample at the first mailing.

Recommendations for Practice

Practice recommendations are simple: do not use logos in general population surveys. Our findings and others’ (Dykema et al. 2012; Levin 2015) strongly suggest that logos and other envelope messages reduce response. A design that appeals to a graphic designer or to an organization’s membership may carry negative connotations for the general population. While logos do not seem to work for general population surveys, they may work for surveys of organizational membership lists or in other situations where the logo has a more direct and personal association.

We are left with the stark reminder that mail response is a “black box” relative to face-to-face or telephone response. We never know what happens to unreturned mailings once they leave our hands. Did sampled households even notice the mailing? Did they notice the envelope and choose to throw it away? Did they open the envelope and then decide not to participate? Sorting and opening mail, like considering a survey participation request, is a dynamic cognitive/perceptual process that likely happens very quickly and subconsciously. Without data on the steps between mailing and response (e.g., receiving, opening, and responding), it is impossible to isolate the mechanism of the logo effect and know how to improve designs. Yet this experience has given us a few insights for practitioners facing similar design decisions.

Know the subjective assessment of the sponsor and logo in the population: Envelope designs will benefit from qualitative or quantitative measures of the population's familiarity with, and opinions of, the logo and sponsor.

Balance sleekness with simplicity: Impressive graphics and inspiring messages may reduce response because they imply that the organization has money, is looking for money, or is something other than a non-profit research operation that truly needs the household’s help. Researchers should seek distinctiveness through plainness, designing mailings that stand apart from junk mail precisely because they are plain.

Create design-robust experiments: Practitioners who want to explore envelope visual design should plan experiments with strong manipulations that isolate design features (e.g., logo vs. insert vs. Priority Mailing). When such experiments are impractical in the field, lab-based experiments may be helpful, particularly when they focus on perceptual processes of mail handling.
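As an illustration of a design that isolates features, the sketch below crosses three two-level factors (logo, insert, and Priority vs. First-Class Mail) in a full 2×2×2 factorial and randomly assigns addresses so that the eight cells are balanced. With every combination represented, the separate effects of each feature, and their interactions, could in principle be estimated, which was not possible in the present study. This is a hypothetical Python sketch: the factor names, seed, and address IDs are invented, and it does not address mailing order, which would require a different design.

```python
import itertools
import random

# Hypothetical two-level design features; names are illustrative only.
FACTORS = {
    "logo": (False, True),
    "insert": (False, True),
    "priority_mail": (False, True),
}


def factorial_cells(factors):
    """List every combination of factor levels (2 x 2 x 2 = 8 cells here)."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in itertools.product(*factors.values())]


def assign_factorial(address_ids, factors=FACTORS, seed=2012):
    """Randomly assign addresses to cells so that cell sizes differ by at most one."""
    cells = factorial_cells(factors)
    ids = list(address_ids)
    random.Random(seed).shuffle(ids)
    # After shuffling, dealing addresses round-robin across cells keeps the design balanced.
    return {a: dict(cells[i % len(cells)]) for i, a in enumerate(ids)}
```

Dealing shuffled addresses round-robin, rather than drawing a cell independently for each address, guarantees near-equal cell sizes, which matters when return rates are low and every cell needs enough cases.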

Consider intensive cognitive and perceptual testing of materials and the mail receipt/decision process: Usability-style tests and cognitive interviews about respondents’ reactions to the envelope design are invaluable, since we cannot observe respondents opening their mail in the field. Behavioral debriefings are also possible (e.g., “Why did you choose to open the envelope with the TCE logo?”; see Jans and Cosenza 2002) but suffer from the general limitations of retrospective reporting (Willis 2005). Eye-tracking studies also hold promise for understanding how people perceive envelope design elements (Romano Bergstrom and Schall 2014).

Experiment with other uses of color, markings, and images that could visually draw the eye to the mailing but not suggest marketing or fundraising: For example, a plain mailing with a brightly colored mark on it could draw the eye to the envelope without creating the feel of marketing or implying anything about the sponsor. Local images seem to positively affect response (Smyth et al. 2010). Size, shape, weight, material, and other perceptual dimensions of the mailing’s “look and feel” should also be further tested, following recommendations of Dillman et al. (2014).

Acknowledgements

The authors thank The California Endowment for support of this study and continued support of the California Health Interview Survey. At Westat, Matt Regan and Howard King assisted with the data collection, and Ismael Flores-Cervantes conducted the sampling.

Appendix
