Introduction

The Women’s Empowerment in Agriculture Index (WEAI) measures the empowerment, agency, and inclusion of women in the agriculture sector, where women contribute more than 50 percent of the world’s total food production and, in sub-Saharan Africa, as much as 80 percent (FAO 1996). It is used for performance monitoring and impact evaluations of USAID’s USD 3.5 billion Feed the Future (FTF) Initiative, as well as for related initiatives such as USAID’s Food for Peace program.

This multidimensional indicator, which measures the roles and extent of women’s engagement in the agriculture sector across five domains, is an innovation in the field. As such, lessons are still being learned about collecting the data needed to construct the indicator.

The objective of our study is to evaluate the cognitive validity of the WEAI questions using cognitive interview data collected during the fielding of the 2012 Haiti Multi-Sectoral Baseline Survey, in order to identify areas of particular concern regarding the ability of these questions to elicit valid responses from survey participants.

Data and Methodology

Data

Data were collected during the 2012 Haiti Multi-Sectoral Baseline Survey, which was designed to gather baseline data against which programmatic impacts could be assessed across four development sectors: infrastructure, food and economic security, health and basic services, and governance and rule of law. Fieldwork was implemented from October to December 2012 in three geographic corridors: St. Marc Corridor, Cul-de-Sac Corridor, and Northern Corridor. A subsample of the households selected for interview was administered questions on agriculture and women’s empowerment. This subsample covered 62 rural enumeration areas and had a final sample size of 1,382 households, including 1,017 joint female- and male-headed households (“dual households”) and 365 female-only households (“single households”).

To implement cognitive testing of the WEAI questions, a subsample of 12 households from the main survey, in rural areas of the Nord, Ouest, and Artibonite departments, was selected for cognitive interviews held between November 30 and December 2, 2012. Households were stratified by household type (dual vs. single) and the woman’s age (18–35 vs. 36 and older). A total of 10 field supervisors were selected and trained for 2 days on the WEAI cognitive testing exercise. The final sample comprised a single round of 20 individual interviews with 12 women and eight men (Table 1).

Methodology

Instrument Design

Cognitive testing is defined as the administration of draft survey questions while collecting additional verbal or observational information about the survey responses; this information is used to evaluate the quality of the response obtained or to help determine whether the question is generating the intended information (Willis 2005). A cognitive interview can follow different methodologies: think-aloud vs. probing, standardized vs. active, and concurrent vs. retrospective (Beatty and Willis 2007). For the WEAI cognitive interview, the authors identified items likely to present a cognitive risk and predesigned specific probes for each. To limit interviewer error, these probes were standardized and tailored to the specific cognitive risk of each item. This type of probing does not require expert knowledge of the WEAI and can be administered by regular professional interviewers. Probing was done after each section of the multisection WEAI questionnaire. Interviewers recorded their observations of respondents’ verbal and nonverbal behavior in response to each question, as well as any difficulties respondents had with specific questions.

Analytical Approach

Analysis followed a three-step process. The first step involved an analysis of closed-ended cognitive testing questions posed to all respondents who answered the corresponding WEAI question. Only questions for which three or more respondents (15 percent of the sample) reported difficulties were explored further; 12 questions met this threshold. In the second step, follow-up open-ended cognitive testing questions were analyzed for the key problem areas identified in the first step. These open-ended questions were posed only to respondents who reported cognitive difficulties in the closed-ended questions. These two steps narrowed the list of problem areas and focused subsequent probing. In the third step, answers for all items, including paraphrase and comprehension probes, were reviewed to identify any problems the filtered probes may have missed. Both observation-based and probe-based indicators of cognitive difficulties were analyzed at each step.
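The filtering logic of the first two analytic steps can be sketched in Python. This is a minimal illustration under stated assumptions, not the study’s actual analysis code: the respondent IDs, question IDs, and difficulty flags are hypothetical, and only the threshold of three respondents (15 percent of 20) comes from the text above.

```python
# Minimal sketch of analysis steps 1 and 2 described above.
# Respondent IDs, question IDs, and flags are HYPOTHETICAL; only the
# threshold (3 of 20 respondents = 15 percent) comes from the text.
from __future__ import annotations

from collections import Counter

SAMPLE_SIZE = 20
MIN_FLAGS = 3  # a question needs at least this many respondents reporting difficulty


def difficulty_counts(flags_by_respondent: dict[str, set[str]]) -> Counter:
    """Count, per question, how many respondents reported difficulty on the closed-ended probe."""
    return Counter(q for questions in flags_by_respondent.values() for q in questions)


def flag_questions(flags_by_respondent: dict[str, set[str]], min_flags: int = MIN_FLAGS) -> list[str]:
    """Step 1: keep only questions that reach the threshold, for further exploration."""
    counts = difficulty_counts(flags_by_respondent)
    return sorted(q for q, n in counts.items() if n >= min_flags)


def followup_respondents(flags_by_respondent: dict[str, set[str]], question: str) -> list[str]:
    """Step 2: open-ended probes go only to respondents who reported difficulty with the question."""
    return sorted(r for r, questions in flags_by_respondent.items() if question in questions)


if __name__ == "__main__":
    # Hypothetical closed-ended probe results for five respondents.
    flags = {
        "R01": {"Q_A", "Q_C"},
        "R02": {"Q_A"},
        "R03": {"Q_A", "Q_B", "Q_C"},
        "R04": {"Q_C"},
        "R05": set(),
    }
    print(100 * MIN_FLAGS / SAMPLE_SIZE)       # 15.0 (percent of the sample)
    print(flag_questions(flags))               # ['Q_A', 'Q_C']
    print(followup_respondents(flags, "Q_A"))  # ['R01', 'R02', 'R03']
```

Keeping the flags per respondent, rather than only per-question totals, lets one structure drive both the step-1 threshold and the step-2 selection of who receives open-ended probes.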

Empirical Results

While our respondents seemed to understand most of the WEAI questionnaire as intended, several aspects of the questionnaire emerged as problematic. The results presented here highlight the more significant problems observed, organized according to the four cognitive stages involved in responding to survey questions: comprehension, retrieval, judgment, and response. We also report on the similarities and differences found between observation- and probe-based indicators of cognitive difficulty.

Comprehension

We found that unfamiliar terms and concepts, technical jargon, and culturally inappropriate terms in the questionnaire contributed to comprehension difficulties.

Use of Unfamiliar Terms: “In-kind”

Respondents were asked whether they had engaged in a number of production and income-generation activities over the past 12 months, including in-kind and monetary work. Four respondents reported that the question was difficult to comprehend. In particular, the term “in-kind work” was not understood: only three of 20 respondents could explain its meaning (e.g., “work in exchange for things” and “work for food”).

Similar results were observed regarding questions on access to credit, specifically in-kind borrowing. Only two respondents provided an accurate explanation of the term “borrow in-kind,” e.g.:

That means to borrow something from someone. You are given that thing, and then you must return it on a given date.

(Man, age 68, dual-headed household)

Use of Technical Jargon

Respondents were asked questions about their access to productive capital, including “non-mechanized farm equipment,” “mechanized farm equipment,” and “non-farm business equipment” – all terms that can be considered technical jargon. Cognitive probes were introduced to assess respondent understanding of these terms.

Most respondents correctly identified examples of non-mechanized farm equipment; however, the examples mentioned were restricted to three field-based hand tools: hoes, machetes, and pick-axes.

There is the machete, hoe and pick-axe. These are the utensils we use to cultivate the land.

(Man, age 68, dual-headed household)

These responses reflect a narrower understanding of the term “non-mechanized farm equipment” than intended. For example, respondents could also have reported post-harvest non-mechanized farm equipment, such as grain-processing equipment or containers.

Eleven of 20 respondents could not correctly define “mechanized farm equipment,” while the remainder referred to field-specific examples such as tractors and motor cultivators, again indicating that respondents held a more field-focused interpretation of this class of equipment than intended.

Only five of 20 respondents provided accurate examples of “non-farm business equipment”:

Hardware such as a sewing machine (Woman, age 36, dual-headed household)

Bowls, bags, seals (Woman, age 25, single-headed household)

Reference to Unfamiliar Concepts

Decisionmaking authority over various aspects of one’s life and livelihood is a key dimension of empowerment. Accordingly, several questions in the instrument assess the relative contribution respondents make to household decisions, including decisions about household finances; for example, “Who contributes most to decisions regarding a new purchase of [ITEM]?”

Five of 20 respondents reported that they did not understand this question at all, while nine could not put the question in their own words. Seven provided an accurate paraphrase, such as:

Whether it’s you who decides to buy things at home.

(Woman, age 33, dual-headed household)

Respondents demonstrated similar difficulties in comprehending other questions on decisionmaking such as “How much input did you have in making decisions about [ACTIVITY]?” and “How much input did you have in decisions on the use of income generated from [ACTIVITY]?”

When probed about why these questions were difficult, most respondents indicated that they did not understand the question, particularly the concept of having relative input into decisionmaking.

Use of Culturally Inappropriate Expressions

Questions were asked to assess whether an individual’s actions are relatively more motivated by his or her own values than by coercion or fear of others’ disapproval (Alkire et al. 2012), for example,

“Regarding [ASPECT], I do what I do so others don’t think poorly of me.”

Most respondents felt that people might have difficulty answering these questions; some pointed specifically to the phrase “think poorly” (“panse mal”) as problematic:

Because as soon as you say “panse mal,” they will think you are referring to wickedness

(Man, age 49, dual-headed household)

The term “think poorly of someone” was explored further using comprehension probes. The responses highlight the variety of connotations the term has in Haitian Creole, ranging from the intended meaning of “think poorly” to more aggressive connotations such as “wishing bad things to other people” and “destroying someone,” which may be related to superstitious beliefs.

Retrieval

Ambiguous Reference Periods

Respondents were asked about their participation in a number of productive activities over the past year using questions phrased as follows:

“Did you participate in [ACTIVITY] in the past 12 months (that is during the last [one/two] cropping seasons)?”

The interviewer is expected to adapt the question to the agricultural cycle in each survey area so that it covers a full year. However, this formulation confused respondents. When asked what time period they had in mind when answering these items, only five respondents correctly identified a full 12-month cycle. The remaining respondents either identified a single season (defined variously across respondents) or could not define their reference period.

Recall for 24-hour Time Use Measure

Respondents were asked to recall all activities performed in the past 24 hours, in 15-minute increments. To assess the reliability of their recall, respondents were asked how they knew what time of day it was when they were doing specific activities. Ten respondents said they check the time on a cell phone they carry with them; however, nine respondents indicated that they rely on neighbors or passers-by to know the time. Further probes indicated that respondents often rely on a variety of methods to estimate the time that they wake in the morning and go to sleep at night:

Usually, I observe the dawn of day. When I see the morning stars, I know it is 4:00 AM

(Man, age 40, dual-headed household)

I prepare to go to bed at the same time as the chicken

(Woman, age 39, dual-headed household)

In some cases, respondents directly acknowledged that they do not really know the time.
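Because the 24-hour recall is recorded in 15-minute increments, each day corresponds to a grid of 96 slots. The Python sketch below shows one hypothetical way to map reported clock times onto that grid; the function names, the round-down rule, and the example day are illustrative assumptions, not the WEAI instrument’s own coding scheme. It also makes the recall problem concrete: a wake-up time estimated from the morning stars as “4:00 AM” is assigned a precise slot regardless of how uncertain the estimate is.

```python
# Hypothetical sketch: a 24-hour time-use log stored as 15-minute slots.
# Names and the round-down rule are illustrative assumptions, not the
# WEAI questionnaire's own coding scheme.
from __future__ import annotations

SLOT_MINUTES = 15
SLOTS_PER_DAY = 24 * 60 // SLOT_MINUTES  # 96 slots in one day


def to_slot(hhmm: str) -> int:
    """Map an 'HH:MM' clock time to the 15-minute slot containing it (rounds down)."""
    hours, minutes = (int(part) for part in hhmm.split(":"))
    if not (0 <= hours < 24 and 0 <= minutes < 60):
        raise ValueError(f"invalid clock time: {hhmm!r}")
    return (hours * 60 + minutes) // SLOT_MINUTES


def build_log(episodes: list[tuple[str, str, str]]) -> list[str | None]:
    """Fill a 96-slot day from (start, end, activity) episodes.

    The end time is exclusive, and an episode may wrap past midnight
    (e.g. sleep from 20:00 to 04:00). An episode whose start and end fall
    in the same slot fills nothing. Slots left as None were not reported.
    """
    log: list[str | None] = [None] * SLOTS_PER_DAY
    for start, end, activity in episodes:
        slot, stop = to_slot(start), to_slot(end)
        while slot != stop:
            log[slot] = activity
            slot = (slot + 1) % SLOTS_PER_DAY
    return log


def unreported_slots(log: list[str | None]) -> list[int]:
    """Return indices of slots with no recorded activity."""
    return [i for i, activity in enumerate(log) if activity is None]


if __name__ == "__main__":
    # Hypothetical respondent day, not data from the study.
    day = [
        ("04:00", "12:00", "field work"),
        ("12:00", "20:00", "domestic work"),
        ("20:00", "04:00", "sleep"),
    ]
    log = build_log(day)
    print(log.count("sleep"))     # 32 slots = 8 hours
    print(unreported_slots(log))  # []
```

Listing unreported slots is one simple way to surface gaps in a respondent’s recall for follow-up during the interview.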

Judgment

Sensitivity to Lack of Economic Opportunity

Two respondents indicated that the questions about participation in productive activities were difficult because they draw attention to income-generation activities that respondents may consider to be private, particularly in poverty-stricken areas with a scarcity of opportunities.

Sensitivity to Political Consequences of Public Speaking

Respondents were asked about their comfort in speaking publicly about issues such as the building of community infrastructure, ensuring proper payment of wages for public works programs, and protesting misbehavior of authorities or elected officials.

Eight of 20 respondents reported that they thought people might find these questions difficult to answer. While most responses only hinted that these difficulties were related to political sensitivities, some identified them more explicitly:

They may think that if they respond, they will be betraying other people.

(Man, age 49, dual-headed household)

Response

Respondents had difficulty when asked to respond according to a prespecified scale. For example, respondents were asked to report their satisfaction with the time available for leisure activities on a scale of 1 to 10. Observation-based reports indicate that at least four respondents had difficulty providing a score within this scale.

Observation- versus Probe-based Indicators of Cognitive Difficulty

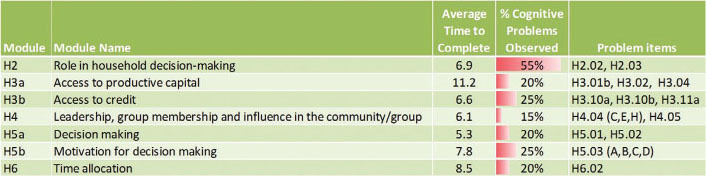

The observation-based indicators of cognitive difficulties indicate that most respondents had trouble with questions on roles in household decisionmaking, particularly the concept of input into decisionmaking (Module H2; see Figure 1). This may reflect question-specific difficulties, or it could be related to the placement of the module at the beginning of the interview, when respondents are still becoming accustomed to the interview format.

The probe-based indicators of cognitive difficulties corroborated some of the observation-based indicators (see Figures 2 and 3). They also identified new problem areas: questions on household asset ownership (H3.01a), decisionmaking over household purchases (H3.06), acting so that others do not think poorly of the respondent (H5.04), and time use (H6.01). Half or more of respondents flagged questions on household decisionmaking and motivations for decisionmaking (Modules H5a and H5b) as likely to be difficult for others to answer (see Figure 3).

Even when respondents were asked directly about difficulties in answering the survey questions, some cognitive difficulties could be identified only through observation.

Summary and Conclusions

A total of 20 cognitive difficulties were identified in this study (see Table 2).

Comprehension: The majority of cognitive difficulties occurred at the stage of comprehension. Aspects of comprehension ranged from not understanding terms used in questions to not understanding the concepts underpinning certain questions. In most cases, rewording the questions using more accessible or accurate language would result in improved comprehension.

Retrieval: Recall was challenging for the question asking respondents to report the agricultural activities they had engaged in over the past 12 months. Retrieval difficulties were also observed in the accurate recall of times for the daily time use log. Another retrieval problem stems from respondents’ tendency to understand farm activities as limited primarily to what occurs in the field, without recognizing that these could also include activities further along the value chain, such as food preparation and storage. As a result, this survey instrument may undercount respondent engagement in agricultural activities.

Judgment: Respondents indicated that two types of questions might be underreported or misreported because of their sensitivity: those on income-generating activities and those on speaking out in public for workers’ rights and against misbehavior by authorities or elected officials.

Response: Respondents showed clear difficulty with the use of scales to quantify their response to questions on levels of input into decisionmaking and levels of satisfaction with available leisure time.

Recommendations

The WEAI questions were generally well understood. However, our analysis of the cognitive testing data identified areas of particular concern regarding the ability of these questions to elicit valid responses from survey participants.

Based on this analysis, we recommend the following issues be reviewed in advance of future implementation of the WEAI questionnaire:

Use of jargon and overly formal language in questions: Language used in questionnaire development should be accessible both in the original formulation of the questions and in translation. Technical terms should be avoided.

Standardization of questions: The WEAI is intended to generate cross-nationally comparable data, so unnecessary differences in questions should be minimized. However, the recall period for questions on farm-related activities has been formulated differently in different publicly available drafts of the WEAI questionnaire, reducing the cross-national comparability of the indicator.

Provision of country-specific examples: In response to questions on agricultural production, most respondents reported only the types of agricultural equipment used to work a plot of land. To ensure that respondents understand that “agricultural activities” include activities beyond working the soil, selected questions should include examples of the kinds of equipment that may be used along entire agricultural value chains. Failure to provide a more comprehensive range of equipment types may result in gender-biased results.

Regular incorporation of cognitive testing: Cognitive testing, including structured observation of the respondent throughout the interview, is important for ensuring a valid and locally appropriate translation for this instrument.
