Introduction

Audio computer-assisted self-interviewing (ACASI) is widely used to collect sensitive information from survey respondents in face-to-face interview settings (Couper, Tourangeau, and Marvin 2009; Nicholls, Baker, and Martin 1997). Examples of large national surveys currently employing ACASI include the National Survey of Family Growth (sponsored by the National Center for Health Statistics [NCHS]), the National Survey on Drug Use and Health (sponsored by the Substance Abuse and Mental Health Services Administration), the National Health and Nutrition Examination Survey (sponsored by NCHS), the National Longitudinal Study of Adolescent Health (sponsored by the National Institute of Child Health and Human Development), the National Survey of Child and Adolescent Well-being (sponsored by the Administration for Children and Families), the National Survey of Youth in Custody (sponsored by the Bureau of Justice Statistics [BJS]), the National Former Prisoner Survey (sponsored by BJS), and the National Inmate Survey (sponsored by BJS). Several other national surveys that have not traditionally used ACASI are considering adopting it; examples include the National Crime Victimization Survey (sponsored by BJS) and the National Health Interview Survey (sponsored by NCHS).

ACASI is designed to protect respondent privacy and to improve the accuracy of sensitive information; respondents listen to questions read aloud by either a recorded human voice or a computer-generated voice produced with text-to-speech technology (Couper et al. 2014). Despite the prevailing assumption that this mode provides privacy and increases reporting of sensitive attitudes and behaviors, the benefits of its audio component relative to computer-assisted self-interviewing (CASI) are not entirely clear (Couper, Tourangeau, and Marvin 2009), apart from mitigating concerns about literacy in selected populations. In addition, human interviewers need to introduce the ACASI portion of the interview, instruct the respondent in how to use the technology, and remain present during the ACASI "session" in case the respondent has questions or encounters other difficulties with the technology.

The presence of the interviewer and the interviewer's ability to provide various forms of assistance therefore introduce the possibility of interviewer effects on responses collected during the ACASI portions of interviews. A number of survey methodology studies have found surprising evidence of significant interviewer variance in responses collected in self-administered modes when an interviewer was still present (Hughes et al. 2002; O’Muircheartaigh and Wiggins 1981; O’Muircheartaigh and Campanelli 1998; Research Triangle Institute International 2012). Differences among interviewers in their behaviors during ACASI, such as how much assistance they provide with ACASI questions, where they are located during ACASI, how well they establish privacy during ACASI, and how much rapport they build during the broader face-to-face interview, could therefore be mechanisms that at least partially explain these unexpected findings. Unfortunately, no studies to date have examined these possibilities.

Empirical evidence supporting hypotheses about the effects of these potential behaviors on data quality does exist. Interestingly, high rapport between interviewers and respondents has been associated with more socially desirable reporting. For example, Hill and Hall (1963) and Weiss (1968) found that higher levels of rapport lead to less accurate survey data, while Mensch and Kandel (1988) reported lower drug use estimates when respondents were familiar with the interviewer. Couper and Rowe (1996) reported that 14 percent of CASI interviews in one study were actually completed by interviewers, and that in 7 percent the questions were read by interviewers while respondents keyed their responses. In a meta-analysis of 29 studies comparing computerized self-administration with other formats, Weisband and Kiesler (1996) reported that self-disclosure increased when subjects were alone during self-administration. Similarly, Kroh (2005) reported that the absence of an interviewer from the room yielded higher body weight reports among males but had no effect on reports by females. Recent research by Mneimneh (2012) suggests that interviewers vary in their ability to establish privacy when confronted with different household environments, and even more recent work by Mneimneh et al. (2014) reports that 48 percent of respondents asked for help during ACASI. In light of these prior investigations, there is a need for research examining potential effects of interviewers on data quality when ACASI is used, especially given the large number of surveys currently employing it.

The objective of this study is to fill the gap in the ACASI literature with regard to potential effects of interviewer behaviors on the quality of data collected when using this mode. Analyzing data from the seventh cycle of the National Survey of Family Growth (NSFG), we address two specific questions:

- Do interviewers vary in terms of their behaviors during ACASI?

- Are different interviewer behaviors associated with different response distributions for sensitive survey measures collected using ACASI?

Methods

We first analyzed post-survey observations recorded by NSFG interviewers after the completion of main interviews in Cycle 7 (2006–2010) of the NSFG (see Lepkowski et al. 2013 for details on the NSFG design, including response rate information). A total of 22,682 interviews were completed by 108 trained female interviewers in this cycle of the NSFG, and each interview included an ACASI component featuring several sensitive questions on topics such as drug use and risky sexual behavior. We focused on three post-survey observations: 1) seating arrangement during the ACASI portion of the interview (1 = next to respondent, 0 = seated elsewhere); 2) the interviewer’s ability to see the screen during the ACASI portion of the interview (1 = yes, 0 = no); and 3) provision of assistance to the respondent during the ACASI component (1 = yes, 0 = no). We examined variance among NSFG interviewers in the proportion of their interviews with a “1” on each observation, because such variance would provide empirical evidence of a mechanism by which interviewers may (unexpectedly) affect the responses provided in the ACASI component of the interview. We combined visual exploration of this variance with multilevel random-intercept logistic regression models, which allowed us to estimate and test the interviewer variance component for each of these proportions.
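For readers less familiar with these models, the random-intercept logistic model for one binary observation can be written as follows. The notation is ours and is only a sketch of the standard specification: y_ij = 1 if interviewer j recorded a "1" for interview i, u_j is interviewer j's random effect, and the test of interest concerns its variance. The last line is the usual intraclass correlation on the latent logistic scale, a convenient summary of how much of the variation in an observation lies between interviewers.

```latex
\begin{aligned}
\operatorname{logit}\Pr(y_{ij} = 1 \mid u_j) &= \beta_0 + u_j, \qquad u_j \sim N(0, \sigma_u^2), \\
H_0:\ \sigma_u^2 = 0 \quad &\text{versus} \quad H_1:\ \sigma_u^2 > 0, \\
\rho &= \frac{\sigma_u^2}{\sigma_u^2 + \pi^2 / 3}.
\end{aligned}
```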

We next compared the responses provided by NSFG respondents to selected sensitive survey items across groups of interviews defined by the values of the post-survey observations. These sensitive items (all binary indicators) were having two or more sexual partners in the past year (among those who had ever had sex), receiving treatment for a sexually transmitted disease in the past year, using marijuana on two or more occasions in the past year, binge drinking (5+ drinks in a single sitting) on two or more occasions in the past year, and voluntary first sexual intercourse (among female respondents). We first used Rao-Scott chi-square tests to examine the bivariate associations between the interviewer reports and the survey responses. We then fitted a separate multiple logistic regression model for each binary survey report, regressing it on the three indicators of interviewer behavior during ACASI while controlling for respondent age, gender, race/ethnicity, and education. These analyses used the SURVEYFREQ and SURVEYLOGISTIC procedures in the SAS software (Version 9.3; SAS Institute Inc., Cary, NC) to account for the complex sampling features of the NSFG (population weights, sampling stratum codes, and sampling cluster codes), enabling design-based inferences about these relationships in the NSFG target population (noninstitutionalized U.S. persons between the ages of 15 and 44).
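To show what "accounting for complex sampling features" involves, the sketch below estimates a weighted prevalence and its Taylor-series (linearization) standard error, the default variance method in the SAS survey procedures. It is a minimal illustration, not SAS's implementation: it assumes primary sampling units (PSUs) were sampled with replacement within strata, ignores finite population corrections, and the function and argument names are hypothetical.

```python
from collections import defaultdict
from math import sqrt


def weighted_prevalence(y, w, stratum, psu):
    """Weighted proportion of y == 1 with a Taylor-linearized standard error.

    Assumes a stratified cluster design with PSUs treated as sampled with
    replacement within strata; every stratum needs at least two PSUs.
    """
    total_w = sum(w)
    p = sum(wi * yi for wi, yi in zip(w, y)) / total_w
    # Linearized score for the ratio estimator p = sum(w * y) / sum(w).
    z = [wi * (yi - p) / total_w for wi, yi in zip(w, y)]
    # Sum the scores within each PSU, grouped by stratum.
    psu_totals = defaultdict(lambda: defaultdict(float))
    for zi, h, c in zip(z, stratum, psu):
        psu_totals[h][c] += zi
    var = 0.0
    for h, clusters in psu_totals.items():
        totals = list(clusters.values())
        n_h = len(totals)
        if n_h < 2:
            raise ValueError(f"stratum {h!r} has fewer than two PSUs")
        mean_h = sum(totals) / n_h
        # Between-PSU variability of the score totals within stratum h.
        var += n_h / (n_h - 1) * sum((t - mean_h) ** 2 for t in totals)
    return p, sqrt(var)
```

To estimate a prevalence within one group of interviews (for example, those where the interviewer reported seeing the screen), one would pass weights set to zero outside that group rather than dropping records, so that every PSU still contributes to the variance; this is the standard domain-estimation approach.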

Results

Research Question 1: Interviewer Behavior Variance in ACASI

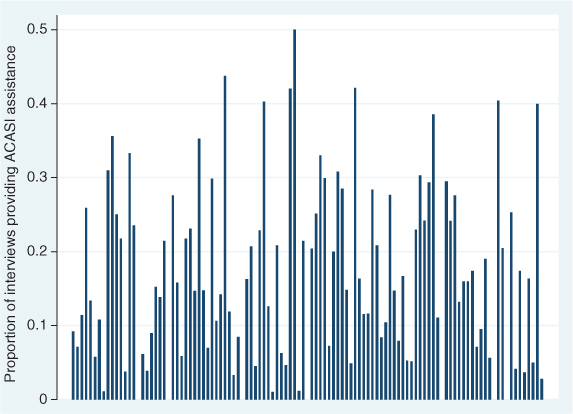

Figure 1 illustrates substantial variance among the NSFG interviewers in the provision of assistance during the ACASI components of their interviews: the share of an interviewer's interviews in which assistance was provided ranged from less than 5 percent to 50 percent, introducing the possibility of interviewer effects on the ACASI measures. After we fitted a multilevel logistic regression model with random interviewer effects to this binary interviewer report, a likelihood ratio test of the null hypothesis that the variance of the random interviewer effects was zero showed that the interviewer variance component was significantly greater than zero (p<0.001), as Figure 1 would lead one to expect.
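Because a variance of zero lies on the boundary of its parameter space, the likelihood ratio statistic for this test does not follow the usual chi-square distribution with one degree of freedom under the null hypothesis. A commonly used reference distribution is an equal mixture of a point mass at zero and a chi-square with one degree of freedom, which halves the naive p-value. The text does not state which reference distribution was used, so the sketch below is only an illustration of that mixture; the function name and the log-likelihood values in the usage line are hypothetical.

```python
from math import erfc, sqrt


def boundary_lrt_pvalue(ll_null: float, ll_alt: float) -> float:
    """p-value for H0: sigma_u^2 = 0 using a 50:50 mixture of chi2(0) and chi2(1).

    ll_null: maximized log-likelihood of the single-level logistic model
    ll_alt:  maximized log-likelihood of the random-intercept logistic model
    """
    lr = 2.0 * (ll_alt - ll_null)  # likelihood ratio statistic
    if lr <= 0.0:
        # The chi2(0) point mass at zero makes P(statistic >= 0) equal to 1.
        return 1.0
    # Survival function of chi2(1): P(X > lr) = erfc(sqrt(lr / 2)).
    return 0.5 * erfc(sqrt(lr / 2.0))


# Hypothetical log-likelihoods, for illustration only.
p_value = boundary_lrt_pvalue(ll_null=-5210.4, ll_alt=-5185.9)
```

Using the naive chi-square with one degree of freedom would give a p-value twice as large, so that test is conservative; with evidence as strong as p<0.001, the choice would not change the conclusion.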

Figure 2 presents the results of a similar analysis for the proportion of interviews in which the interviewer sat next to the respondent during the ACASI portion of the interview. Figure 2 shows substantial variability in how the interviewers positioned themselves during the ACASI components, again introducing the possibility of interviewer effects on the ACASI measures (potentially through differences in how much privacy respondents perceive). In a multilevel logistic regression model for this observation, a likelihood ratio test once again showed that the interviewer variance component was significantly greater than zero (p<0.001), as expected based on Figure 2.

We found very similar results for the indicator of the interviewer being able to see the respondent’s screen. Taken together, these initial analyses provide fairly convincing support for possible mechanisms of interviewer effects in the ACASI mode of data collection, at least in the NSFG.

Research Question 2: Differences in Reports of Sensitive Behaviors Based on Interviewer Behaviors during ACASI

Table 1 presents estimated prevalence rates for risky sexual and drug use behaviors as a function of the interviewer reports of behaviors during ACASI. Table 1 suggests that different interviewer behaviors during ACASI do in fact affect response distributions on some sensitive items. Specifically, when interviewers are able to see the screen during ACASI, the prevalence of frequent marijuana use is significantly lower and, interestingly, so is the prevalence of consensual first intercourse among females. Similar results are seen when the interviewer provides assistance during ACASI, in which case the reported prevalence of frequent binge drinking is also significantly lower. Finally, sitting next to the respondent (without necessarily being able to see the computer screen) does not appear to have a significant impact on response distributions.

The lower prevalence of consensual first sexual intercourse among females when the interviewer can see the screen (85.56 percent) or provides assistance (89.95 percent) is contrary to expectations. Reports of very sensitive experiences are typically lower when an interviewer is present, so we would have expected fewer reports of nonconsensual first intercourse, and therefore a higher prevalence of consensual first intercourse, in these interviews. One testable post-hoc hypothesis is that respondents may select nonconsensual first intercourse in ACASI in order to be consistent with socially undesirable behaviors reported earlier, during the interviewer-administered portion of the survey (e.g., a large number of pregnancies given the respondent’s age, or lack of contraceptive use). Alternatively, given the survey topic, respondents may feel obliged to report more of the behaviors of interest when the interviewer can see their responses, or they may be seeking the interviewer’s attention and sympathy. Future replications of this study need to assess whether similar unexpected findings occur.

Table 2 presents estimates from five logistic regression models, predicting the sensitive survey reports as a function of the binary interviewer reports of ACASI behavior and respondent socio-demographics.

The results in Table 2 show that even after adjusting for important socio-demographic factors, selected interviewer behaviors during ACASI still have a significant impact on reports for three of the five respondent behaviors we examined. Specifically, holding the four socio-demographic factors fixed, provision of assistance during ACASI reduces the probability of reporting both frequent marijuana use and frequent binge drinking in the past year, and the interviewer’s report of being able to see the computer screen reduces the probability that females report their first sexual intercourse as consensual. These results therefore suggest that interviewers who more frequently provide assistance and are more often able to see the screen may ultimately affect the types of responses collected during ACASI, independent of the demographic features of respondents (including education).
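Logistic regression coefficients such as those in Table 2 are on the log-odds scale. The sketch below shows two standard ways to read such a coefficient: as an odds ratio with a Wald confidence interval, and as the shift in predicted probability it implies for a respondent whose covariate profile gives a baseline probability p0. The estimates in Table 2 are not reproduced here, so any inputs are hypothetical, and the helper names are ours. The default critical value is the normal 1.96; a design-based analysis may instead use a t critical value with design-based degrees of freedom.

```python
from math import exp, log


def odds_ratio_ci(beta: float, se: float, crit: float = 1.959963984540054):
    """Odds ratio and Wald confidence interval from a logit coefficient."""
    return exp(beta), exp(beta - crit * se), exp(beta + crit * se)


def shifted_probability(p0: float, beta: float) -> float:
    """Predicted probability after adding beta to the log-odds of baseline p0.

    This is what 'holding the other covariates fixed' means on the probability
    scale: the covariates determine p0, and the behavior indicator adds beta
    to the linear predictor.
    """
    eta = log(p0 / (1.0 - p0)) + beta
    return 1.0 / (1.0 + exp(-eta))
```

Because the logistic link is nonlinear, the same coefficient implies a larger change in probability for respondents whose baseline probability is near 0.5 than for those near 0 or 1, which is why effects are often reported as odds ratios.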

Discussion

This study provides an initial examination of the effects that selected interviewer behaviors during the ACASI portion of face-to-face survey interviews may have on responses to sensitive survey items. Based on analyses of data collected in the seventh cycle of the NSFG (2006–2010), we find that being close enough to see the computer screen and providing assistance during ACASI do affect the response distributions for sensitive items in the NSFG target population (noninstitutionalized U.S. individuals between the ages of 15 and 44), even after adjusting for socio-demographic factors (age, gender, race/ethnicity, and education). Based on post-survey interviewer reports, we also find substantial interviewer variance in how often interviewers engage in each of the three behaviors examined: providing assistance, sitting next to the respondent during ACASI, and being able to see the computer screen during ACASI.

This study is certainly not without limitations. First, the post-survey observations recorded by the interviewers may themselves be subject to socially desirable reporting (e.g., interviewers may underreport providing assistance), and there is no way to validate whether these behaviors actually occurred. (NSFG interviews are not video- or audio-recorded.) Future research should consider recording selected interviews in order to validate these types of interviewer observations. Second, we considered only a small subset of the sensitive measures collected in the NSFG, and other sensitive measures in surveys on different topics may be affected in different ways by such interviewer behaviors. Third, other uncontrolled environmental factors during the ACASI portions of these interviews (e.g., the presence of others in the home) may also have affected the response distributions. Fourth, the relationships of the ACASI behaviors with the reported values on the sensitive survey items may be partially confounded by respondents’ concerns about privacy; unfortunately, we did not have access to measures of respondent privacy concerns to include in our multivariate models.

The findings from this study motivate future randomized experiments in laboratory settings, where interviewers are randomly assigned to a prescribed behavior during recorded ACASI interviews. The groups of interviewers defined by these behaviors can then be compared in terms of the values collected on sensitive survey items. Future research should also consider the effects of other types of interviewer behaviors that are not captured in the NSFG data, such as establishment of rapport. It is also possible that the voice used during ACASI (e.g., a recorded or text-to-speech male voice when the human interviewer is female) may induce interviewer effects, creating a situation in which the respondent perceives the recording as a “second interviewer” in the room. This factor could also be manipulated experimentally in future studies.

In general, results from future research in this area should be used to inform interviewer training with regard to behaviors that interviewers should avoid during ACASI to maximize data quality. ACASI is an important data collection tool for generating information on sensitive topics from large populations, but the presence of an interviewer has the potential to affect the collected responses. More attention to minimizing behaviors that could affect survey responses will help to improve data quality for a variety of future data collections employing ACASI.

Acknowledgements

We would like to thank the National Center for Health Statistics for providing us with access to data and post-survey observations from the seventh cycle of the National Survey of Family Growth (Contract #200-2000-07001). We also thank our colleagues at Research Triangle Institute, including Paul Biemer and Rachel Caspar, and the University of Michigan Survey Research Center, including Mick Couper, for their comments and advice.