Offering multiple modes of return for surveys (toll-free call-in, website, or mail-in) is thought to be beneficial to both respondents and researchers. The goals for multiple modes of return in our study are to (1) improve the overall response to the survey and (2) increase the portion of responses that are web-based. This paper examines the impact of offering the modes of return simultaneously (modes offered at the same time) versus sequentially (modes offered one after another) for a demographic questionnaire that is sent via mail to households in order to recruit them for Nielsen’s diary-based TV Audience Measurement Service. We implemented the two approaches by changing the order in which the different mailings and mode choices were sent to respondents. The results are from two separate survey tests, conducted in November 2007 and May 2008. The results indicate that improving overall response while driving respondents to the web (rather than having them return by mail) has its challenges. We discuss our results and identify further areas of research in multimode survey design.

Background

Between phone calls, websites, and mail, as well as newer modes such as text messaging and smartphone applications, there is an abundance of options by which researchers can have respondents return a survey. Further, there is the opportunity to recruit a respondent using one mode and have the survey returned using a completely different mode. These options leave considerable room for exploration in determining which mode, or combination of modes, provides the greatest return.

For the two studies conducted here, we used an address-based sampling (ABS) approach to identify eligible homes. With ABS, addresses are selected from a sample frame consisting of nearly all residential addresses in the United States (the frame is an enhanced version of the USPS Delivery Sequence File). Sampled addresses are then “matched” to telephone numbers via directory listings or commercial databases. For approximately 40% of the sampled addresses, we are unable to match a phone number to the address; we refer to these addresses as the “unmatched” sample. This is important because the unmatched sample includes cell-phone-only homes as well as a high proportion of younger and racial-ethnic homes. To improve our ability to recruit these homes, a one-page pre-recruitment survey is mailed to all unmatched homes in the hope of obtaining a telephone contact number and the key demographic information needed to drive incentive treatments and the number of diaries sent to the home. Respondents can return the survey by (1) calling our study-specific toll-free number, (2) logging on to the study website, or (3) mailing it back to us. This pre-recruitment approach consists of three separate mailings: a postcard (with no web survey information), a packet containing the mail survey, and a letter with information about the survey and unique web credentials (i.e., username and password). In the two studies conducted, we changed the order in which these three pieces were delivered to the home.

With this study we are trying to (1) improve the overall response to the pre-recruitment effort, to maximize our ability to recruit the home for the subsequent diary survey, and (2) increase the portion of respondents using the website mode of return, in the hope of reducing survey costs and gaining quicker access to returned data.

Methodology

During November 2007 and May 2008, Nielsen conducted pretests to refine the methodology for using ABS in our TV diary measurement, prior to launching production in November 2008. The difference between the tests was the order in which the three mailed pieces used in the pre-recruitment effort were sent to the homes. For the November 2007 test, the ordering of the materials was as follows: (1) an advance postcard (with no mention of how the survey could be completed); (2) a mail survey packet, which also included unique web credentials for the home as well as the option of completing the survey via the toll-free number; and (3) a reminder letter, which encouraged use of all three modes and again contained the web credentials for the home. Because the first offer to complete the survey came via the packet, with all three modes emphasized, we refer to this as the “simultaneous” approach. In contrast, for the May 2008 test, the order was: (1) an advance letter, with web credentials for respondents who wanted to complete the web-based survey right away; (2) the full mail survey packet, which also included the web credentials; and (3) a reminder postcard, which only encouraged returns and did not provide additional information. Because the web was offered first as a single return mode, followed by the packet, which allowed for all three return modes, we refer to this as the “sequential” approach.

Table 1 compares the two test designs, including when the website log-in credentials were available to respondents. For both tests we used a random national sample, with approximately 10,000 households mailed for November 2007 and 24,000 households mailed for May 2008.

Results

For our analysis, we evaluated the two approaches in terms of return modes as well as the demographic characteristics of the returning homes. Overall, the November 2007 study, which reminded households of the website in the third mailing, had both a higher overall rate of completion (32.6% versus 30.5%) and a higher rate of return for the web component (18.1% versus 14.4%).

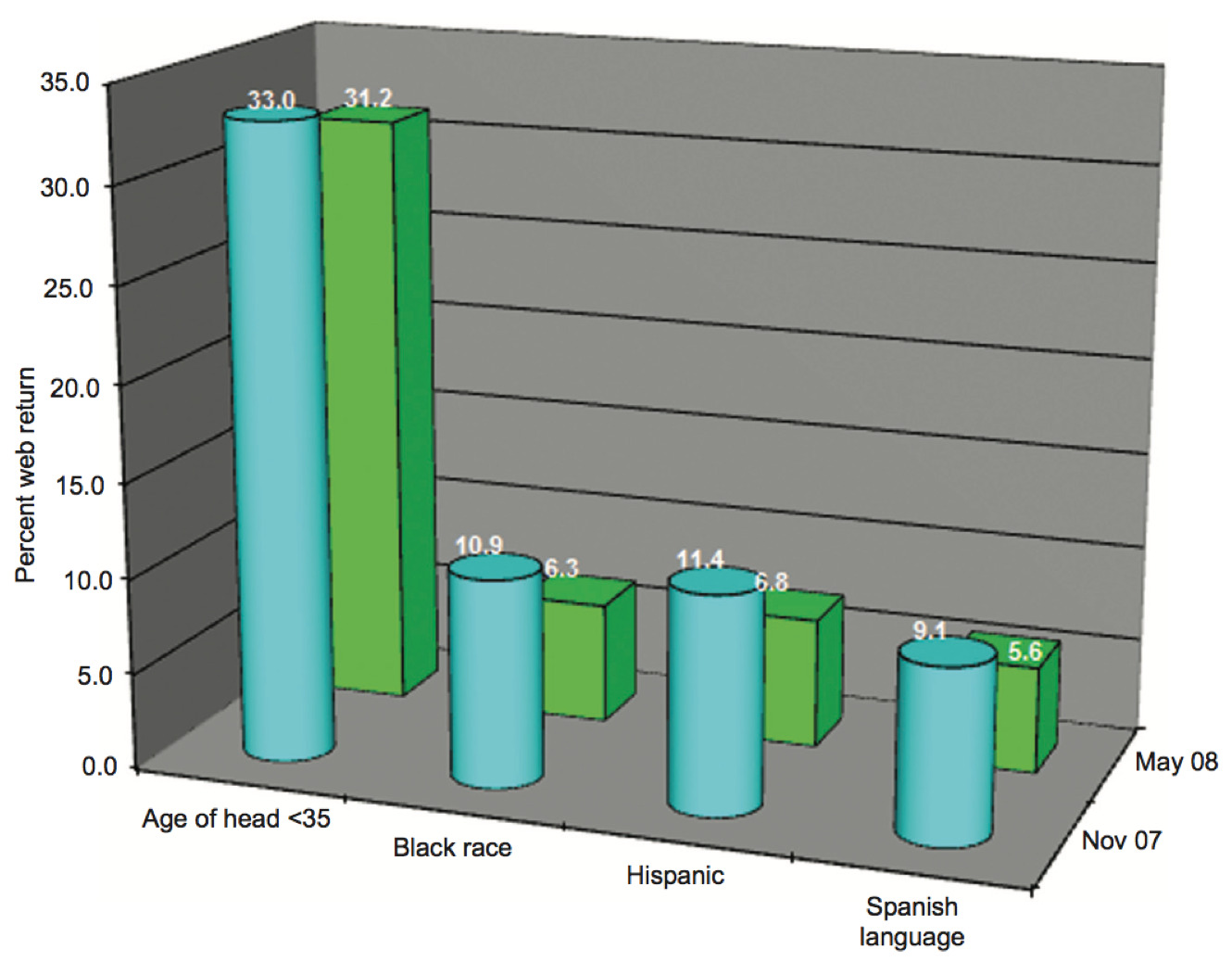

Among certain hard-to-reach demographic groups, we found similar drops in web-mode returns between November 2007 and May 2008 across age, race, Hispanic ethnicity, and Spanish language. The biggest drops were for Black race (4.6% drop) and Hispanic ethnicity (also a 4.6% drop).
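No formal significance test is reported for the overall completion rates. As a rough illustration only, the sketch below applies a standard pooled two-proportion z-test to the reported overall rates (32.6% versus 30.5%). It assumes that the approximate mailed counts (10,000 and 24,000) are the denominators of those rates and that the two tests can be treated as independent simple random samples; neither assumption is confirmed by the study description, and the function name `two_proportion_z` is hypothetical.

```python
import math


def two_proportion_z(p1, n1, p2, n2):
    """Pooled two-proportion z-test.

    Returns (z, two-sided p-value) for the null hypothesis that the
    two underlying rates are equal.
    """
    # Pooled rate under the null hypothesis of equal proportions
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    # Standard error of the difference in sample proportions
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# ASSUMPTION: approximate mailed counts are used as denominators.
z, p = two_proportion_z(0.326, 10_000, 0.305, 24_000)
print(f"z = {z:.2f}, two-sided p = {p:.5f}")
```

Because the two tests were fielded in different months, any difference flagged by such a check may reflect timing as well as the ordering of the mailings.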

Discussion

Initially, we thought that providing respondents the return mode options for the pre-recruitment survey sequentially, with the web option first, would result in higher overall returns as well as a higher proportion of web returns. The hypothesis was that when given a choice, particularly between mail and web-based return options, respondents often think they will do the survey via the web but fail to do so (and likely discard the mail survey in the interim). However, we found that the simultaneous approach, with all modes presented together, actually performed better in terms of both overall and web-based responses.

The findings, however, may have less to do with whether the options were presented sequentially or simultaneously than with the placement of the postcard and letter. With the sequential approach, the letter with the web log-in information was the first piece and the postcard with no web credentials was used as the reminder. With the simultaneous approach, these mailings were reversed: postcard first and letter last. We hypothesize that presenting the web option by letter in the first mailing was insufficient to garner much interest in the survey. The larger mail packet mailed subsequently likely spurred greater interest in or attention to our request for participation; however, respondents who discarded or misplaced this packet would have had no way of completing the survey when they received the postcard. In contrast, when the follow-up third mailing was a letter with the log-in credentials, it provided the information needed to complete the survey to those who may have thrown away the mail survey. The sequence of postcard, mail packet, and follow-up letter was, therefore, the more effective means of both producing higher response and garnering a larger proportion of web-based interviews. In other words, it was the more effective and efficient of the two approaches. Given these results, when Nielsen implemented an ABS sample design for the diary-based TV audience measurement service in November 2008, we followed the November 2007 test design for the pre-recruitment mailings.

There are, however, a number of outstanding questions related to the optimal use and presentation of multiple modes of survey return to respondents. Some of the key questions for us are the following:

- How can we continue to maximize use of the lower cost web-based survey over the more expensive mail survey?

- Despite the proliferation of Internet access, why do respondents overwhelmingly still prefer to complete and return mail surveys over web-based surveys? and

- What design elements might allow researchers to more effectively use multimode designs to encourage participation among the hardest-to-reach groups (i.e., younger and racial-ethnic sample members)?