This paper reports on the survey practice of a unique study covering all 14,085 elected representatives of the 290 municipal councils and 20 regional councils in Sweden (n = 9,890, response rate 70.2 percent)[1]. The Swedish Municipal and County Council Survey 2008–2009 is the largest of its kind in Sweden, and to our knowledge no previous survey in any other country has targeted all directly elected local and regional political representatives[2]. In total, the questionnaire includes 69 questions concerning the representatives’ attitudes on politics and democracy[3].

In this paper, we first report on our successful mixed mode design, which combines a web survey, a postal questionnaire and reminder telephone calls to maximize the response rate. We then discuss possible mode biases in the response patterns from the web survey and the postal questionnaire. The paper concludes by discussing possible improvements for future large-scale studies of political representatives.

Description of the Study

Fieldwork started in 2008, and we decided early on to use a web survey as the main mode for data collection in order to increase the number of responses and reduce the costs. To make this possible, we first had to collect the political representatives’ e-mail addresses. These addresses are not available in any database, but many of them could be found on the municipalities’ websites. When we could not acquire e-mail addresses from the websites, we contacted the national, regional and local party organizations and requested addresses.

To give the survey a good reputation and visibility, we obtained an explicit endorsement from the Swedish Association of Regions and Local Authorities. We also cooperated with Dagens Samhälle (The Daily Society), a weekly newspaper specializing in local and regional politics that is widely read by local and regional politicians. The newspaper published articles about the survey and interviewed the project leader when the fieldwork commenced.

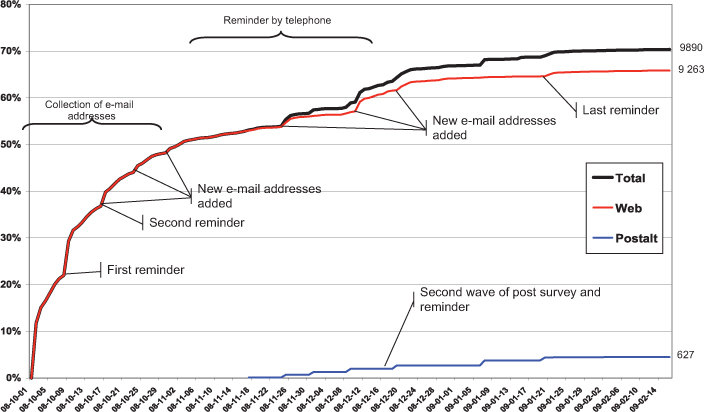

In October 2008, we approached the political representatives for the first time via e-mail and invited them to complete the questionnaire online. Within the first day, we had already achieved a response rate of over 10 percent. Figure 1 presents the number of responses per day.

We contacted the non-responding representatives several times, first via e-mail and then up to ten times via telephone. Finally, everyone who lacked an e-mail address, or showed no sign of having one, was offered a postal questionnaire.

Previous research has shown that offering non-respondents an alternative survey mode can significantly increase response rates (Dillman et al. 2008). This was the case in our study as well: offering a postal questionnaire to representatives who could not be reached via e-mail was an effective way to increase the number of responses. In total, 4.4 percent of the population responded using the postal questionnaire instead of the web survey. Hence, had we used the web survey as the only mode, we would probably have obtained a response rate of only about 66 percent.
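The web-only counterfactual follows from simple arithmetic on the reported figures. The sketch below assumes that the 4.4 percent postal share is measured against the full population of 14,085 representatives; the resulting number of postal responses is an approximation derived from that rounded share, not a count reported by the study, and the variable names are illustrative.

```python
# Figures reported in the text.
POPULATION = 14_085    # all elected municipal and regional councillors
RESPONSES = 9_890      # completed questionnaires, web and postal combined
POSTAL_SHARE = 0.044   # share of the total population answering by post


def rate(count: int, base: int) -> float:
    """Return count as a percentage of base."""
    return 100.0 * count / base


overall = rate(RESPONSES, POPULATION)

# Approximate postal count, reconstructed from the rounded 4.4 percent share.
postal_count = round(POSTAL_SHARE * POPULATION)

# Counterfactual: drop the postal responses and keep only the web responses.
web_only = rate(RESPONSES - postal_count, POPULATION)

print(f"overall response rate:   {overall:.1f}%")
print(f"approx. postal responses: {postal_count}")
print(f"web-only response rate:   {web_only:.1f}%")
```

Because the postal count is rebuilt from a rounded percentage, the web-only figure should be read as "about 66 percent", as in the text, rather than as an exact value.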

All in all, after four and a half months of fieldwork, we had received nearly 10,000 responses (9,890), a response rate of 70.2 percent. Figure 2 illustrates the cumulative response rate in percent and the major events of the data collection process.

Even though we regard our response rate as quite successful, non-response is of course not equally distributed among all groups of representatives. With regard to party affiliation, representatives of local parties (parties unique to a single municipality) are the most underrepresented (5.2 percent of respondents compared to 6.6 percent in the municipal councils). With regard to gender, the difference between respondents and the entire population is less than 0.5 percentage points (women are slightly underrepresented among respondents).

Among non-respondents, only 9.1 percent refused to answer the survey (2.7 percent of the total population). The largest shortcoming of the survey is that we failed to make contact with 10.3 percent of the total population (34.6 percent of the non-respondents). In retrospect, we should have started gathering e-mail addresses earlier. In addition, we believe that the response rate could have been even higher, since 45.7 percent of the non-respondents promised to answer when we reminded them but ultimately did not respond. The response rate is presented in Table 1, and the different forms of non-response are shown in Table 2.
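The parenthetical figures convert shares of the non-respondents into shares of the whole population. A minimal check in Python, using only the numbers reported above (the dictionary keys and variable names are illustrative):

```python
POPULATION = 14_085
RESPONSES = 9_890

# Shares of the non-respondents, in percent, as reported in the text.
NONRESPONSE_BREAKDOWN = {
    "refused": 9.1,
    "not contacted": 34.6,
    "promised but did not respond": 45.7,
}

# Non-response as a percentage of the whole population (about 29.8).
nonresponse_rate = 100.0 * (POPULATION - RESPONSES) / POPULATION

# Rescale each category from "share of non-respondents" to "share of population".
population_shares = {
    reason: nonresponse_rate * share / 100.0
    for reason, share in NONRESPONSE_BREAKDOWN.items()
}

for reason, pct in population_shares.items():
    print(f"{reason:30s} {pct:5.1f}% of the population")
```

The first two results reproduce the 2.7 and 10.3 percent given in parentheses; the third shows that roughly 13.6 percent of the whole population promised to answer but did not.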

Evaluating Mode Bias

The multimode design, combining a web survey and a postal questionnaire, gave us the opportunity to test how the data collection method affected the responses. Does the multimode design compromise the quality of the data, or is it a feasible strategy for increasing the response rate? We begin by comparing the patterns of item non-response in the web and postal questionnaires. The percentage of item non-response for each variable in the web and postal questionnaires is shown in Figure 3.
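Item non-response is computed separately for each mode as the share of respondents in that mode who left a given question unanswered. The sketch below illustrates the calculation on a few hypothetical toy records; the record layout and the function name `item_nonresponse` are assumptions for illustration, not the project's actual data structures.

```python
from typing import Optional

# Each record is (mode, answers); None marks a skipped question (hypothetical layout).
Record = tuple[str, list[Optional[int]]]


def item_nonresponse(records: list[Record]) -> dict[str, list[float]]:
    """Return, for each mode, the percentage of missing answers per question."""
    missing: dict[str, list[int]] = {}
    counts: dict[str, int] = {}
    for mode, answers in records:
        # Assumes every record has the same number of questions.
        per_item = missing.setdefault(mode, [0] * len(answers))
        counts[mode] = counts.get(mode, 0) + 1
        for i, answer in enumerate(answers):
            if answer is None:
                per_item[i] += 1
    return {
        mode: [100.0 * m / counts[mode] for m in per_item]
        for mode, per_item in missing.items()
    }


# Hypothetical toy data: four questions, two respondents per mode.
records: list[Record] = [
    ("web", [1, 2, 3, None]),
    ("web", [2, 2, None, None]),
    ("postal", [1, None, 3, 4]),
    ("postal", [3, 2, 1, 2]),
]
rates = item_nonresponse(records)
print(rates)
```

Plotting each mode's list against question position gives a chart of the kind shown in Figure 3; in the toy data, missing web answers cluster at the end while postal ones do not.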

Figure 3 shows that item non-response in the web survey gradually increases towards the end of the questionnaire, whereas item non-response in the postal questionnaire is more evenly distributed throughout. In all likelihood, this is an inevitable problem that survey researchers need to learn to accept. Our best, if rather obvious, suggestion is to place the most important questions as early in the web survey as possible. This may not work for all surveys, however, especially if the most important questions are complex, cognitively demanding, or open-ended.

In mixed mode surveys, an important question is whether the mode of data collection affects the results (e.g., Aritomi and Hill 2011; Malhotra and Krosnick 2007). Does the considerably less expensive web survey provide data of the same quality as the postal survey? Previous studies have pointed out that the mode of data collection might indeed affect the responses.

We tested for mode bias using six questions on respondents’ attitudes towards important political proposals in the Swedish political debate. For each question, we estimated two models. The first model included only a dummy variable for the data collection mode (0 = web, 1 = postal) to evaluate whether responses to the web survey differed from responses to the postal survey. The second model added a number of controls: gender, age, age squared, education, party affiliation and median income in the municipality. Results from these models are presented in Table 3a and Table 3b.

In the first model for each dependent variable, we find considerable differences related to survey mode for three of the six variables. The important question, however, is whether these differences are consequences of the survey mode, or whether they are spurious and simply reflect the fact that different groups of respondents used the web survey and the postal survey. Adding the controls allows us to evaluate whether there are genuine mode effects or whether the differences are related to factors such as age, education and party affiliation.
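The logic of comparing the two models can be illustrated with simulated data. The sketch below is hypothetical throughout: it generates synthetic respondents whose attitudes depend on age but not on mode, lets older respondents choose the postal questionnaire more often, and fits both models by ordinary least squares with NumPy. The paper does not state which estimator was used, and its second model includes more controls than age alone; the helper name `ols` and all parameter values are illustrative assumptions.

```python
import numpy as np


def ols(y: np.ndarray, *columns: np.ndarray) -> np.ndarray:
    """Ordinary least squares with an intercept; returns [intercept, b1, b2, ...]."""
    X = np.column_stack([np.ones_like(y), *columns])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


rng = np.random.default_rng(2008)
n = 20_000

# Synthetic respondents: the probability of answering by post rises with age,
# and the attitude depends on age but NOT on the survey mode.
age = rng.uniform(20, 80, n)
postal = (rng.random(n) < 0.4 * (age - 20) / 60).astype(float)  # 0 = web, 1 = postal
attitude = 0.05 * age + rng.normal(0, 1, n)

model1 = ols(attitude, postal)       # mode dummy only
model2 = ols(attitude, postal, age)  # mode dummy plus an age control

print(f"model 1 mode coefficient: {model1[1]:.3f}")
print(f"model 2 mode coefficient: {model2[1]:.3f}")
```

In this setup the mode coefficient is clearly positive in the first model, because postal respondents are older on average, and shrinks towards zero once age is controlled for: the pattern of a spurious mode effect.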

Looking more closely at the second model for each dependent variable, we find that the differences related to survey mode are spurious: they arise because individuals with different characteristics used different modes. After the controls are added, a mode effect remains only for the question on nuclear power. Since the mode effect disappears entirely for all other variables, this remaining effect is likely to be due to omitted variable bias. In other words, the differences related to mode are not effects of the mode itself; they reflect the fact that different kinds of respondents choose different modes. We therefore conclude that web surveys can be combined with postal surveys in future surveys of groups such as political representatives without compromising the quality of the results or increasing the risk of mode bias.

Possible Improvements in Further Studies

On the basis of our study of all 14,085 Swedish local and regional political representatives, we argue that the following three strategies should be employed in further research:

- Collect as many e-mail addresses as possible in advance. Had we been more patient and spent more time on this, perhaps reaching 70 percent of the representatives we never contacted and obtaining answers from 70 percent of them, our response rate would have been about 75 percent instead of 70 percent.

- Follow up with respondents who promise to answer but do not. If half of the non-respondents who promised to answer had done so, the response rate would have increased by roughly another 7 percentage points, reaching about 82 percent in combination with the first strategy.

- Employ mixed mode designs. Combining a web survey, a postal survey and reminder telephone calls is an effective way to increase the response rate, and it does not seem to bias the results. What appeared to be mode bias in our survey was in fact spurious correlation: when we controlled for a set of background characteristics, the apparent mode bias disappeared.
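The counterfactual response rates in the first two strategies can be reproduced from the non-response breakdown reported earlier. The 70 and 50 percent success rates are the hypothetical assumptions stated in the list; the sketch simply applies them to the reported shares (variable names are illustrative).

```python
POPULATION = 14_085
RESPONSES = 9_890

base_rate = 100.0 * RESPONSES / POPULATION  # about 70.2 percent
nonresponse_rate = 100.0 - base_rate        # about 29.8 percent

# Strategy 1 (hypothetical): reach 70 percent of the never-contacted
# representatives (34.6 percent of non-respondents), 70 percent of whom answer.
not_contacted = nonresponse_rate * 0.346
gain_email = not_contacted * 0.70 * 0.70

# Strategy 2 (hypothetical): half of those who promised to answer
# (45.7 percent of non-respondents) actually do so.
promised = nonresponse_rate * 0.457
gain_follow_up = promised * 0.50

after_email = base_rate + gain_email
after_both = after_email + gain_follow_up

print(f"after strategy 1:       {after_email:.1f}%")
print(f"gain from strategy 2:   {gain_follow_up:.1f} percentage points")
print(f"after strategies 1 + 2: {after_both:.1f}%")
```

The gains are additive because the two strategies target disjoint groups of non-respondents (those never contacted and those who were contacted and promised to answer).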

Acknowledgments

A previous version of this paper was presented at the Annual AAPOR Conference in Phoenix, May 2011. We would like to thank Emma Andersson for valuable comments on a draft version of the paper.

The dataset containing The Swedish Municipal and County Council Survey 2008–2009 can be obtained upon request from the principal investigators (the codebook and dataset are so far only available in Swedish). Since the data contain sensitive information, we require researchers to follow a set of rules. For further information, contact david.karlsson@spa.gu.se.

One reason for surveying all local politicians is to be able to report reliable results for each municipality and to maximize the possibilities of using a comparative research approach (we have used the survey for this purpose in Gilljam, Karlsson and Sundell 2010). Surveying the total population also maximizes the opportunity to explore the multilevel structure of the data and analyze the variance in representatives’ attitudes at both the individual level and the municipality level (the survey is used for this purpose in Gilljam, Persson and Karlsson 2011). Where citizen surveys are already in place, our survey of the total population also facilitates interesting comparisons between representatives’ attitudes and citizens’ attitudes at the municipality level. In addition, results can be aggregated to the municipality level and used in analyses with the municipality as the unit of analysis, for example in studies of how ideology affects the level of privatization (the survey is used for this purpose in Sundell and Lapuente 2011) and how the gender composition of the local parliament affects the economic performance of the municipality (e.g., Wängnerud and Sundell 2011).

The survey was conducted by researchers employed in the project “What Politicians Mean by Democracy”. The project was located at the Department of Political Science, University of Gothenburg, Sweden, financed by the Swedish Research Council and led by Professor Mikael Gilljam.

