Introduction

Research from the 1940s onward has shown that respondents more often answer “no” or “disagree” to negative questions than “yes” or “agree” to positive ones (e.g., Rugg 1941). This holds both for questions with an explicit sentence negation such as not (“The government cannot cut down on social work”; e.g., Holleman et al. 2016), and for questions with an implicit negation, containing a word with negative valence (cf. Warriner et al. 2013), such as forbid (“Do you think the government should forbid the showing of X-rated movies”; e.g., Schuman and Presser 1981/1996). Hence, someone’s opinion about an attitude object seems to be more positive when the question is phrased negatively (for an overview, see Kamoen et al. 2013).

These question polarity effects on the mean substantive answers have sparked a debate on which question wording is best (e.g., Chessa and Holleman 2007; Holleman 2006; also see discussions about unipolar versus bipolar questions, e.g., Friborg et al. 2006; Saris et al. 2010). No-opinion answers are an important proxy for (a lack of) data quality, as survey respondents frequently choose such answers to indicate comprehension problems (e.g., Deutskens et al. 2004; Kamoen and Holleman 2017). The current research therefore investigates the effect of question polarity on nonsubstantive answers: the proportion of no-opinion answers. To the best of our knowledge, no-opinion answers have not previously been analyzed as a dependent variable in polarity research. This is probably because survey respondents shy away from providing no-opinion answers (Krosnick and Presser 2010), which means that a large sample size is needed to demonstrate any effect.

The Complexity of Positive vs. Negative Questions

Survey handbooks acknowledge the advantages of mixing positive and negative wording in sets of questions in order to “alert inattentive respondents that item content varies” (Swain, Weathers, and Niedrich 2008, 116) and also to detect straightliners (e.g., Sudman and Bradburn 1982; Weisberg 2005). Yet, they also warn against using negative questions in abundance (e.g., Dijkstra and Smit 1999; Dillman et al. 2009; Korzilius 2000). This is because negative questions are more difficult to process than their positive counterparts. Outside of a survey context, it has been shown repeatedly that negatives take more processing effort than their positive equivalents (e.g., Clark 1976; Hoosain 1973; Sherman 1973). Horn (1989, 168) summarizes that: “all things being equal, a negative sentence takes longer to process and is less accurately recalled and evaluated relative to a fixed state of affairs than the corresponding positive sentence”. This holds both for sentences that include an explicit negation (e.g., not happy/happy) and for sentences that include an implicit negative, and it generalizes across morphological markedness (e.g., unhappy/happy vs. sad/happy) and across semantic types such as verbs (e.g., forget/remember) and contradictory adjectives (e.g., absent/present) (Clark and Clark 1977). The presumed cause of these processing differences is that negatives must be converted into positives before they can be understood (see Clark 1976; Kaup et al. 2006).

A second reason for survey handbooks to advise against the use of negative questions is that the answers to negative questions are relatively hard to interpret. This is because it is counterintuitive for respondents to answer ‘no’ or ‘disagree’ to indicate that they favor an attitude object (Dillman, Smith, and Christian 2009). For questions with an explicit negative, this confusion is particularly large, because in daily language use a no-answer to a question with an explicit negation indicates agreement with the negated statement. For example, one would probably answer “No, asylum seekers should not be allowed” to indicate agreement with the statement “Do you think the government should not allow any more asylum seekers” (example taken from Dijkstra and Smit 1999, 84). In a survey context, however, a yes-answer is the desired response to indicate agreement. This causes difficulties in interpreting the meaning of yes/no and agree/disagree-answers to questions with an explicit negation. On top of that, questions with explicit negatives sometimes generate invalid responses, because fast responders miss the negative term and therefore provide a response that does not match their opinion (e.g., Dillman et al. 2009).

Taken together, based on survey handbooks and linguistic research, we may assume that negative questions are more difficult to process than their positive counterparts. As no-opinion answers are an important proxy for question complexity (e.g., Deutskens et al. 2004; Kamoen and Holleman 2017), we expect more no-opinion answers for negative questions than for positive ones. We will test this hypothesis in the context of a specific type of survey called a voting advice application (VAA). VAAs are online tools that help users determine which party to vote for in election times. These tools have become increasingly popular in Europe over the past decades, reaching up to 40% of the electorate in countries such as the Netherlands (see Marschall 2014). In a VAA, users express their attitudes to a set of survey questions about political issues. These questions are formulated by a commercial or government-funded VAA developer, in dialogue with the political parties running in the election. Based on the match between the user’s answers and the parties’ issue positions, the tool subsequently provides a personalized voting advice. In the calculation of this advice, no-opinion answers are excluded (De Graaf 2010; Krouwel, Vitiello, and Wall 2012). As VAA developers want to base their voting advice on as many VAA questions as possible, an investigation of the effect of question polarity on the proportion of no-opinion answers is practically relevant too: it would be problematic if one wording led to more no-opinion answers than another. This is especially true since several studies have shown that the VAA voting advice has an impact on users’ vote choice (e.g., Andreadis and Wall 2014; Wall et al. 2012).

Method

Design and Materials

During the Dutch municipal elections of March 2014, we conducted a real-life field experiment on a VAA developed for the municipality of Utrecht, which is the fourth largest city in the Netherlands with 258,087 inhabitants. In collaboration with VAA developer Kieskompas and all of the 17 political parties running in the elections, four experimental versions of Kieskompas Utrecht were constructed in addition to the standard (benchmark) version.[1]

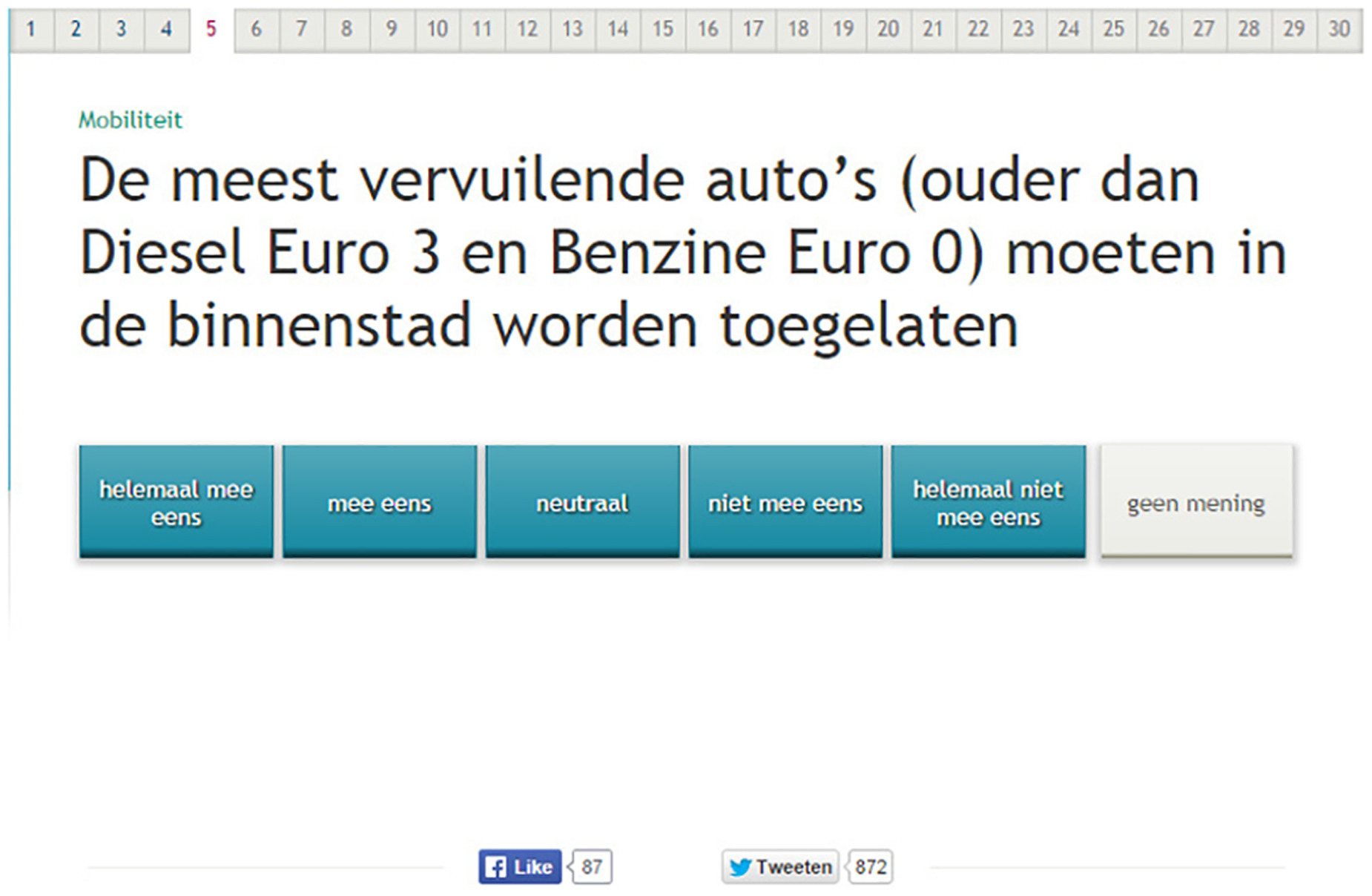

In the experimental VAA versions, the polarity of the question was varied for 16 out of 30 questions (see Figures 1 and 2 for an example). These manipulations can be divided into two types. A total of 10 questions contained an explicit sentence negation (e.g., ‘The municipality can cut down/cannot cut down on social work’). The remaining 6 manipulations contained an implicit negative, that is, a word with negative valence (e.g., ‘The requirement for a building permit of one’s own house should remain to exist/be abolished’). Research shows that language users can easily distinguish between words with positive versus negative valence (Hamilton and Deese 1971), and all the implicit negative terms used in the current research scored high on negative valence in an empirical study (Warriner, Kuperman, and Brysbaert 2013)[2]. All experimental materials can be found in the supplemental materials.

The manipulated questions were distributed across the VAA versions in such a way that each VAA contained an equal number of positive and negative items. All of the Kieskompas Utrecht visitors (N = 41,505) were randomly assigned to either the standard Kieskompas version, or to one of the experimental versions.[3]

Participants

Kieskompas Utrecht was launched on February 18, 2014. Between February 18 and March 19 (Election Day), the tool was visited 41,505 times.[4] For the purposes of the current study, we focus on those VAA users who were randomly assigned to one of the experimental versions of Kieskompas, which means that VAA users who were assigned to the benchmark version (N = 7,812) were excluded. This was necessary because the benchmark version differed in more than one respect from the four experimental versions, as the benchmark version did not contain headings above the question.

In addition, we only took into account those VAA users who were 18 or older (and hence eligible to vote), for whom it took longer than 2 minutes to fill out all 30 statements, and who did not show straight-lining behavior (i.e., reported the same answer to each and every statement). This cleaning method is similar to the one used in Van de Pol et al. (2014). Cleaning the data led to the exclusion of another 2,581 cases, which means that 31,112 Kieskompas users were included in the analyses.
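The sample flow described above can be retraced with a few lines of arithmetic. The sketch below is only a bookkeeping aid: the variable names are our own, and the counts are the ones reported in the text.

```python
# Sample flow for Kieskompas Utrecht, using the counts reported in the text.
total_visits = 41_505        # all visits between February 18 and March 19
benchmark_version = 7_812    # visits assigned to the benchmark version (excluded)
removed_in_cleaning = 2_581  # under 18, 2 minutes or less, or straight-lining

# Visits assigned to one of the four experimental versions.
assigned_to_experiment = total_visits - benchmark_version

# Cases that remain after data cleaning.
final_sample = assigned_to_experiment - removed_in_cleaning

print(assigned_to_experiment)  # 33693
print(final_sample)            # 31112
```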

In our final sample, the male/female division is about equal (50.7% female). The mean age is 37.3 years (SD = 13.8). VAA users are fairly highly educated (the median category was higher vocational education or university bachelor), and rather interested in politics (mean of 3.3 on a 5-point scale, SD = 0.83). These imbalances with respect to educational level and political interest are very common for samples of VAA users (Marschall 2014).

In order to check the randomization, we compared the experimental versions with respect to age (F(3, 22207) = 1.58; p = .19), gender (χ2 (3) = 2.90; p = .41), educational level (χ2 (3) = 11.90; p = .85) and interest in politics (F(3, 21990) = 0.34; p = .80). As none of these tests showed a difference between conditions, there is no reason to assume that there are a priori differences between the VAA users in the experimental conditions.

Measurement and Analyses

To analyse the effect of statement polarity on the proportion of no-opinion answers, we constructed a binary variable indicating whether the VAA user provided a no-opinion answer (0) or a substantive answer (1) to each of the 16 manipulated questions. This binary dependent variable was subsequently predicted in the logit multilevel model displayed in Equation 1 below. In this model, Y(jk) indicates whether or not individual j (j = 1, 2, …, 31,112) gives a substantive answer to question k (k = 1, 2, …, 16). In the model, two cell means (Searle 2006) are estimated in the fixed part of the model: one for positive and one for negative question wordings.

To estimate these, dummy variables are created that are turned on if the observation matches the prescribed type (D_POS or D_NEG). Using these dummies, two logit proportions are estimated (β1 and β2), which may vary between persons (u1j, u2j) and questions (v0k)[5]. The model assumes that the proportion of no-opinion answers is nested within items and respondents at the same time. This means that a cross-classified model is in operation (Quené and Van den Bergh 2004, 2008). Please note that while the person-variance is estimated separately for positive and negative wordings, the question variance is estimated only once. This is a constraint of the model. All residuals are normally distributed with an expected value of zero, and a variance of, respectively, S²u1j, S²u2j, and S²v0k.

Equation 1:

Logit Y(jk) = D_POS(jk)(β1 + u1j) + D_NEG(jk)(β2 + u2j) + v0k
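To make the structure of Equation 1 concrete, the following minimal sketch evaluates its right-hand side for a single person-question observation and converts the resulting logit into a proportion. It is an illustration only and not the estimation procedure used in the study: the function names are our own, and all numeric values are hypothetical.

```python
import math


def inverse_logit(x: float) -> float:
    """Map a logit back to a proportion between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def logit_substantive(is_negative: bool, beta1: float, beta2: float,
                      u1j: float = 0.0, u2j: float = 0.0,
                      v0k: float = 0.0) -> float:
    """Right-hand side of Equation 1 for one person-question observation."""
    d_pos = 0 if is_negative else 1  # dummy D_POS
    d_neg = 1 - d_pos                # dummy D_NEG
    return d_pos * (beta1 + u1j) + d_neg * (beta2 + u2j) + v0k


# Hypothetical values, chosen for illustration only (not estimates from the study).
beta1, beta2 = 2.5, 2.4  # cell means for positive and negative wordings (logits)
u1j, u2j = 0.3, 0.1      # one person's deviations for positive/negative wordings
v0k = -0.2               # one question's deviation

p_pos = inverse_logit(logit_substantive(False, beta1, beta2, u1j, u2j, v0k))
p_neg = inverse_logit(logit_substantive(True, beta1, beta2, u1j, u2j, v0k))
print(round(p_pos, 3), round(p_neg, 3))
```

Because exactly one of the two dummies equals 1 for any observation, only one cell mean and one person-level deviation contribute to a given logit, while the question-level deviation v0k enters under both wordings.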

Results

The first row in Table 1 shows the mean proportion of substantive answers across the 16 manipulated statements. A comparison of positive and negative wordings shows that there is no effect of question polarity on the number of nonsubstantive answers (χ2 = 0.04; df = 1; p = .95).

As we did not observe an overall polarity effect, we also explored the effect of question polarity for each of the two types of negatives separately. The second row of Table 1 shows the polarity effect for explicit negatives (N = 10). In line with prior expectations, we observed that negative questions generate more no-opinion answers than their positive equivalents (χ2 = 14.00; df = 1; p < .001). The size of this effect, however, is tiny relative to both the between-person standard deviation (Cohen’s d = 0.03) and the between-question standard deviation (Cohen’s d = 0.05). In absolute terms, the chance of providing a no-opinion answer is about 5% larger for negative questions than for positive ones.

The third row of Table 1 displays the effect of question polarity for the subset of items containing an implicit negative (N = 6). Also for this subset of items, an effect of question polarity is observed, albeit in a different direction: contrary to expectation, positive questions yield more no-opinion answers than their negative equivalents (χ2 = 35.5; df = 1; p < .001). The size of this effect is small compared to the differences between respondents (Cohen’s d = 0.07), and substantive but small compared to the differences between items (Cohen’s d = 0.28). The chance of providing a no-opinion answer is roughly 14% larger for positive questions than for negative ones.
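The effect sizes above express the polarity difference relative to a standard deviation. The sketch below shows one way such a standardized difference can be computed. It assumes that the difference between the two logits is divided by the square root of the relevant variance component; the text does not spell out the formula, so this is an assumption. The function name and all numeric values are hypothetical and are not taken from Table 1.

```python
import math


def standardized_difference(logit_a: float, logit_b: float,
                            variance: float) -> float:
    """Absolute difference between two logits divided by a standard deviation."""
    if variance <= 0:
        raise ValueError("variance must be positive")
    return abs(logit_a - logit_b) / math.sqrt(variance)


# Hypothetical logits and variance components, for illustration only.
logit_positive, logit_negative = 2.50, 2.45
person_variance = 2.25    # between-person variance (SD = 1.5)
question_variance = 0.64  # between-question variance (SD = 0.8)

d_person = standardized_difference(logit_positive, logit_negative, person_variance)
d_question = standardized_difference(logit_positive, logit_negative, question_variance)
print(round(d_person, 3), round(d_question, 3))
```

With these placeholder values, the same logit difference looks smaller against the larger between-person variation than against the between-question variation, which mirrors the pattern of the two effect sizes reported per comparison.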

Conclusion

The current research investigated, in the context of an online VAA, whether the proportion of no-opinion answers depends on an important question characteristic: the choice for a positive or a negative statement wording. Across a set of 16 manipulated questions we find no overall effect of question polarity. This is contrary to expectations, because survey handbooks (e.g., Dijkstra and Smit 1999; Dillman et al. 2009; Korzilius 2000) as well as linguistic research (Clark 1976; Kaup, Ludtke, and Zwaan 2006) point out that negative questions and their answers are structurally more difficult to comprehend than their positive counterparts are. We did observe polarity effects when analysing two types of negatives separately. For questions including an explicit negation (e.g., not or none), we observed more no-opinion answers for the negative question versions as compared to their positive equivalents. The reverse was true for the set of implicit negatives (e.g., forbid/allow): For these pairs, the positive wording generated more no-opinion answers.

Discussion

We can only speculate about the reasons for the unexpected finding that implicit negatives yield fewer no-opinion answers than their positive equivalents. One explanation is that implicit negatives are actually easier to process than their positive counterparts. This explanation is consistent with work on the forbid/allow-asymmetry. In a semantic analysis of forbid and allow questions, it has been shown that the meaning of forbid-questions is well-defined, as forbidding always indicates “…an act of inserting a barrier, and to a force dynamic pattern which brings about change”, whereas allow-questions are more ambiguous, because allowing “may imply causing (removing a barrier) as well as letting (not inserting a barrier)” (Holleman 2000, 186). In line with this work, it has been shown that the answers to a set of forbid-questions are more homogeneous, and therefore more reliable, than answers to a comparable set of allow-questions (Holleman 2006). Hence, if these results generalize to other contrast pairs, the results of the present study can be explained by the fact that implicit negatives have a clearer meaning than their positive equivalents.

Alternatively, not (just) the linguistic form, but the match between the linguistic form and the status quo of the question topic may explain our findings. In our experimental materials, the items including an implicit negative (e.g., forbid/allow) always related to situations where the status quo matched the positive wording (e.g., circuses with animals are currently allowed). By contrast, for items including an explicit negation (e.g., may/may not cut down), the negative wording always matched the status quo (e.g., there are no cut-downs on art and culture). This means that we can also rephrase our results such that, irrespective of the linguistic form, wordings representing the current state of affairs generate more no-opinion answers than wordings that represent change. The idea that the appropriateness of a linguistic form in its usage context determines processing complexity is consistent with theories from pragmatic linguistics (e.g., Sperber and Wilson’s Relevance Theory 1995). According to this work, language users want to make their contribution to a discourse situation as informative as required for the purposes of the exchange. In a political attitude context, wordings that represent change with respect to the status quo are probably more informative than wordings that describe the current state of affairs. This is because most citizens have little knowledge of the exact political issues at stake (Delli Carpini and Keeter 1996), whereas they do have knowledge about the status quo, as they encounter this status quo in their daily lives (Lupia 1992). To disentangle these two explanations, we propose a future study in which both the question wording (positive, implicit negative, explicit negative) and the status quo (issue X is currently allowed in municipality 1 and forbidden in municipality 2) are varied.

Although we cannot yet explain the current findings, they are clearly relevant for survey and VAA practice. In a VAA context, the answers VAA users give to the political attitude questions directly influence the voting advice (De Graaf 2010; Krouwel, Vitiello, and Wall 2012). As the voting advice affects vote choices (e.g., Andreadis and Wall 2014), we believe that it is legitimate to conclude that our results are in fact important for VAA developers, even though the statistical size of the effects observed is small. Taking the odds ratio as a standard of comparison, about 1 in 20 no-opinion answers can be avoided if explicit negations matching the status quo are replaced by positive wordings representing change. Hence, if there is a political discussion about whether or not new houses should be built in a certain area, a question wording such as “New houses should be built in area X” should be preferred over the negative phrase “There should be no new houses built in area X”. In addition, roughly one out of every 7 no-opinion answers can be avoided when positive wordings that match the status quo are replaced by implicit negatives describing change. Hence, if there is a debate about whether taxes on housing should or should not continue to exist, one can better ask respondents to react to the statement “Taxes on housing should be abolished”, rather than “Taxes on housing should remain to exist”.

Moreover, our results are also relevant for the broader context of political attitude surveys and surveys on policy issues, as these surveys include questions very similar to the ones we find in VAAs. Consider, for example, questions in Eurobarometer (e.g., asking if respondents are pro or con a European economic and monetary union with one single currency, the Euro; Eurobarometer 2015, QA18.1), or popular polls in newspapers and other media (e.g., Do you think Ukraine should become a member of the EU?; https://www.burgercomite-eu.nl/peiling-maurice-de-hond/). The only difference between a VAA and these other political attitude survey contexts might be the type of respondents: VAA users may be more motivated to fill out the questionnaire as they are rewarded with a personalized advice (Holleman, Kamoen, and Vreese 2013). Moreover, the VAA users in our sample appeared to be rather highly educated and fairly interested in politics. We know from Krosnick’s work on survey satisficing (e.g., Krosnick 1991) that the more motivated, highly educated and interested in the survey topic the respondent is, the smaller the effect of various wording variations on reported attitudes. Hence, if VAA users are really more motivated (but studies showing otherwise are Baka et al. 2012; Kamoen and Holleman 2017), highly educated and interested, this would imply that polarity effects may be even larger in these other political attitude contexts.

Overall, we conclude that questions about political issues can best be phrased in terms of a change with respect to the status quo, and that question designers should not shy away from using implicit negatives in doing so; this can reduce the proportion of no-opinion answers. Therefore, if a country is currently in the European monetary union, it is better to ask whether the country should leave this union than to ask whether it should stay in.

Funding

This work was supported by the Dutch Science Foundation (NWO), grant number 321-89-003.

[1] The project description was approved prior to fielding the study by Utrecht University, the Dutch Science Foundation, and the Utrecht City Council. Visitors always entered Kieskompas Utrecht voluntarily, and they could stop filling out the VAA at any point in time.

[2] Warriner et al. (2013) report valence scores on a scale from 1 (negative) to 9 (positive) for 13,915 English words. Human evaluators assign these scores. Across all reported words, the average valence score is 5.06. The implicit negative terms used in the current research (forbid, ban, stop, abolish, and force) had valence scores between 2.82 and 4.73. For the positive equivalents (allow, decide for yourself, remain to exist, maintain, continue) scores ranged between 5.61 and 6.39.

[3] A total of 13 out of the 16 manipulated questions also contained a manipulation of issue framing, operationalized by variation in the heading above the question (left-wing or right-wing). So, in fact, these questions were manipulated following a 2 (question polarity: positive or negative) x 2 (heading: left-wing or right-wing) design. As the effect of question polarity did not interact with the effect of the headings, we decided to report the effect of the headings elsewhere (authors, under review).

[4] It is impossible to check whether all visitors are unique users, because monitoring IP-addresses would violate VAA users’ privacy. Even if IP-addresses were available, it would be impossible to distinguish unique users based on IP-address, because multiple users may access the tool from the same IP-address and the same user may access the tool from various IP-addresses. If the same users filled out the VAA twice (or more), they would again be assigned randomly to a VAA version and receive a VAA consisting of positive and negative questions. We expect that multiple usage might decrease effect sizes, but will not affect the direction of the effects.

[5] Please note that the model implies that there is variance due to the interaction between respondent and item. However, because the dependent variable in the model is binomial, this variance is not estimated, as it is fixed if the mean proportions are known. The interaction variance can be approximated by applying the formula p * (1-p), in which p represents the estimated proportion of no-opinion answers for positive and negative questions, respectively.
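The approximation p * (1-p) mentioned in this note can be illustrated with a short sketch. The function name is our own, and the proportions are hypothetical placeholders rather than the estimates from Table 1.

```python
def binomial_variance(p: float) -> float:
    """Variance of a single binary outcome with probability p: p * (1 - p)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie between 0 and 1")
    return p * (1.0 - p)


# Hypothetical proportions of no-opinion answers (illustration only).
p_no_opinion_positive = 0.05
p_no_opinion_negative = 0.06

for label, p in [("positive", p_no_opinion_positive),
                 ("negative", p_no_opinion_negative)]:
    print(label, round(binomial_variance(p), 4))
```

Since p * (1-p) is fully determined by p, this variance component carries no information beyond the mean proportion, which is why it does not need to be estimated separately.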