Background

In 2021, the number of people in need of humanitarian assistance worldwide reached almost a quarter of a billion, constituting more than 2.5% of the global population. Between August 2020 and July 2021, 80 million surveys were submitted to just one of the most popular data collection platforms in the humanitarian sector.[1] Obtaining accurate information from affected populations is crucial, as the results of these surveys directly affect what kind of aid implementing agencies provide, how they provide it, and to whom.

This research forms part of Ground Truth Solutions’ continued engagement with crisis-affected communities in Bangladesh and took place in the Kutupalong–Balukhali expansion site in Cox’s Bazar. Surveys among the Rohingya refugee population, including humanitarian needs assessments, are mainly conducted by Bangladeshi interviewers native to the area. Given considerable evidence that perception surveys of Rohingya refugees can exhibit significant bias (ACAPS / IOM 2021; Ground Truth Solutions 2020; REACH 2021), this study aims to examine whether the ethnicity of the interviewer biases responses, and thus whether choosing an interviewer of the same ethnicity as the respondent would produce results more closely corresponding to realities on the ground.

Literature review

Ethnicity-of-interviewer effects have been well studied in the United States, mainly in interviews between black and white interviewers and interviewees. Evidence from other parts of the world is much more limited, with only a few studies published in the last decade, for example on a selection of African countries (Adida et al. 2016), Timor Leste (Himelein 2015) and the Arabian Peninsula (Gengler, Le, and Wittrock 2019). To our knowledge, no systematic study has been undertaken in a refugee or humanitarian context.

Ethnicity-of-interviewer effects have been shown to be more pronounced for sensitive or taboo topics, such as sexual activity, fraud, and racism (Krumpal 2013). Specifically considering race, studies find that the extent of bias depends largely on the racial sensitivity of the question (Cotter, Cohen, and Coulter 1982; Heelsum 2013; Holbrook, Johnson, and Krysan 2019; Kappelhof 2017; Schaeffer 1980). United States-based studies indicate that both black and white respondents adjust their answers relative to the ethnicity of the interviewer to avoid offence (Campbell 1981; West and Blom 2017). Questions relating to ethnicity may see larger interviewer effects as “respondents relate questions about interethnic relations directly to the relationship with the interviewer” (Heelsum 2013). Thus, such questions cause the interviewee to stress similarities and to avoid criticisms. Little attention has been paid thus far to ethnicity-of-interviewer effects on questions not directly related to ethnicity (Holbrook, Green, and Krosnick 2003).

Social desirability bias is the tendency of respondents to offer answers shaped by the perceived cultural expectations of others; it arises in conditions in which respondents feel pressure to answer survey questions in socially acceptable ways (Dillman and Christian 2005). More generally, the presence of an interviewer has long been shown to elicit more socially desirable answers and acquiescence (a tendency to agree with the interviewer) from the interviewee (Dillman and Christian 2005).

The intersection of social desirability and race has also been studied, with respondents often anticipating the interviewer’s opinion based on the interviewer’s race and adjusting their responses accordingly. For example, Adida et al. (2016) report in a study focusing on 14 African countries that respondents interviewed by non-co-ethnics consistently gave more socially desirable answers that presented their ethnic group in a positive light. Similar bias has also been found in studies on immigrant populations in Europe (Heelsum 2013). Indeed, one explanation for the presence of social desirability bias is that interviewer ethnicity provides a visual or audible indication of the interviewer’s likely opinion on certain questions (Kühne 2020; Wolford et al. 1995). Thus, we would expect social desirability bias only for questions on which the interviewer’s opinion can be assumed based on characteristics such as ethnicity, and not for neutral questions where such factors would not be informative. This is supported, for example, by a study from Timor Leste which found larger effects on subjective perception questions than on objective, factual questions (Himelein 2015).

Of particular relevance for the refugee context are those studies that take into account the underprivileged minority position of a particular ethnic group. Ethnicity-of-interviewer effects may be particularly strong here, since discrimination that minority groups face increases pressure to socially conform to the interviewer (Holbrook, Green, and Krosnick 2003). Mistrust or uneven power relations may also affect the answers respondents are comfortable giving (Heelsum 2013), which is why qualitative field research in particular emphasises relationship building as a key research step (Funder 2005). Adida et al. (2016) found that politically salient non-co-ethnic dyads—that is, groups that have a history of conflict—show larger interviewer effects than dyads that are not caught up in political tensions. This is a particularly important consideration in this study, given Rohingya refugees often experience strained relations with Bangladeshi host communities.

Hypotheses and Research Objectives

Following West and Blom’s (2017) application of the total survey error framework in the context of interviewer effects, we can distinguish three areas of bias when conducting surveys: coverage errors, stemming from how interviewers sample from the population; nonresponse bias, resulting from how successfully interviewers make contact with and elicit responses from interviewees; and measurement errors, which relate to the content of the responses provided. This research focuses on measurement errors, since coverage and nonresponse errors can largely be controlled in refugee camp settings where surveys are primarily conducted face-to-face and nonresponse rates are low.

Our hypotheses are as follows:

- Rohingya refugees report more positive opinions about the humanitarian response to Bangladeshi interviewers compared to Rohingya interviewers.

- The difference in perception scores given to Rohingya and Bangladeshi interviewers will be greatest on questions relating to ethnicity or race, such as questions on abuse or mistreatment by particular ethnic groups.

- Rohingya respondents interviewed by an interviewer from the same ethnic group will be more likely to admit to behavior perceived to be socially undesirable, such as selling aid to buy other items.

Research design

This study compares survey results of Rohingya refugees reported to Bangladeshi and Rohingya interviewers. We demonstrate which questions have the largest differences between interviewer groups, identifying topics that may be particularly prone to bias.

The questionnaire was designed in collaboration with Rohingya interviewers and researchers from the International Organization for Migration’s Communication with Communities team (IOM CwC), drawing from themes commonly explored by perception monitoring work in the humanitarian sector. The survey consisted of 40 questions, most of which were binary in nature.

Both Bangladeshi and Rohingya enumerators received the same training on the survey and how to use the data collection software. Bangladeshi interviewers were part of a 100-member enumeration team that regularly conducts surveys and assessments in the camps. Rohingya interviewers were less experienced in quantitative enumeration than their Bangladeshi counterparts, and so received training on both qualitative and quantitative data collection methods through IOM CwC. It is unclear to what extent, if at all, the Rohingya interviewers’ additional qualitative training impacted how the interviews were conducted and therefore the results of the research.

Sampling

We used purposive sampling to select 5 out of the 34 camps in the Kutupalong–Balukhali expansion site based on camp size, population density, and level of refugee community interaction with host communities. Given the high population density and lack of census data in the camps, a geo-information-systems-based sampling approach was used (Eckman and Himelein 2019). A minimum of 125 coordinates per camp and interviewer group were randomly generated, and interviews were conducted at these locations. With a minimum sample size of 125 per camp, the margin of error amounts to a maximum of 10 percentage points at a 95% confidence level per camp. The margin of error for overall estimates of all five camps combined amounts to a maximum of 4.5 percentage points at a 95% confidence level (Thompson 1987).
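The margins of error quoted above can be reproduced with a short calculation. The sketch below is an illustration under stated assumptions, not the authors' own code: it assumes the figures follow the worst-case bound for simultaneous confidence intervals on multinomial proportions associated with Thompson (1987), in which the worst case is taken over m equally likely categories, each tested at a Bonferroni-adjusted level. The function names `binomial_margin` and `thompson_margin` are hypothetical. The simpler single-proportion margin is included for comparison.

```python
from math import sqrt
from statistics import NormalDist


def binomial_margin(n: int, confidence: float = 0.95) -> float:
    """Worst-case (p = 0.5) half-width of a normal-approximation CI for one proportion."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * sqrt(0.25 / n)


def thompson_margin(n: int, confidence: float = 0.95, max_categories: int = 20) -> float:
    """Worst-case half-width for simultaneous CIs on multinomial proportions.

    Assumption: the worst case is m equally likely categories, each tested at
    the Bonferroni-adjusted level alpha / m; we search over m to find it.
    """
    alpha = 1 - confidence
    worst = 0.0
    for m in range(2, max_categories + 1):
        z = NormalDist().inv_cdf(1 - alpha / (2 * m))
        worst = max(worst, z * z * (1 / m) * (1 - 1 / m))
    return sqrt(worst / n)


# 125 interviews per camp and interviewer group; five camps combined.
for label, n in [("per camp", 125), ("all five camps", 5 * 125)]:
    print(f"{label}: multinomial {thompson_margin(n):.3f}, "
          f"single proportion {binomial_margin(n):.3f}")
```

Under these assumptions the multinomial bound comes out at roughly 10 percentage points for 125 interviews and roughly 4.5 percentage points for 625, while the single-proportion margin is somewhat smaller in both cases, which is consistent with the figures being described as maxima.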

Analytical methods

Multivariate non-parametric tests were employed to test the significance of the differences in responses between the two interviewer groups. We used the npmv package in R, which provides non-parametric versions of well-known multivariate tests[2] (Burchett et al. 2017).

Subsequently, univariate significance tests were employed to discern which individual questions show a significant difference and how large that difference is. For ordinal Likert scale questions, we used a Wilcoxon rank sum test, and for binary data, Fisher’s exact test. In both cases, because of the multiple comparisons, we applied the Benjamini–Hochberg procedure to control the false discovery rate.
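To make the univariate step concrete, the following sketch shows how a two-sided Fisher’s exact test on a 2x2 table and a Benjamini–Hochberg adjustment fit together. It is a minimal illustration in Python rather than the R code used in the study; the function names and the yes/no counts in `tables` are hypothetical and are not taken from the survey data.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(k: int) -> float:
        # Hypergeometric probability of k in the top-left cell, margins fixed.
        return comb(row1, k) * comb(row2, col1 - k) / total

    observed = prob(a)
    p_value = 0.0
    for k in range(max(0, col1 - row2), min(row1, col1) + 1):
        p = prob(k)
        # Count every table that is no more likely than the observed one.
        if p <= observed * (1 + 1e-9):
            p_value += p
    return min(1.0, p_value)


def benjamini_hochberg(p_values: list[float]) -> list[float]:
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical counts: (yes, no) for group x, then (yes, no) for group y.
tables = {"question A": (30, 70, 10, 90), "question B": (48, 52, 45, 55)}
raw = [fisher_exact_two_sided(*cells) for cells in tables.values()]
for name, p_adj in zip(tables, benjamini_hochberg(raw)):
    print(f"{name}: adjusted p = {p_adj:.4f}")
```

A question would then be flagged as showing a significant interviewer effect when its adjusted p-value falls below the chosen false discovery rate, for example 0.05.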

Results

The effect sizes presented below are expressed as a probabilistic index P(x>y) (Acion et al. 2006); that is, the probability that a randomly selected individual interviewed by a Rohingya interviewer provides a higher rating than a randomly selected individual interviewed by a Bangladeshi interviewer. If there were no effect of interviewer ethnicity, we would expect these probabilities to be around 50%, meaning neither group is more likely to give a higher score than the other.
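As an illustration of how such an index can be computed, the sketch below compares every rating in one group with every rating in the other. It assumes that ties are counted as half a win, a common convention for ordinal data that makes the two directions sum to 100%; the text does not state how ties were handled. The function name and the example ratings are hypothetical.

```python
from typing import Sequence


def probabilistic_index(x: Sequence[float], y: Sequence[float]) -> float:
    """Estimate P(x > y) over all cross-group pairs, counting ties as one half."""
    wins = sum(
        1.0 if xi > yi else 0.5 if xi == yi else 0.0
        for xi in x
        for yi in y
    )
    return wins / (len(x) * len(y))


# Hypothetical 1-5 Likert ratings, not taken from the survey data.
x_ratings = [1, 2, 2, 3]  # x: respondents interviewed by Rohingya interviewers
y_ratings = [3, 4, 4, 5]  # y: respondents interviewed by Bangladeshi interviewers
print(probabilistic_index(x_ratings, y_ratings))
print(probabilistic_index(y_ratings, x_ratings))
```

With this tie convention the index equals the Mann-Whitney U statistic for x divided by the number of cross-group pairs, so it can be read directly alongside a Wilcoxon rank sum test.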

All four non-parametric multivariate tests show significant p-values, as presented in Table 2. Thus, our results show a clear significant difference between responses elicited by different interviewer groups.

Univariate results

Satisfaction / Dissatisfaction with aid services

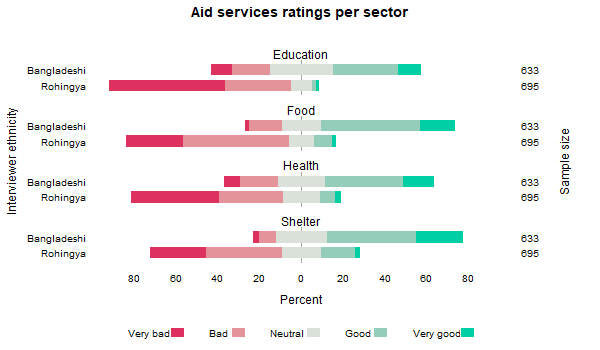

We see statistically significant and substantively large gaps between the perceptions collected by Bangladeshi and Rohingya interviewers for questions relating to satisfaction with aid services. People interviewed by Rohingya interviewers provide considerably more negative responses than people interviewed by Bangladeshi interviewers. Regarding education, food, health, and shelter services, the majority of people asked by Rohingya interviewers rated the quality of aid services either very bad or bad (between 63% and 87%), while the majority of those asked by Bangladeshi interviewers rated them good or very good.

Univariate Wilcoxon rank sum tests on each aid quality question show significant differences between the two interviewer groups (Table 3). Substantively, the differences between the groups are large—a randomly chosen person interviewed by a Rohingya interviewer has only between a 15% and 21% probability of giving a higher score (i.e., reporting higher levels of quality), compared to a 79–85% probability for a randomly chosen individual interviewed by a Bangladeshi interviewer.

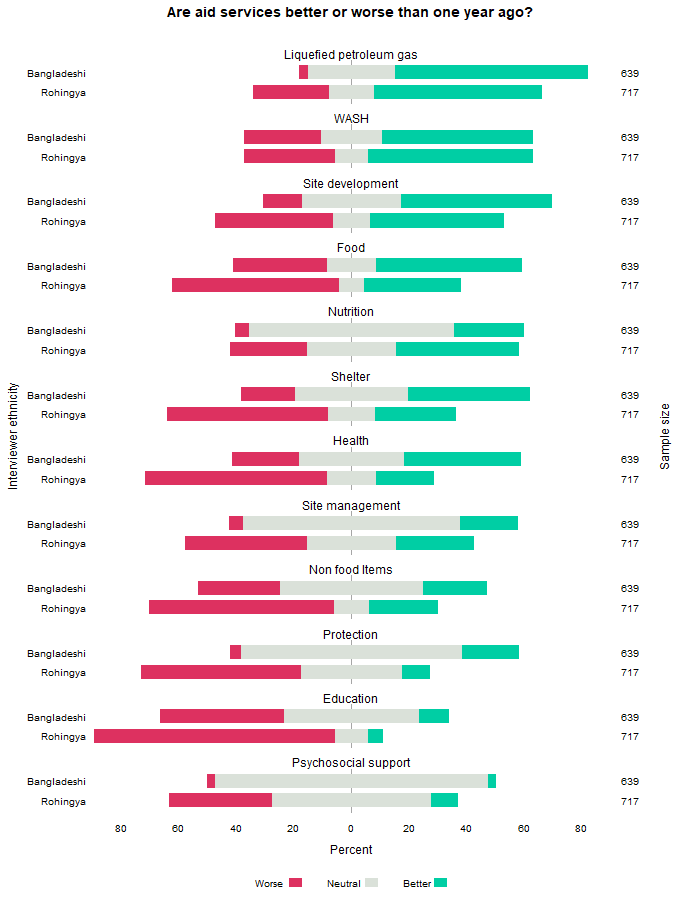

Level of improvement of aid services

Similarly, on questions addressing degradation or improvements in aid services, people asked by Bangladeshi interviewers are more positive than people interviewed by Rohingya (Figure 3) and are more likely to select the neutral option (except for water and sanitation [WASH] and nutrition services). These response patterns indicate a reluctance of the respondent to give a negative answer to Bangladeshi interviewers.

Univariate Wilcoxon rank sum tests on each improvement question show significant differences between the two interviewer groups for all but two questions (Table 4). Substantively, the question on improvements in protection services exhibits the largest difference between the groups—here, a randomly chosen individual interviewed by a Bangladeshi interviewer has a 76% probability of giving a higher score, compared to 24% for a randomly chosen individual interviewed by a Rohingya interviewer.

General perception questions

On general binary perception questions, the direction of the difference between interviewer groups depends on the sensitivity of the question, and whether certain responses would be more socially desirable than others vis-à-vis a Bangladeshi interviewer. Bangladeshi interviewers recorded higher levels of agreement (P(x>y)[3]=25–45%, p<0.0001) for questions which present humanitarian actors and the humanitarian response in a positive light.[4] The opposite is true for questions which examine issues reflecting negatively on the humanitarian response.[5] These questions show higher levels of agreement from individuals interviewed by Rohingya interviewers (P(x>y)=51–77%, p<0.0001). Univariate Fisher’s exact tests (Table 5) confirm that the difference is significant for 20 out of 24 questions.

The magnitude of the difference between the groups is particularly pronounced for questions relating to threatening or abusive behavior by humanitarians (see Figure 4). While 17% of those interviewed by a Rohingya interviewer (119 individuals) reported having witnessed someone in their community being threatened by a Bangladeshi humanitarian, no one reported this behavior to Bangladeshi interviewers. Similarly, 32% of people interviewed by a Rohingya interviewer reported witnessing rude behavior by Bangladeshi humanitarians, compared to only 2% of those interviewed by Bangladeshis. Contrary to our expectations, people interviewed by Rohingya interviewers were also more likely to report rude or threatening behavior by Rohingya humanitarians, though as seen in Figure 4, reports of threatening behavior by Rohingya humanitarians are few. This could be understood as a partial contradiction of hypothesis 2, since the effect appears for both Bangladeshi and Rohingya humanitarians. Alternatively, it may reflect greater comfort in reporting abuse to interviewers of the same ethnicity, regardless of the source of this behavior.

In summary, a clear difference between answers provided to the two interviewer groups can be observed. Out of the 40 questions asked, only six do not show a significant difference by interviewer ethnicity.[6]

Discussion and Conclusion

This study examines whether evidence of ‘ethnicity-of-interviewer’ effects can be found among survey responses by Rohingya refugees in Bangladesh, that is, whether responses to surveys collected by Bangladeshi interviewers are significantly different from those collected by Rohingya interviewers.

We find considerable evidence to support hypothesis 1, that Rohingya refugees report more positive opinions to Bangladeshi interviewers compared to Rohingya interviewers. When looking at perceived quality of humanitarian services (Figure 2), Rohingya refugees express far more positive views to Bangladeshi interviewers than to interviewers of their own ethnic group. Our findings are in line with previous research showing that questions which respondents relate directly to the interviewer may exhibit larger ethnicity-of-interviewer effects (see Adida et al. 2016; Heelsum 2013). The magnitude of the bias being greatest for questions relating to service quality is a clear indication that Rohingya refugees are less willing or comfortable criticizing services to Bangladeshi interviewers who can be thought of as representing the humanitarian response.

Hypothesis 2, that the difference in perception scores given to Rohingya and Bangladeshi interviewers will be greatest on questions relating to ethnicity or race, such as questions on abuse or mistreatment by particular ethnic groups, receives some support as well: we see large differences between groups for questions on negative relations between Bangladeshi humanitarians and Rohingya refugees, and no significant difference on questions relating to relations within the Rohingya community.[7] However, many questions which do not relate to race or ethnicity exhibited particularly large differences (see Table 5), and we see a significant difference on questions about rude or threatening behavior from Rohingya humanitarians as well. As such, hypothesis 2 cannot be confirmed.

Hypothesis 3, that Rohingya respondents interviewed by an interviewer from the same ethnic group will be more likely to admit to behavior perceived to be socially undesirable, such as selling aid to buy other items, can be confirmed. Some of the largest differences can be seen for questions relating to behavior that respondents may want to conceal, such as receiving or selling unwanted food items, and reporting issues with humanitarian aid (see Table 5).

Despite the statistically significant differences for many of the questions, it should be noted that the size of the effect, and therefore its substantive significance, is small for some questions. In particular, on the question “Do you receive shelter materials you don’t need?” and on questions about rudeness or threatening behavior from Rohingya humanitarians, people interviewed by Rohingya interviewers have only between a 52% and 57% probability of giving a higher score than someone interviewed by a Bangladeshi interviewer. We should therefore be cautious about any conclusions drawn regarding these questions.

The stark difference in responses to the majority of the survey questions demonstrates the extent to which response biases have affected our understanding of the needs, preferences, and experiences of Rohingya refugees in Cox’s Bazar thus far. Beyond the protection risks implied by the clear reluctance to speak out to Bangladeshi interviewers about abuse or mistreatment by humanitarian staff, humanitarians should also be concerned that Rohingya refugees appear less comfortable or willing to criticize the response to Bangladeshi interviewers. Ethnic strife in refugee contexts is not unique to Bangladesh and threatens to significantly affect the accuracy of data collected from refugee communities around the world. To collect accurate information, it is key to consider ethnicity properly and systematically as an influencing factor.

Acknowledgements

Contributors:

Christian Els conceptualized the study, devised the sampling strategy, conceptualized the statistical analysis plan, analysed the data, and drafted and revised the paper.

Hannah Miles conceptualized the statistical analysis plan, analysed the data, and wrote and revised the paper.

Cholpon Ramizova conceptualized the study, designed data collection tools with Rohingya partners, and monitored data collection.

All authors worked for Ground Truth Solutions at the time of publication. This research was undertaken as part of Ground Truth Solutions’ mission to make humanitarian aid more people-centred and to include the views of people affected by crisis in humanitarian programming.

We thank our partners from the International Organization for Migration’s Communication with Communities team, including Danny Coyle, who were instrumental in designing the survey questions. We also thank their Needs and Population Monitoring Unit for providing logistical and enumeration support. Their collective insight into the Rohingya community, including their linguistic and cultural norms, was crucial in creating a survey that would best capture their feedback.

We also thank our partners from Statistics without Borders, Tracey Di Lascio-Martinuk and Shalini Gopalkrishnan, who supported us in our analysis techniques. The time you took reviewing our approach and suggesting further avenues of analysis was greatly appreciated.

[1] Kobo Toolbox, which hosted 100,000 user accounts on its humanitarian server in the same period.

[2] ANOVA type, Wilks’ Lambda type, Lawley Hotelling type, and Bartlett Nanda Pillai type.

[3] x = Rohingya interviewer, y = Bangladeshi interviewer.

[4] Including questions such as whether refugees would approach aid workers to report sensitive complaints, can ask aid workers about aid services, or believe their opinions are considered in programming.

[5] This includes whether refugees observed extortion by aid workers, filed a complaint against aid agencies, or sold excess food aid.

[6] Two of these questions refer to whether an individual can borrow food or money from members of their own community in times of need. Despite the large difference we see concerning selling food aid, there was no significant difference on whether people sell shelter materials. Finally, there was no significant difference on the question “Last month, has anyone come to ask whether you have any problems with receiving aid?”.

[7] For example, whether Rohingya can borrow food or money from people within their community.