One of the most challenging aspects of polling public opinion on policy issues is crafting the actual questions. They must be able to elicit a range of responses without affecting the pre-existing knowledge base of the survey sample. For most policy questions, the concern is that the question will be skewed to one side or another. In many cases, “explanatory” wording is included to balance perceived bias in one part of the question.

For example, a question gauging support for a “jobs bill” may be seen as inherently positive. After all, who can be against job creation? A pollster can attempt to balance that by including information on the cost, e.g., “$450 billion jobs bill.” Adding that fact, though, can have an unintended effect on responses: it gives the survey sample information that the full population does not necessarily have. It is then unclear whether the pollster is measuring extant support for a particular policy or hypothetical support if the full population had this information at hand.

A question wording experiment run during the 2008 U.S. Senate race in New Jersey provides evidence that including factual information in a poll question can be consequential when trying to portray where the public stands on an issue. While this particular experiment did not focus on a policy issue per se, the implications for measuring public attitudes on policy issues are apparent.

Background

When incumbent U.S. Senator Frank Lautenberg decided to run for re-election in 2008, the candidate’s age was expected to be an issue in the campaign. He would turn 84 years old during the course of the campaign, making him the third oldest sitting senator at the time. So, it was not surprising that pollsters tracking the race included questions about perceptions of the candidate’s age in their surveys.

Interestingly, two polling organizations painted very different pictures of how salient this issue was to voters during the course of the campaign. A June 2008 Quinnipiac University Poll headline declared “Lautenberg Tops Zimmer, But Most Say He’s Too Old.” The survey analysis in the press release stated that “most voters say Sen. Lautenberg is too old to begin another six-year term” and that his age “continues to be a big issue.”

A Monmouth University Poll conducted in January 2008 presented a seemingly contrary result, specifically that “most voters don’t feel that the senator’s age is getting in the way of his job performance.”

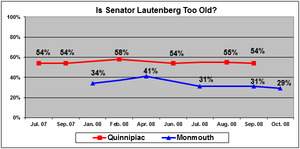

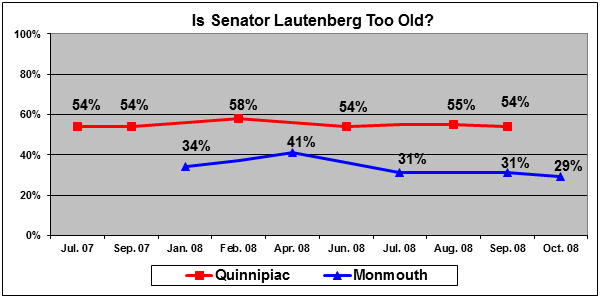

The difference between these two polling organizations was consistent throughout the entire campaign. Quinnipiac asked its “age” question six times from July 2007 to September 2008. The percentage who saw Lautenberg as too old had a very narrow range of 54% to 58%. The Monmouth question was asked five times from January to October 2008. The results were significantly lower and also had a wider range, from 29% to 41%.

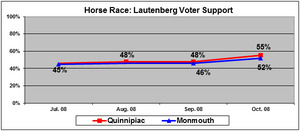

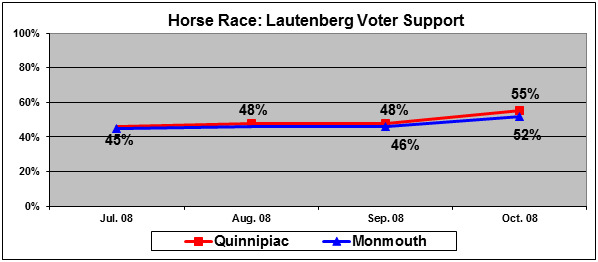

One possible source of this discrepancy is that the two polling organizations had different partisan samples. However, results for the standard “horse race” question showed that both had nearly identical vote shares for Lautenberg in each poll conducted.

The difference on the age question, therefore, was not simply a matter of house effects. Both polls claimed to give evidence of how Sen. Lautenberg’s age factored into voters’ thought process. But they presented starkly different pictures – either a majority felt Lautenberg was too old or a majority felt he was not.

There was something more significant at work. The source of the discrepancy seemed to lie in the question wording. Quinnipiac asked: “At age 84, do you think Frank Lautenberg is too old to effectively serve another six year term as United States Senator, or not?” Monmouth asked: “Do you agree or disagree that Frank Lautenberg is too old to be an effective senator?”

Other than differences in response options, the Quinnipiac question included two elements that the Monmouth question did not. It specifically directed respondents to think about the senator’s effectiveness throughout a six year term and it informed respondents of the senator’s age.

Methods

In July 2008, the Monmouth University Polling Institute began a series of question wording experiments to determine what impact, if any, the pollsters’ differences in question wording had on the results. The polls were conducted using Monmouth’s standard statewide election polling methodology at the time – a random digit dialing (RDD) sample of at least 700 self-reported registered voters.

Each experiment focused on a different element of the questions – specifically mentioning Lautenberg’s age and asking poll respondents to think about his age in relation to serving out a six-year term. The question variants were asked of a random-split half of the sample. Each half was balanced demographically to account for any differences in partisan leanings.
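A random split-half design of this kind can be sketched in a few lines. The following is a minimal illustration, not the Polling Institute's actual procedure: the function name `split_half`, the form labels "A" and "B", and the fixed seed are all assumptions made for the example, and the sketch does not reproduce the demographic balancing described above.

```python
import random

def split_half(respondent_ids, seed=0):
    """Randomly assign respondents to question form 'A' or 'B' in equal halves.

    Hypothetical helper for illustration only; the seed makes the
    assignment reproducible.
    """
    rng = random.Random(seed)
    ids = list(respondent_ids)
    rng.shuffle(ids)                      # random order removes any list-order pattern
    midpoint = len(ids) // 2
    # First half of the shuffled list gets form A, the rest get form B.
    return {rid: ("A" if i < midpoint else "B") for i, rid in enumerate(ids)}

# Example: a sample of 700 respondents, the minimum size cited in the text.
assignment = split_half(range(700))
form_a_count = sum(1 for form in assignment.values() if form == "A")
form_b_count = sum(1 for form in assignment.values() if form == "B")
```

Because assignment is random, any difference between the two halves' answers can be attributed to the wording variant rather than to who happened to be asked which version, within sampling error.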

Results

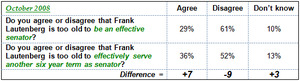

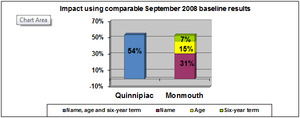

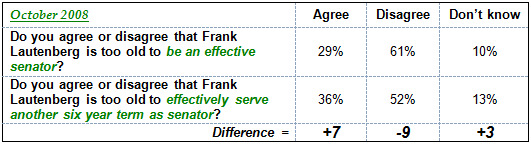

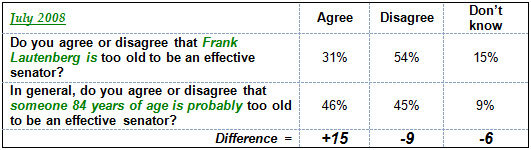

In one poll, Monmouth asked half the sample its standard Lautenberg age question, i.e., whether Lautenberg was too old to be an effective senator. The other half was asked whether Lautenberg was too old to effectively serve another six year term. Directing respondents to consider Lautenberg’s ability to serve out a full Senate term increased agreement that he was too old by a net 7 percentage points.

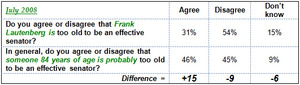

In a separate poll, Monmouth again asked half the sample its standard Lautenberg age question. The other half, however, was asked to evaluate whether a generic 84 year old candidate would be too old. In this instance the net effect of mentioning the actual age was a sizable 15 percentage points.

The 2008 election provided another opportunity to test the impact of including a candidate’s age. The Republican nominee for President, John McCain, also faced questions about his age. Asking about a generic 72 year old presidential candidate versus the candidate with no age mentioned produced a 7 point difference on the age question – about half the effect found for an 84 year old Senate candidate.

Perhaps including a candidate’s actual age in the question wording becomes more problematic the older the candidate is? It is also possible that more voters simply had pre-existing knowledge of presidential candidate John McCain’s age than they did of incumbent Senator Frank Lautenberg’s – one was discussed as an issue throughout the campaign while the other was not.

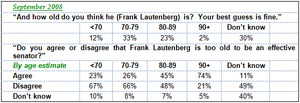

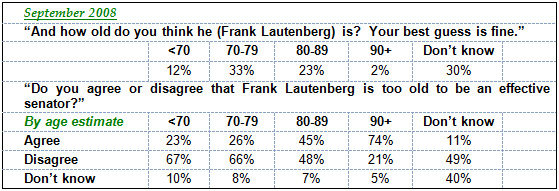

To assess this pre-existing knowledge base, we conducted a separate poll that did not include any split half questions. However, after posing the standard “Lautenberg is too old” question to the entire sample, we asked respondents to estimate Lautenberg’s age. Only 1-in-4 came close, estimating him to be at least 80 years old. The average estimate was 75 years old, nearly a decade younger than the senator’s actual age. And 3-in-10 admitted they did not know.

Importantly, those who thought the senator was 80 or older were nearly twice as likely as those who thought he was younger to tell us they felt Lautenberg was too old to be an effective senator. The level of concern was even lower for those voters who said they had no idea what his age was.
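The comparison described here amounts to a cross-tabulation of the "too old" response by the respondent's estimate of the senator's age. A minimal sketch of that tabulation follows. The function names and the three bucket labels are assumptions for illustration, and `toy_records` is made-up placeholder input used only to exercise the code; it is not the survey data and its values carry no meaning.

```python
from collections import defaultdict

def age_bucket(estimate):
    """Classify a respondent's estimate of the senator's age (None = did not know)."""
    if estimate is None:
        return "don't know"
    return "80 or older" if estimate >= 80 else "under 80"

def too_old_share_by_bucket(records):
    """Return the share agreeing 'too old' within each age-estimate bucket.

    records: iterable of (age_estimate_or_None, agrees_too_old) pairs.
    """
    totals = defaultdict(int)
    agrees = defaultdict(int)
    for estimate, agrees_too_old in records:
        bucket = age_bucket(estimate)
        totals[bucket] += 1
        agrees[bucket] += int(agrees_too_old)
    return {bucket: agrees[bucket] / totals[bucket] for bucket in totals}

# Made-up placeholder records, NOT survey results.
toy_records = [
    (84, True), (82, False),
    (75, False), (70, True), (72, False), (68, False),
    (None, False), (None, False),
]
shares = too_old_share_by_bucket(toy_records)
```

In a real analysis the records would also carry survey weights, which this sketch omits.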

Conclusions

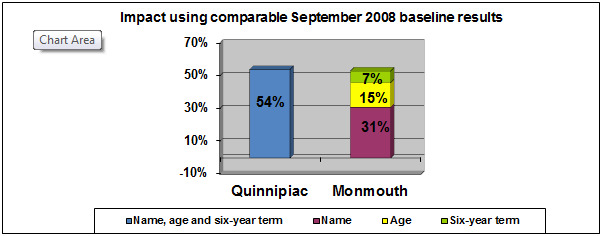

The cumulative effect of directing respondents to consider the length of a Senate term and including Lautenberg’s age in the question wording appears to produce the false result that a “majority” of New Jersey voters felt Lautenberg was too old.

There can be reasonable differences on whether some voters may actually consider the full length of a Senate term before casting their vote. However, this framing issue accounted for only one-third of the net difference between the Quinnipiac and Monmouth results. More importantly, informing the sample of Lautenberg’s actual age skewed the results in a way that no longer reflected what the population of voters actually felt about Lautenberg’s age, but rather how they might have felt if everyone were aware of his age. In reality, most voters did not consider his age to be an issue, either because they underestimated his age or because they simply did not know what it was and the issue was not salient.
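The one-third figure can be checked against the two effects reported in the Results section. The arithmetic below is a rough sketch that assumes the two effects, which were measured in separate polls, can simply be added together; the variable names are illustrative.

```python
# Net effects reported in the Results section, in percentage points.
term_framing_effect = 7    # asking about serving "another six year term"
age_mention_effect = 15    # mentioning the age of 84

# Assumption: the two effects are additive, although they came from separate polls.
combined_effect = term_framing_effect + age_mention_effect

# Share of the combined effect attributable to the six-year-term framing.
framing_share = term_framing_effect / combined_effect
print(f"combined: {combined_effect} points, framing share: {framing_share:.0%}")
```

Under that assumption the combined effect is 22 points, of which the term framing contributes roughly 32%, or about one-third, with the age mention supplying the rest.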

As noted earlier, the trend for the Monmouth poll question demonstrated more variance than the Quinnipiac question. This was largely attributable to a higher 41% reading in April 2008. At the time, Lautenberg was facing an unusual primary challenge from a Democratic Congressman who was making Lautenberg’s age an issue in the race. Lautenberg won that contest in June, and his Republican opponent decided not to make the incumbent’s age a campaign issue. The slight increase measured in the Spring Monmouth poll seems to have captured an actual increase in voter awareness of Lautenberg’s age in response to campaign messages. Once the primary was over and those messages disappeared from the public debate, voter concern about Lautenberg’s age returned to a stable 3-in-10 level. The Quinnipiac question did not capture these shifts in public attitudes.

While this experiment did not focus on public support for a policy issue, the implications for polling on that subject matter are clear. During the 2008 New Jersey campaign, media reported both polls’ results, but tended to give greater weight to the more sensational storyline that Lautenberg’s age was “a big issue” for voters, when in fact most voters did not know how old he was. Similarly, gauging public support for “the $450 billion jobs bill” is different from asking about “the jobs bill.” Is the purpose to measure what the population currently thinks about the proposed jobs bill based on what they know about it? [Which should include a question about how much they have actually heard about it, by the way.] Or is the purpose to measure what public opinion might be if everyone knew about its cost?

There are a number of strategies for obtaining measures of both extant and informed opinion, but they require using a lot of real estate on survey questionnaires. This requires resources which few media-oriented pollsters have at their disposal or are willing to expend on a single issue. In any event, the media that disseminate these results also lack the expertise – and sometimes the willingness – to cast a critical eye over how questions are worded. They take pollsters at their word that the questions mean what we claim them to mean. Thus, it is crucial that pollsters carefully phrase the analysis of their findings based on the way they framed the questions and honestly disclose the limitations of what they asked.

The bottom line is this: if you are measuring the potential salience of factual information on opinion formation, then be forthright about what you are doing. If, on the other hand, you wish to tap extant opinion representative of a larger population, make sure your question does just that. How pollsters present their findings has as much impact on the public debate as the questions and results themselves, if not more.

Suggested Citation

Murray, Patrick. 2011. “A Question of Age: Measuring Attitudes about an Unknown Fact.” Survey Practice, December. www.surveypractice.org.

Acknowledgements

Data in this paper was originally included as a part of a poster presentation at the 64th annual AAPOR conference, prepared with the assistance of Tim MacKinnon, Bloustein Center for Survey Research, Rutgers University.