Background

The US presidential election polls were widely criticized for “getting it wrong” in 2016. Moreover, polling errors in the national estimates of Democratic candidate support were not corrected in 2020 despite significant changes in polling techniques (AAPOR Task Force on 2020 Pre-Election Polling 2021). Indeed, 93% of national polls overstated the Democratic candidate’s support among voters in 2020, compared to 88% in 2016 (Kennedy et al. 2021).

One explanation offered for the 2016 errors was that some Trump voters did not reveal themselves as Trump voters in the pre-election polls, and they outnumbered late-revealing Clinton voters (Enten 2016). This parallels the “shy voter” explanation for the underestimate of the Tory vote in British pre-election polls (Mellon and Prosser 2017). The so-called Shy Trump effect assumes a misrepresentation of voting intent by Trump-leaning respondents in pre-election polls. However, tests of this theory yielded little evidence to support it (AAPOR Ad Hoc Committee on 2016 Election Polling 2017; Dropp 2016; Kennedy et al. 2018).

A similar underestimation of Trump support occurred in 2020 election polling (Cohn 2020; Matthews 2020; Page 2020). While an AAPOR review of election polling concluded the error was not caused by respondents’ reluctance to admit they supported Trump (Byler 2020), the report noted it was plausible that Trump supporters were less likely to participate in polls overall. Considering the decreasing trust in institutions and polls especially among Republicans (Cramer 2016), the AAPOR report concluded that self-selection bias against Trump voters in polls was plausible but could not be directly evaluated without knowing how nonresponders voted.

The present study explores whether another type of “shy respondent” may be responsible for the underrepresentation of Trump support in these pre-election polls. The original purpose of the project from which we draw our data was to study the motivations and barriers to survey participation rather than explore “shy respondents” as the source of error in the pre-election polling estimates. However, the data allow us to explore three elements that would seem, collectively, to provide a preliminary answer to that question. First, is there a segment of the adult population who avoid participating in surveys (“shy respondents”) but who do participate in elections, large enough to make a difference in a pre-election poll? Second, is the underrepresentation of this segment likely to be corrected by post-stratification weights? Third, although we do not know the presidential candidate preference of these respondents, do their characteristics suggest that they would be more likely to support a populist, anti-establishment candidate rather than a more traditional one? Collectively, the answers to these questions should provide preliminary evidence of whether shy respondents might explain the underrepresentation of Trump voters in the 2016 and 2020 pre-election polls.

We posit a nonresponse bias associated with a psychological or behavioral predisposition, rather than demographics or party affiliation. Hence, it is not likely to be corrected by demographic weighting. Our first research question is whether a segment of the population reports a predisposition to avoid surveys but usually votes in elections. Further, if that segment is underrepresented in pre-election polls, is it large enough to make a difference in estimates of candidate support?

The three elements in this “shy respondent” hypothesis are testable using surveys even if we assume the predisposition affects the propensity to respond to surveys. However, the suggestion that decreasing trust in institutions and polls may be a factor in the underrepresentation of Trump voters in polls should influence the choice of methods for this study. The traditional approach of disclosing sponsorship in pre-election polls by news media, universities, and other institutions, as well as the topic and purpose of the surveys, may contribute to a selection bias against more alienated and anti-institutional segments of the public. Further, such individuals might be less likely to respond to typical survey appeals based on social utility and civic responsibility.

To explore motivations and barriers to survey participation among the public, we chose a sample from a commercial nonprobability panel which relies on financial incentives rather than social utility or civic appeals to obtain respondents. Hence, the sample is less likely to be self-selected towards the civic minded and against more alienated and anti-institutional individuals. Moreover, panelists do not expect the disclosure of survey topic or sponsorship, which allows us to avoid a self-selection bias related to topic or sponsor.

Our research focus is on the relationship of “survey shyness” to survey participation and voting, not population estimates of the size of a “shy” population. We believe that a nonprobability sample is “fit for purpose” as a proof-of-concept study of the “shy respondent” and its relationship to survey participation and voting. This approach provides preliminary findings necessary to determine whether more extensive research is warranted.

Survey Instrument

The web survey instrument was designed to explore propensity to respond to surveys under a variety of conditions, but not specifically the “shy” respondent problem. These conditions included the importance of the survey topic to the respondent, the sponsor, the mode of the survey, survey length, and incentives. The survey also covered potential covariates of propensity to respond to surveys identified in the research literature. All measures including likelihood of participating in surveys and frequency of voting are based on respondent self-reports.

The attitude questions were drawn from the 2009 Census Barriers, Attitudes and Motivators Survey (CBAMS), which was designed to optimize audience targeting, messaging and creative development for the 2010 Census Integrated Communications campaign (Macro International 2009) but adapted for a study of survey motivators and barriers. One of these items was “I prefer to stay out of sight and not be counted in government surveys.” This measure has at least face validity as a proxy for a psychosocial predisposition against survey participation.

We avoided including “not sure” and “prefer not to answer” as response categories in most of the measures used for this study. This includes the “shyness” measure and the other 13 items in the psychographic battery, the survey participation questions, the voting frequency questions, the topic importance questions, and the demographics other than household income. These items all have valid answers for all 1,937 adults who completed the survey. The questions concerning participation in surveys by topic importance were limited to respondents who rated at least one topic as important (n=1,880) or not important (n=924).

Sample and Target Population

Respondents were drawn from a highly rated consumer panel (MFour) including more than 2 million persons, recruited from an ongoing multisource process with panel authentication and fraud-detection measures. The panel does not provide a comprehensive population frame or support probability samples for the general population due to the nature of panel enrollment. Nonetheless, the panel is designed to provide national nonprobability samples of adults that are geographically and demographically diverse.

The panel profile includes each panel member’s zip code, age, gender, race/ethnicity, and education. Consequently, the panel methodology allows efficient targeting of a geographically and demographically representative sample from the total panel. A census-balanced sample of 6,095 adult panel members was drawn, and a survey notification was sent to their MFour smartphone app. Three reminders were sent to nonrespondents. Respondents received four dollars for completing the survey. A total of 1,937 interviews were completed between July 28, 2018, and August 31, 2018. The interview, conducted in English, averaged approximately 20 minutes in length. Respondents were given a “Prefer not to Answer” button for sensitive questions. The survey participation rate was 31.8% (AAPOR RR 1).
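The reported participation rate can be reproduced from the two counts given above. The sketch below is only a simple ratio of completed interviews to sampled panel members; it assumes every sampled member counts in the denominator, and the function name `participation_rate` is hypothetical.

```python
def participation_rate(completes: int, invited: int) -> float:
    """Completed interviews as a share of sampled panel members.

    Assumption: all invited panel members count in the denominator.
    """
    if invited <= 0:
        raise ValueError("invited must be positive")
    return completes / invited


# Counts taken from the text: 1,937 completes out of 6,095 sampled members.
rate = participation_rate(completes=1937, invited=6095)
print(f"{rate:.1%}")  # prints 31.8%
```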

Statistical Analyses

Statistical analysis was conducted using SAS and IBM SPSS Statistics 22 software. Bivariate analyses of the key dependent and independent variables include mean ordinal scores and domain proportions. Comparisons of means were based on nonparametric statistical tests to determine significance. Confidence intervals were calculated based on nonparametric bootstrap using 1,000 replicate samples selected with replacement. Correlations between the psychographic ordinal scales were calculated based on Spearman’s rank correlation. Three-way tables were used to analyze the relationship between shyness, likelihood of survey participation, and frequency of voting. Other multivariate analyses did not seem to be necessary for this study given our specific research questions and the available measures.
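To illustrate the interval estimation described above, the following is a minimal sketch of a nonparametric bootstrap for a mean ordinal score, using 1,000 replicates drawn with replacement. The percentile method, the function name `bootstrap_ci`, the seed, and the example scores are assumptions made for illustration only. The text does not state which bootstrap interval was used, and the analysis itself was run in SAS and SPSS, not Python.

```python
import random
from statistics import mean


def bootstrap_ci(values, n_replicates=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean.

    Assumption: a percentile interval; the source only says
    'nonparametric bootstrap using 1,000 replicate samples'.
    """
    if not values:
        raise ValueError("values must not be empty")
    rng = random.Random(seed)
    n = len(values)
    # Each replicate resamples n observations with replacement.
    replicate_means = sorted(
        mean(rng.choices(values, k=n)) for _ in range(n_replicates)
    )
    lower_idx = round((alpha / 2) * (n_replicates - 1))
    upper_idx = round((1 - alpha / 2) * (n_replicates - 1))
    return replicate_means[lower_idx], replicate_means[upper_idx]


# Hypothetical four-point agreement scores (1 = strongly disagree,
# 4 = strongly agree); these are NOT the study data.
scores = [1] * 10 + [2] * 40 + [3] * 35 + [4] * 15
low, high = bootstrap_ci(scores)
print(f"mean={mean(scores):.2f}, 95% CI=({low:.2f}, {high:.2f})")
```

Fixing the seed keeps the sketch reproducible; a real analysis would report the resampling settings alongside the intervals.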

Findings: Survey Shyness as a Predisposition

Respondents were asked a series of 14 agree/disagree questions about survey participation (Table 1). One item seemed to provide an appropriate measure of “shyness” in the context of survey participation: “I prefer to stay out of sight and not be counted in government surveys.” More than a third of the sample agreed (30%) or agreed strongly (7%). The average score on the four-point scale was 2.34.

Bivariate correlations (Spearman) show a significant association between shyness and the other 13 attitudinal measures. The strongest correlations were with “the government already has my personal information” (0.52); “I don’t see if it matters if I answer” (0.48); “Government surveys are an invasion of privacy” (0.48); and “It takes too long to fill out government surveys. I don’t have the time” (0.46). Staying out of sight is also moderately correlated with “I am concerned that the information I provide will be misused” (0.37) and “refusing to fill out government surveys is a way for people to show that they don’t like what the government is doing” (0.29). The correlations with these items suggest that staying out of sight might be associated with a sense of government distrust and alienation. The correlations with the other attitudes toward surveys were weak (0.24 or less).
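For readers unfamiliar with the statistic, the sketch below shows one common way to compute Spearman’s rank correlation for ordinal items: assign average ranks to tied values, then take the Pearson correlation of the ranks. The function names and the example responses are hypothetical, and this is not the SAS or SPSS procedure used in the study.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value is tied with this one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho computed as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (sx * sy)


# Hypothetical four-point responses to two items; NOT the study data.
shyness = [1, 2, 2, 3, 3, 4, 4, 1]
privacy = [1, 1, 2, 3, 4, 4, 3, 2]
print(round(spearman_rho(shyness, privacy), 2))
```

Four-point items produce many ties, which is why the sketch uses average ranks instead of the shortcut formula based on squared rank differences.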

Shyness and Survey Participation

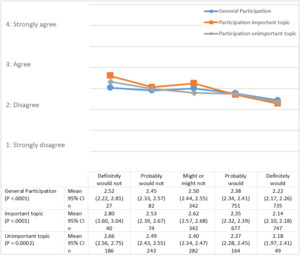

Respondents were asked a series of questions about their likelihood of participating in surveys with response options of “definitely would,” “probably would,” “might or might not,” “probably would not,” and “definitely would not.” First, respondents were asked a general question: “Not counting Surveys on the Go, if you were contacted to conduct a survey on an interesting topic that was not unreasonably burdensome, how likely would you be to participate?” Seventy-eight percent of respondents said they definitely or probably would respond.

Subsequently, they were asked about a topic they rated as “important” previously in the survey: “If you were contacted this week to participate in a government survey about [important topic], how likely would you be to participate?” Seventy-six percent of respondents said they were likely to respond to a survey on the important topic. The same question was then repeated but substituting a topic that the respondent previously rated as unimportant. Fewer people stated they were likely to participate in a survey for a topic they reported as unimportant (63%).

For all three questions, the mean “shyness” score trends downward from a range of 2.5–2.8 for those who “definitely would not” to a range of 2.1–2.2 for those who “definitely would” participate (Figure 1).

Topic Importance

As seen above, willingness to participate in specific surveys may be modified by survey topic. Respondents were asked how important it would be to participate in government surveys on a range of topics (Groves et al. 2004). Among all respondents, Voting and Elections has the lowest mean topic importance score (3.07) compared to Child Care (3.12), Nutrition and Physical Activity (3.19), Medicare and Aging (3.29), Issues facing the Nation (3.29), Health and Disease (3.30), and Education (3.37). The topic importance of Voting is even lower among those with higher shyness scores. Moreover, the importance of surveys of Voting and Elections is substantially lower for “shy respondents” (2.81) than others (3.23), and the difference in importance of Voting and Elections compared to other topics increases with shyness (Figure 2). This suggests that “shy respondents” would be less likely, not more likely, to participate in a survey on elections and voting, such as a pre-election poll.

Shyness and Voting

Shyness is also associated with reported voting frequency. On the question of how often respondents vote in presidential elections, the mean shyness score declines from 2.50 among those who “never” vote to 2.24 among those who “always” vote. A similar pattern is found for congressional and party primary elections (Figure 3).

Although “shyness” is associated with the frequency of voting, a very substantial proportion of those who are likely to vote in elections are “shy” respondents. Half of those who agree or strongly agree that they prefer to stay out of sight and not be counted in surveys (51%) say that they “always” vote in presidential elections. Among the 1,436 “likely voters” who say that they always or nearly always vote in presidential elections, 474 strongly agree or agree that they prefer to stay out of sight and not be counted in surveys. So, “shy respondents” represent a third (33%) of “likely voters” for presidential elections in this sample. However, only 138 of these “likely voters” are “shy respondents” who would not participate in a survey on an interesting topic that was not unreasonably burdensome (Table 2). Hence, “shy respondents” with a low propensity to respond to surveys represent about 10% of “likely voters” for presidential elections in this sample. Further, “shy respondents” represent nearly half (46%) of “likely voters” who are not likely to participate in a survey.
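The two shares of “likely voters” quoted above follow directly from the counts in the text. The short sketch below reproduces that arithmetic; the variable and function names are hypothetical, and only counts stated in the paragraph are used. The 46% figure depends on a Table 2 count not given in the text, so it is not recomputed here.

```python
def share(part: int, whole: int) -> float:
    """Return part as a proportion of whole."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return part / whole


# Counts taken from the text.
likely_voters = 1436          # always or nearly always vote for president
shy_likely_voters = 474       # of those, agree they prefer to stay out of sight
shy_unlikely_to_respond = 138  # of those, would not participate in a survey

print(f"{share(shy_likely_voters, likely_voters):.0%}")        # prints 33%
print(f"{share(shy_unlikely_to_respond, likely_voters):.0%}")  # prints 10%
```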

Demographic and Psychographic Characteristics of Shy Voters

Survey design can correct for some forms of nonresponse error by poststratification weighting. However, this requires the source of the error to be highly correlated with known population parameters, such as demographics. Unfortunately, among “likely voters,” there are few significant demographic differences between “shy respondents” who are unlikely to do surveys (our shy voters) and other voters. The educational difference (fewer college graduates) is the only one large enough to be statistically significant in this sample (Table 3). Small differences may not be significant as a result of the size of the “shy respondent” subsample (n=138) in this survey, but only large differences would suggest demographic weighting would correct underrepresentation.
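The logic of this argument can be shown with a toy calculation. The sketch below is a hypothetical illustration, not an analysis of the study data: every number in it is invented to keep the arithmetic easy to follow, and the “outcome” is an unspecified attitude, not candidate preference. It assumes shy respondents are underrepresented equally within each education cell, so poststratifying on education repairs the imbalance between cells but leaves the within-cell gap untouched.

```python
# All values are hypothetical and chosen only for illustration.
CELL_POP_SHARE = {"college": 0.40, "no_college": 0.60}  # population shares
SAMPLE_SIZE = {"college": 500, "no_college": 300}       # respondents per cell
SHY_SHARE_POP = 0.10     # shy share within every cell of the population
SHY_SHARE_SAMPLE = 0.02  # shy share within every cell of the sample
OUTCOME_RATE = {         # share holding some attitude of interest
    ("college", "shy"): 0.70, ("college", "other"): 0.30,
    ("no_college", "shy"): 0.70, ("no_college", "other"): 0.50,
}


def cell_mean(cell, shy_share):
    """Expected outcome in a cell for a given mix of shy and other members."""
    return (shy_share * OUTCOME_RATE[(cell, "shy")]
            + (1 - shy_share) * OUTCOME_RATE[(cell, "other")])


def poststratification_weights(sample_size, pop_share):
    """Weight per cell = population share / sample share."""
    total = sum(sample_size.values())
    return {c: pop_share[c] / (sample_size[c] / total) for c in sample_size}


def estimate(sample_size, weights, shy_share):
    """Weighted mean of the outcome across cells."""
    num = sum(weights[c] * sample_size[c] * cell_mean(c, shy_share)
              for c in sample_size)
    den = sum(weights[c] * sample_size[c] for c in sample_size)
    return num / den


weights = poststratification_weights(SAMPLE_SIZE, CELL_POP_SHARE)
unweighted = estimate(SAMPLE_SIZE, {c: 1.0 for c in SAMPLE_SIZE}, SHY_SHARE_SAMPLE)
weighted = estimate(SAMPLE_SIZE, weights, SHY_SHARE_SAMPLE)
truth = sum(CELL_POP_SHARE[c] * cell_mean(c, SHY_SHARE_POP) for c in CELL_POP_SHARE)
print(f"unweighted={unweighted:.4f} weighted={weighted:.4f} truth={truth:.4f}")
```

In this toy setup the weighted estimate moves toward the population value but does not reach it, because the remaining error sits inside the weighting cells. That is the situation the paragraph describes when the predisposition is only weakly related to demographics.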

By contrast, there is a substantial difference between shy voters and other “likely voters” on attitudes other than shyness. Shy voters are less likely than other “likely voters” to agree that it is important for everyone’s opinion to be counted in surveys, that surveys show they are proud of who they are, that it is their civic responsibility, and that surveys inform government about what their community needs. Unfortunately, these are among the most common appeals for survey participation.

Additionally, our shy voters are more likely to agree with measures suggesting powerlessness, political distrust, and alienation. They are significantly more likely than other “likely voters” to agree that government surveys are an invasion of privacy, that it does not matter if they respond to government surveys, and that refusing to participate in government surveys is a way to show that they do not like what the government is doing. They are also less likely to agree that government agencies’ promises of confidentiality can be trusted.

Discussion

The underestimation of the Trump vote in pre-election polls has led to concerns about the accuracy and reliability of surveys. A nonresponse problem with voters who preferred Trump has been cited as a plausible explanation. If psychosocial predispositions were the source of a self-selection bias in these samples, then it might not be corrected by traditional demographic and party identification weighting.

This analysis was conducted first to explore whether there are “shy respondents” who prefer to stay out of sight, who have a lower propensity to participate in surveys but are still likely to vote. To explore this hypothesis, the study used a paid nonprobability panel as a method more likely to recruit “shy respondents.” This proof-of-concept study was designed to explore whether there was sufficient evidence from a fit-for-purpose convenience sample to justify the resources for more rigorous but expensive studies.

More than a third of respondents in this survey agree that they prefer to stay out of sight and not be counted in surveys. This shyness measure was related to a lower stated propensity to participate in surveys, including surveys on voting and elections. Nonetheless, a majority of these “shy respondents” say that they always or almost always vote in presidential elections. Overall, we find that about one in ten “likely voters” in this sample are “shy respondents” who are not likely to participate in surveys: the true shy voters. Consequently, the size of the population segment who avoid surveys, but regularly vote, is large enough to impact estimates from pre-election polls, satisfying our first condition. The differences in demographics between shy voters and other “likely voters” are generally slight, so demographic weighting is unlikely to correct this underrepresentation, satisfying our second condition.

Unfortunately, we do not know respondents’ candidate preferences, so we cannot directly test the association between shyness and Trump support. However, these “shy respondents” are suspicious of government, not motivated by civic engagement, and less likely than others to participate in surveys sponsored by government or universities. The relationship between survey shyness and alienated and anti-institutional attitudes suggests these respondents are likely to be disproportionately more populist than traditionalist in their political preferences, which satisfies our third condition. Collectively, these findings suggest that it is plausible that shy respondents might explain the underrepresentation of Trump voters in the 2016 and 2020 pre-election polls.

This is a proof-of-concept study which has a number of limitations. The questionnaire was designed to study motivations and survey participation, not shy voters specifically. Hence, we have a single measure available as a proxy for survey shyness. Additional measures related to survey shyness would permit greater understanding of the construct and exploration of its dimensions. Further, the response categories for our key measure are structured as an agree/disagree scale. Research suggests that measures are more reliable using an item-specific response format rather than an agreement scale (Dykema et al. 2022; Saris et al. 2010). An additional limitation is that we do not have a direct measure of candidate preference, so we had to rely on indirect measures of populist preference.

We recognize that survey estimates of voting are usually higher than official turnout figures, which is usually attributed to social desirability bias. An experiment to test that hypothesis found that an “item count technique” significantly reduced voting estimates in a national telephone survey compared to direct self-reports in the survey. However, in eight national Internet surveys, the item count technique did not significantly reduce voting reports (Holbrook and Krosnick 2010). Hence, while we do not know whether shy voters might be more or less vulnerable to social desirability bias, we would expect the self-administered mode of the survey to minimize the bias for all respondents.

Finally, we used a commercial nonprobability panel in order to increase the likelihood of recruiting disaffected and shy respondents, who may be more likely to respond to financial incentives than appeals to social utility. A limitation of nonprobability samples is that projection of survey estimates to the general population is not supported by statistical models based on probability samples. However, survey estimates based on nonprobability samples are not necessarily biased or unrepresentative.

We believe the approach was appropriate for a proof-of-concept study to examine the relationship of shyness to survey participation and voting. We believe that the results support further research on “shy respondents” ideally using larger, probability samples but with appropriate appeals and incentives necessary to capture socially and politically alienated respondents, including more comprehensive measures of “shyness” as well as political orientation and candidate preference. This would also permit more sophisticated multivariate analyses of the impact of shy respondents on survey participation. This research is potentially important to the broader issue of nonresponse bias in surveys, generally, not just pre-election polls.
