Introduction

The direction in which a response scale is presented to respondents is found to affect the resultant answers, leading to scale direction effects (Yan and Keusch 2015). Scale direction effects are observed in telephone surveys (e.g., Yan and Keusch 2015), face-to-face surveys (e.g., Carp 1974), web surveys (e.g., Garbarski, Schaeffer, and Dykema 2015; Keusch and Yan 2018), and paper questionnaires (e.g., Höhne and Krebs 2018). Research on scale direction effects demonstrates a stronger impact of scale direction for longer scales than for shorter scales (Yan, Keusch, and He 2018), for items asked early in a survey than for items asked later (Carp 1974; Yan, Keusch, and He 2018), and for items that cannot be easily coded as either attitudinal or behavioral than for items that are clearly attitudinal (Yan, Keusch, and He 2018). In addition, respondents with less knowledge of the question topic and speeders who went through the survey too fast tend to show stronger scale direction effects than respondents with more knowledge and non-speeders (Keusch and Yan 2018; Yan and Keusch 2015).

This paper attempts to examine which scale direction is more difficult and cognitively more burdensome for respondents to process and to use, using eye-tracking technology, for two specific scales. The answer to this question has important implications for questionnaire design. If the findings indicate that a particular direction is more difficult for respondents, question writers should avoid using that direction. Furthermore, this paper illustrates how eye-tracking and, in particular, pupil dilation measures can be used to investigate cognitive difficulty and burden associated with answering survey questions.

Eye-tracking Methodology

In an eye-tracking study, respondents’ eye movements are tracked by infrared cameras while they are reading and answering survey questions. These cameras record where people look and how long they look. As a result, eye-tracking provides a direct window into respondents’ survey response process (Galesic and Yan 2011). Fixation count and fixation duration are typical measures of attention and cognitive processing in eye-tracking studies (Galesic and Yan 2011). More and longer fixations on a survey question indicate that the question places a higher cognitive demand on respondents and requires a longer survey response process than a question incurring fewer and shorter fixations, signaling that the question is burdensome and difficult to answer. For example, Lenzner and colleagues (2011) showed that text features that reduce the readability of survey questions (such as complex syntax and ambiguous nouns) incurred longer fixation times and more fixations. Clifton, Staub, and Rayner (2017) reported longer fixations on difficult words than on easy ones. Kamoen et al. (2011, 2017) combined fixation count and duration with rereading (also captured by eye-tracking) and found that negative questions are reread longer and more often, indicating that negative questions pose more burden for respondents.

Task-evoked pupillary responses (TEPRs) captured by eye-tracking can also be used to understand the difficulty and cognitive burden of answering survey questions. Typically, pupils begin dilating immediately after a stimulus is presented and constrict, either gradually or immediately, after the task is completed (Beatty 1982; Eckstein et al. 2017). This process is automatic and involuntary. Larger pupil dilations are found for more difficult tasks across a wide range of tasks such as short-term memory (e.g., Kahneman and Beatty 1966), arithmetic operations (e.g., Hossain and Yeasin 2014), sentence comprehension and document editing (e.g., Just and Carpenter 1993), and visual search (Attar, Schneps, and Pomplun 2016). Despite their great potential as a tool to evaluate the cognitive burden of survey items, TEPRs are not widely used in the survey context. Only two studies have empirically explored the use of pupil dilation in evaluating cognitive burden. Neuert (2020) compared pupil dilation, fixation time, and fixation count between problematic versions and improved versions of six survey items, hypothesizing larger dilations, longer fixations, and more fixations for the problematic survey items than for the improved versions. Although dilations were generally larger for the problematic items than for the improved versions, the difference between the two question versions achieved statistical significance at the .10 level for only three items. By contrast, significantly longer fixation durations were found under the problematic condition for all six items at the .10 level. Furthermore, the problematic items induced significantly more fixations than the improved versions for three items. She concluded that pupil dilation was less effective than the two fixation measures in identifying problematic survey items. Yan et al. (2016) compared average pupil dilations and peak dilations across 24 questions. These questions were classified as “hard” or “easy” based on breakoff rates observed in earlier web surveys. Yan et al. (2016) found that hard questions, which had elicited higher breakoff rates in earlier web surveys, elicited larger dilations than easy questions. In addition, questions that respondents self-reported to be more difficult produced larger dilations than questions that had a lower rating on difficulty. Yan et al. (2016) also showed that peak dilations were more likely to occur at response options for hard questions and at question stems for easy questions.

This paper used two dilation measures (average dilation and peak dilation) together with two fixation measures (fixation count and fixation duration) to examine which scale direction is more difficult for survey respondents and to identify specific components of scales posing the greatest burden for respondents. Since survey literature has shown that scale direction plays a crucial role in the formation of answers to attitudinal items but not to behavioral items (Carp 1974; Keusch and Yan 2019), I examined two types of scales in this paper: a satisfaction scale commonly used for attitudinal items and a frequency scale for behavioral items. An experiment was implemented to randomly assign respondents to scales that run either in an ascending order (e.g., from “very dissatisfied” to “very satisfied” for the satisfaction scale and from “never” to “very often” for the frequency scale) or in a descending order (from “very satisfied” to “very dissatisfied” or from “very often” to “never”). Everything else about the scale (e.g., scale labels and number of scale points) as well as the interview setting was held constant across the experimental conditions. Given the experimental set-up, I hypothesize that the scale direction eliciting larger dilations, more fixations, and longer fixations is more difficult for respondents to process and to use than the scale direction incurring smaller dilations, fewer fixations, and shorter fixations.

Data and Methods

Data

Data used in this paper are from an eye-tracking study conducted in January 2016. A total of 20 respondents from the Washington DC metropolitan area were invited to come to the Westat lab in Rockville. Respondents had a mixed background with regard to age, gender, education, and race and ethnicity. Upon arriving in the lab, respondents were asked to complete a web survey while wearing the ASL mobile eye-XG eye-tracking glasses, which record eye-tracking videos. The web survey includes 34 target questions and displays one question per screen (see Figure 1 in the Supplementary Materials for an example of how survey questions are displayed on a computer screen).

A scale direction experiment was embedded in the web survey, varying the direction of two scales: a satisfaction scale and a frequency scale. Both scales are presented vertically on the computer screen. The satisfaction scale is a 5-point fully labeled scale, running from “very satisfied” to “very dissatisfied” for a random half of the respondents (descending order) and from “very dissatisfied” to “very satisfied” for the other random half (ascending order). Five survey items used this satisfaction scale, asking respondents to rate their satisfaction with health, diet, the grocery store where they shop most often, their neighborhood, and the city they live in.

The frequency scale is also a 5-point fully labeled scale, ranging from “Very Often” to “Never” for a random half (descending order) and “Never” to “Very Often” for the other half (ascending order). Eight items employed this scale and asked respondents how often in the past 12 months they drank beer, wine or wine coolers, liquor or mixed drinks, coffee (caffeinated or decaffeinated), iced tea (caffeinated or decaffeinated), milk, orange juice, or apple juice.

Eye-tracking Measures

As a standard practice for eye-tracking research, I first created four areas of interest (AOIs) on each computer screen: question stem, top two response options, middle response option, and bottom two response options (see Figure 2 of the Supplementary Materials for how AOIs were designated). For each survey item involved in the scale direction experiment, I computed two fixation measures. Fixation count is the number of fixations on a specific AOI and one fixation is approximately 100 or more milliseconds of attention. Fixation duration is the total duration of all fixations (in seconds) on a specific AOI. Fixation count and fixation duration are computed over the full question-answering process (fixations on all four AOIs), on the full scale (fixations on the three scale-related AOIs), and on specific parts of the scale—the top two scale points, the bottom two scale points, and the scale midpoint.
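The two fixation measures can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's actual processing pipeline: the record layout (an AOI label paired with a duration in seconds), the AOI names, and the `fixation_measures` helper are all hypothetical, and the example values are made up.

```python
from collections import defaultdict

# Hypothetical AOI labels mirroring the four areas described above.
SCALE_AOIS = ("top_two", "midpoint", "bottom_two")
ALL_AOIS = ("stem",) + SCALE_AOIS


def fixation_measures(fixations):
    """Return (fixation count, total duration in seconds) per AOI,
    for the full scale, and for the overall question-answering process.

    `fixations` is an iterable of (aoi_label, duration_seconds) pairs for
    one respondent answering one survey item (assumed layout).
    """
    count = defaultdict(int)
    duration = defaultdict(float)
    for aoi, seconds in fixations:
        if aoi not in ALL_AOIS:
            continue  # skip fixations outside the designated AOIs
        count[aoi] += 1
        duration[aoi] += seconds

    result = {aoi: (count[aoi], duration[aoi]) for aoi in ALL_AOIS}
    # Full scale: the three scale-related AOIs.
    result["full_scale"] = (
        sum(count[a] for a in SCALE_AOIS),
        sum(duration[a] for a in SCALE_AOIS),
    )
    # Overall question-answering process: all four AOIs.
    result["overall"] = (
        sum(count[a] for a in ALL_AOIS),
        sum(duration[a] for a in ALL_AOIS),
    )
    return result


# Made-up fixations for one respondent on one item (illustration only).
example = [("stem", 0.25), ("stem", 0.5), ("top_two", 0.25),
           ("midpoint", 0.125), ("bottom_two", 0.5), ("bottom_two", 0.25)]
measures = fixation_measures(example)
```

Keeping the per-AOI values and the two aggregates in one dictionary makes it straightforward to compare the same measure at each level of analysis across the two scale directions.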

To compute dilation measures, I compared the pupil diameter recorded at each fixation to a base pupil diameter and then divided the difference by the base pupil size, resulting in a relative dilation expressed as the percentage of increase in pupil size over the base pupil size. For each respondent, the base pupil size is the minimum pupil diameter recorded throughout the web survey. Although this base pupil size could potentially inflate dilation measures, it makes it possible to compare relative dilation across all survey items and across all individuals. For each respondent, average dilation is the mean of relative dilations over all fixations on an AOI. I computed average dilations for the full question-answering process for each survey item, and for fixations on the full scale, and on specific parts of the scale. Peak dilation is the maximum dilation observed during the period of answering each survey item for each respondent.
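The dilation computation described above can be sketched as follows. The data layout (pairs of AOI label and pupil diameter per fixation), the function names, and the numeric values are assumptions introduced for illustration; only the formula, relative dilation = (diameter - base) / base with the base set to the respondent's minimum diameter over the whole survey, comes from the text.

```python
def relative_dilation(diameter, base):
    """Proportional increase in pupil size over the base pupil size."""
    return (diameter - base) / base


def dilation_measures(item_fixations, base):
    """Average dilation per AOI, plus peak dilation and where it occurred.

    `item_fixations` is a list of (aoi_label, pupil_diameter) pairs for one
    respondent on one survey item (assumed layout). `base` is that
    respondent's minimum pupil diameter across the entire web survey.
    """
    by_aoi = {}
    peak_value, peak_aoi = None, None
    for aoi, diameter in item_fixations:
        rel = relative_dilation(diameter, base)
        by_aoi.setdefault(aoi, []).append(rel)
        if peak_value is None or rel > peak_value:
            peak_value, peak_aoi = rel, aoi
    average = {aoi: sum(v) / len(v) for aoi, v in by_aoi.items()}
    return average, peak_value, peak_aoi


# Made-up diameters (arbitrary units) for one respondent.
all_survey_diameters = [4.0, 5.0, 4.5, 4.0, 4.25]
base = min(all_survey_diameters)  # respondent-specific base pupil size

item = [("stem", 4.0), ("top_two", 5.0), ("top_two", 4.5), ("bottom_two", 4.0)]
average, peak_value, peak_aoi = dilation_measures(item, base)
```

Multiplying the returned proportions by 100 gives the percentage increase over the base pupil size. Because the base is the survey-wide minimum, every relative dilation is non-negative.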

Results on Satisfaction Scale

Table 1 displays the means of average dilations, fixation counts, and fixation durations by scale direction. The descending satisfaction scale starting with “very satisfied” incurred marginally significantly larger dilations than the ascending scale beginning with “very dissatisfied” for the overall question-answering process (p=.08) and fixations on the full scale (p=.07). The average number and duration of fixations did not differ by scale direction for the overall question-answering process or for the full scale.

In terms of differential attention to and processing of specific scale AOIs, the satisfied options incurred significantly larger dilations (p=.003) and more fixations (p=.01) when they were presented first than when they came last. Respondents had significantly more fixations (p=.03) and longer fixations (p=.04) on the dissatisfied options under the ascending condition than under the descending condition.

Regardless of scale direction, dissatisfied options incurred significantly larger dilations and received significantly more and longer fixations than the other parts of the scale. Take the ascending scale as an example. Average dilation is 17% when respondents processed the dissatisfaction options, but only 6% for neutral and 4% for satisfied options. Respondents had 5.6 fixations on dissatisfied options, and 1.0 on neutral and 2.4 on satisfied options. Respondents also spent two more seconds on dissatisfied options than on neutral and satisfied options. It seems that dissatisfied options are cognitively more difficult for respondents than neutral and satisfied options. The same trend is observed for the descending scale condition.

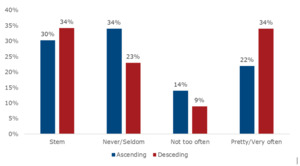

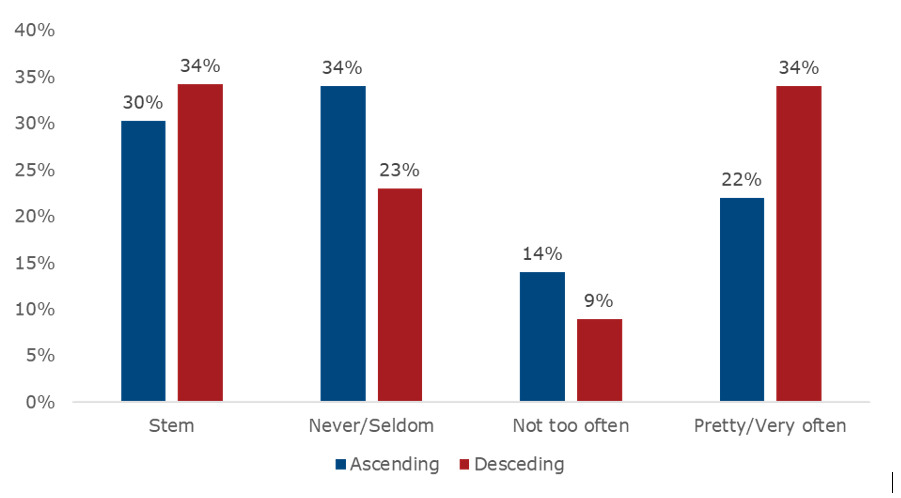

Peak dilation pinpoints the location where respondents struggled the most. Figure 1 plots the percentage of respondents who had peak dilation on different AOIs. About 60% of respondents had peak dilation when fixating on dissatisfied options regardless of scale direction. By contrast, around 10% of peak dilations occurred at the question stem. This again suggests that the dissatisfied options posed the greatest challenge for respondents. Furthermore, the satisfied options incurred more peak dilations (20%) when they were presented earlier in the descending order than in the ascending order (4%).
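The tabulation behind a plot of this kind can be sketched as below. The input format (one AOI label per peak dilation) and the `peak_aoi_shares` helper are hypothetical, and the labels in the example are invented rather than taken from the study's data.

```python
from collections import Counter


def peak_aoi_shares(peak_aois):
    """Percentage of peak dilations that fall on each AOI.

    `peak_aois` holds one AOI label per peak dilation, e.g. one per
    respondent-item pair within a scale direction (assumed unit).
    """
    counts = Counter(peak_aois)
    total = len(peak_aois)
    return {aoi: 100.0 * n / total for aoi, n in counts.items()}


# Invented labels for illustration only.
peaks = ["dissatisfied", "dissatisfied", "dissatisfied", "stem"]
shares = peak_aoi_shares(peaks)
```

Running the same tabulation separately for the ascending and descending conditions would yield the two sets of percentages that such a figure compares.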

Results on Frequency Scale

Unlike for the satisfaction scale, the amount of dilation does not differ by the direction of the frequency scale for the overall question-answering process or for the processing and use of the full scale, as shown in Table 2. The ascending scale condition elicited significantly more and longer fixations than the descending scale condition for the overall question-answering process (fixation count: p=.001, fixation duration: p=.0004) and for the processing of the full scale (fixation count: p=.003, fixation duration: p=.03).

In addition, the “Never” and “Seldom” options incurred significantly larger dilations (p=.02), more fixations (p<.0001), and longer fixations (p<.0001) when they were presented first in the ascending order than when they were presented last in the descending order. The two high frequency options (“Pretty Often” and “Very Often”) incurred significantly larger dilations (p=.002) under the descending condition than under the ascending condition.

Regardless of scale direction, scale options closer to the start of the frequency scale incurred significantly larger dilations and received significantly more and longer fixations than those in the latter part of the scale.

Furthermore, about one third of peak dilations occurred on a question stem, whereas another one third peaked at the first two scale options regardless of scale direction, as shown in Figure 2. This is very different from the satisfaction scale. For questions using satisfaction scales, two thirds of peak dilations occurred at dissatisfaction options. In addition, the first two scale options had 10% more peak dilations than the last two scale options, regardless of scale direction.

Discussion

This paper illustrates how eye-tracking can be used to explore which scale direction is more difficult for respondents to process and to use, holding other scale features constant. Four eye-tracking measures are examined. Average pupil dilation and peak dilation are used to assess the amount of cognitive effort required of respondents; larger dilations and more peak dilations indicate larger cognitive demand and greater cognitive difficulty. Fixation count and fixation duration are used to quantify the amount of attention and cognitive processing with longer fixations and more fixations speaking to longer processing. Two scales were examined in this eye-tracking study. Both scales are fully labeled 5-point scales that are presented vertically on the computer screen.

For the satisfaction scale, there is no difference in fixation count and fixation duration in the processing of the full scale by scale direction. However, pupils dilated more for the descending satisfaction scale than for the ascending order, suggesting that the descending scale required more effort and presented greater difficulty for respondents. In particular, the two satisfaction options incurred larger dilations and more fixations when presented first in the descending order than in the ascending order. As a result, an ascending order is recommended for the satisfaction scale.

By contrast, average pupil dilation when processing the full frequency scale did not differ by scale direction. However, the descending frequency scale led to fewer fixations and shorter fixations than the ascending scale. Furthermore, the differences in attention to and effort on the two ends of the scale are smaller for the descending order than for the ascending order. These findings collectively indicate that the descending frequency scale is somewhat easier for people to use than the ascending scale; consequently, a descending order is recommended for the frequency scale.

More importantly, this paper found that scale points required different amounts of effort and attracted varying amounts of attention. For the satisfaction scale, regardless of scale direction, the two dissatisfaction options incurred larger dilations, more peak dilations, more fixations, and longer fixations than the two satisfaction options. These findings indicate that dissatisfaction options took more effort and longer time to process than the other parts of the scale. However, for the frequency scale, scale points incurred larger dilations, more fixations, and longer fixations when they were presented earlier than when they came later, consistent with predictions by the satisficing notion. It seems that respondents processed and used the two scales differently.

This paper demonstrates again the utility of eye-tracking to provide a direct window to the survey response process. Eye-tracking technology records eye movements as respondents undergo the question-answering process. Fixation measures are commonly used to understand response mechanisms, to explain observed responses, and to identify problematic questions. This paper illustrates the use of a less commonly used metric that is readily obtained from eye-tracking software. The results support the potential and benefits of using pupil dilation measures to learn what is difficult and cognitively burdensome for respondents and to pinpoint where exactly respondents struggle in the survey response process. Pupil dilation measures complement the usual fixation measures in understanding how respondents process and use response scales. Consequently, the dilation measures have a great potential for question testing and evaluation and are recommended to measure and monitor response burden (Yan and Williams 2022).

Pupil dilation measures can be used alone to identify questions or response options susceptible to problems and warranting further investigation. As shown earlier, the dissatisfaction options elicited much larger dilations and more peak dilations than the other options of the scale, which calls for a revisit to these scale labels. Pupil dilation measures can also be combined with other question testing and evaluation techniques. For instance, cognitive interviews could be conducted to understand the root cause of the difficulty respondents seemed to have with the dissatisfaction options: is it because respondents had trouble understanding the meaning of dissatisfaction, or is it because respondents had difficulty mapping their experience to the dissatisfaction options?

This study only looked at two particular scales (a satisfaction scale and a frequency scale), and the findings do not extend to other scales such as the commonly used agreement scale. However, the eye-tracking technique and pupil dilations can be readily and easily applied to other types of response scales, other questions, other question formats, and even other design formats (e.g., a grid, a scroll design where multiple questions are displayed on one screen). Future research should continue exploring the effectiveness of dilation measures to test and evaluate survey questions.

The paper has two limitations. First, the study was conducted on 20 respondents. Although such a small sample size is not uncommon for qualitative research, it did limit the study’s power to detect small differences by scale direction or by different parts of a scale. Second, this paper only examined which scale direction required longer processing. I did not try to link processing with the quality of answers provided. As longer processing does not automatically or necessarily lead to answers of better quality (e.g., Yan et al. 2015), I encourage future research to examine the relationship between fixations, dilations, and data quality.