INTRODUCTION

In questionnaire evaluation research, cognitive testing is often viewed as the gold standard methodology. It is a generally low-cost method that can reliably predict problems in the field (Tourangeau et al. 2019), and as a result, it is often the default method chosen when evaluating new and existing survey questions. However, there are benefits to conducting a multimethod evaluation. Multiple methods can be used to draw on the strengths of different methodologies in providing corroborating evidence of problems (Blair et al. 2007; Creswell and Poth 2018; Forsyth, Rothgeb, and Willis 2004; McCarthy et al. 2018) or in producing different, but complementary findings (Maitland and Presser 2018; Presser and Blair 1994; Tourangeau et al. 2019; Willimack et al. 2023). Selection of an evaluation method is often driven by the time and costs associated with the method (Tourangeau et al. 2019). Conducting a multimethod evaluation is typically expensive and can require multiple years of testing (McCarthy et al. 2018; Tuttle, Morrison, and Galler 2007), which can prohibit its use.

In 2020, the National Agricultural Statistics Service (NASS) received feedback from stakeholders regarding the validity of data collected for the Grain Stocks publication. This Principal Federal Economic Indicator publication reports grain and oilseed stocks stored in each state and by position (on-farm and off-farm) (National Agricultural Statistics Service 2023). Data for this publication are collected from two multimode surveys of two different populations. At the time, NASS had limited staff and budget for testing, and travel was prohibited due to the COVID-19 pandemic. Although a large-scale multimethod evaluation was not feasible, NASS still recognized the benefit of using multiple methods to address stakeholder concerns.

To determine which methods to use, NASS considered three factors: the goals of the research, the survey modes, and the costs associated with different evaluation methods. Two surveys are used to produce the Grain Stocks publication: the Agricultural Survey and the Grain Stocks Report (GSR). The Agricultural Survey is a sample survey of farm producers that collects data on crop acreage, yield and production, and quantities of grain stored on farms. The GSR is a census of commercial facilities with rated storage capacity and measures grain stored off-farm. Stakeholders were concerned that stored grain was being double-counted across these two surveys. If double-counting were occurring, it would more likely be due to misreporting in the Agricultural Survey; however, NASS felt it was important to evaluate the GSR as well.

It is important to consider the survey mode(s) when evaluating questionnaires, because different modes can contribute to different types of response error. Data for the Agricultural Survey are primarily collected via paper and computer-assisted telephone interviewing (CATI), whereas data for the GSR are primarily collected via the web and electronic submission of files. Cognitive testing is an effective method for identifying semantic problems in survey questions in any mode, but it is not effective at identifying interviewer problems, which are more likely to be uncovered using behavior coding (Presser and Blair 1994). In web surveys, visual design and functionality (e.g., edit checks) can affect respondents’ understanding of the survey questions and their ability to provide valid responses, and usability testing is an effective method for evaluating this mode (Geisen and Romano Bergstrom 2017; Hansen and Couper 2004). Combined usability and cognitive testing is an efficient way to identify issues with both questionnaire wording and design (Romano Bergstrom et al. 2013).

NASS had resources in place that made it cost-effective to select methodologies suited to evaluating different survey modes. Before the pandemic, NASS had established procedures for conducting cognitive and usability testing remotely. Additionally, all CATI calls in NASS call centers are recorded, making behavior coding a feasible option. Four methods were selected to evaluate these two surveys: cognitive testing, usability testing, behavior coding, and expert review. This paper demonstrates the benefits of this small-scale multimethod survey evaluation.

METHODS

Agricultural Survey

Cognitive Interviewing

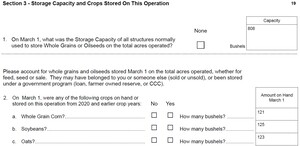

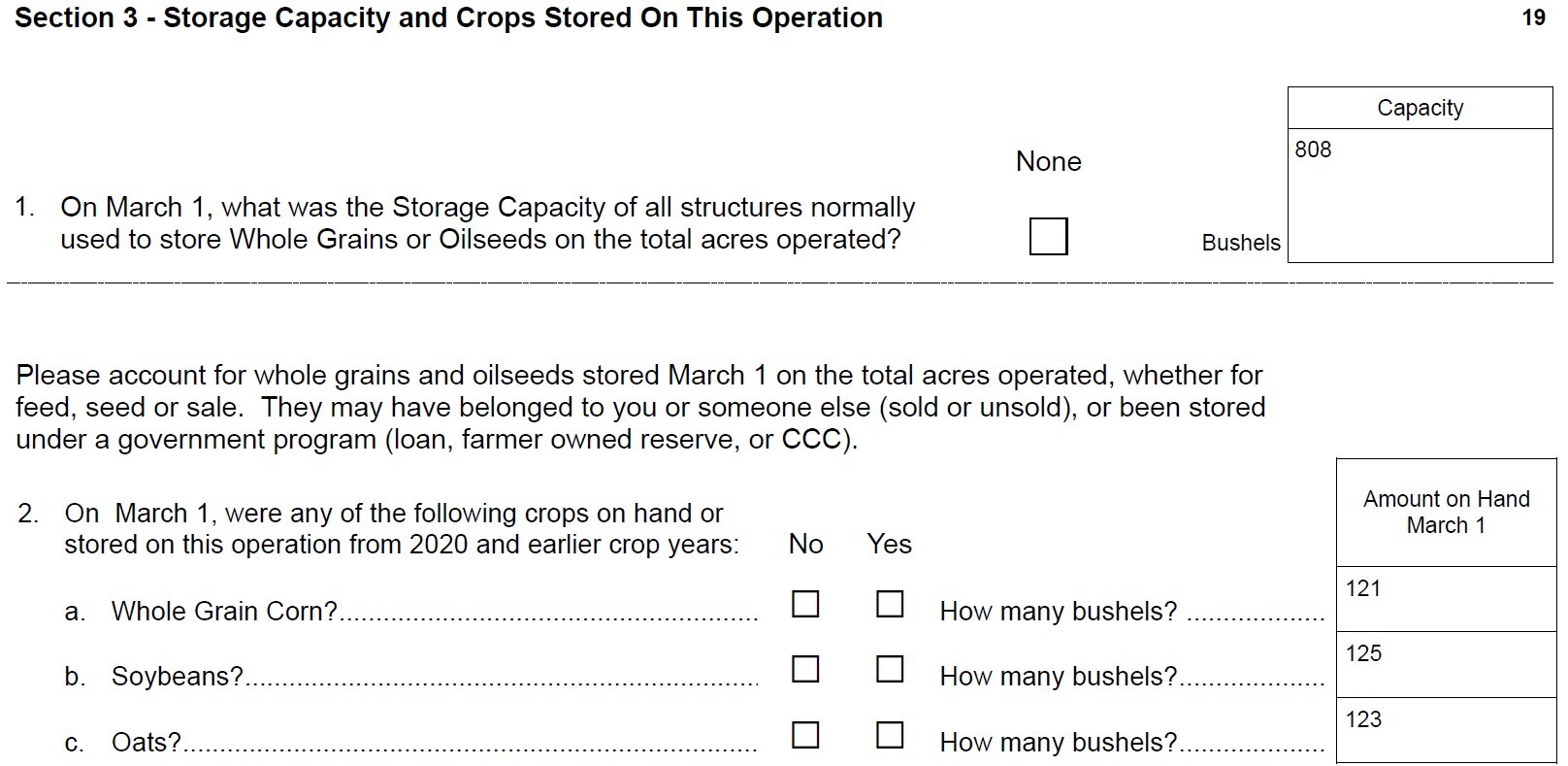

In March 2021, 16 cognitive interviews were conducted remotely with respondents from farms that had on-farm grain storage. Testing focused on the Storage Capacity and Crops Stored on This Operation section (see Figure 1). Interviewers used retrospective probing to understand respondents’ response processes (Willis 2005). Data were analyzed using the constant comparative method (Strauss and Corbin 1990).

Behavior Coding

Behavior coding was performed using recordings from the December 2019 and March 2020 Agricultural Surveys. Because centralized call centers closed during the COVID-19 pandemic, these were the most current recordings available. Twenty interviews from the December 2019 and 40 interviews from the March 2020 Agricultural Surveys were selected. Questions in the Storage Capacity and Crops Stored on This Operation section and the Unharvested Crops section were coded, resulting in 201 coded question administrations for December and 333 for March.

Interviewer behavior was coded using six possible codes (see Table 1); the codes failure to verify, major change, and question omitted were considered problematic interviewer behavior. Respondent behavior was coded using ten possible codes (see Table 2); problematic respondent behavior included the codes qualified answer, clarification, verification no response, incorrect format, interrupted, response to intro text, and refused. Problematic behavior occurring in more than 15% of administrations is indicative of a problem (Fowler 2011). Two researchers trained in behavior coding coded the interviews, and Cohen’s kappa was calculated to assess intercoder reliability. The overall kappa was 0.9394, indicating almost perfect agreement between the two coders (Landis and Koch 1977).
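Cohen’s kappa compares the observed agreement between two coders with the agreement expected by chance, kappa = (p_o - p_e) / (1 - p_e). As a minimal sketch, assuming each question administration receives exactly one interviewer code from each coder, kappa and the Landis and Koch (1977) agreement bands can be computed in Python as follows. The function names and the toy code sequences are hypothetical and illustrative only; in particular, the labels "exact" and "minor change" are assumed placeholders, not the study’s Table 1 codes, and this is not NASS’s processing code.

```python
from collections import Counter
from typing import Sequence

# Landis and Koch (1977) bands: (upper bound of kappa, descriptive label).
LANDIS_KOCH_BANDS = [
    (0.20, "slight"),
    (0.40, "fair"),
    (0.60, "moderate"),
    (0.80, "substantial"),
    (1.00, "almost perfect"),
]


def cohens_kappa(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Cohen's kappa for two coders who each assign one nominal code per item."""
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("both coders must code the same non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items given the same code by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: for each code, product of the two coders' marginal proportions.
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when both coders use one identical code")
    return (p_o - p_e) / (1.0 - p_e)


def landis_koch_label(kappa: float) -> str:
    """Map a kappa value to its Landis and Koch (1977) descriptive label."""
    if kappa < 0:
        return "poor"
    for upper, label in LANDIS_KOCH_BANDS:
        if kappa <= upper:
            return label
    return "almost perfect"  # guard against floating-point values slightly above 1


# Hypothetical codes for six question administrations (illustrative only).
coder_a = ["exact", "exact", "major change", "question omitted", "exact", "minor change"]
coder_b = ["exact", "exact", "major change", "exact", "exact", "minor change"]

kappa = cohens_kappa(coder_a, coder_b)
print(f"kappa = {kappa:.3f} ({landis_koch_label(kappa)})")
```

With these toy sequences the coders agree on five of six administrations (p_o = 30/36) while chance agreement is p_e = 14/36, so kappa = 16/22, about 0.727, which Landis and Koch would label "substantial." Agreement corrected for chance is lower than raw percent agreement because the coders both use "exact" frequently.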

GSR

Combined Usability and Cognitive Testing

In July 2021, NASS conducted five remote combined usability and cognitive testing interviews with commercial grain facilities. During the interviews, interviewers observed respondents completing the web survey and used retrospective probing to understand respondents’ response processes (Willis 2005). Cognitive interview data were analyzed using the constant comparative method (Strauss and Corbin 1990).

Review of Electronic Submission of Files

Methodologists met with statisticians in the regional field offices (RFOs) to learn more about the electronic submission process. During these meetings, methodologists gained insight into the need for this mode and reviewed files submitted electronically to NASS and procedures used to process these data.

RESULTS

Agricultural Survey

Cognitive Testing Results

Because grain and oilseeds are moved off-farm throughout the year for sale or for storage in off-farm facilities, respondents must comprehend and adhere to the instructions and key clauses in the questions regarding the reference date and the type and location of storage facilities to provide accurate answers. Cognitive testing found no major issues with comprehension of the survey questions. Respondents reported on-farm grain storage capacity and quantities stored, and they properly excluded grain stored off-farm in commercial facilities. Although all respondents understood the questions as asking about grain stored on-farm, some indicated that the phrase “on hand or stored” in the grain storage question could be interpreted as including grain stored both on- and off-farm, and that the phrase “on this operation” was essential to understanding the question. Respondents adhered to the reference date in the questions, but some noted that their answers would have been different had they not. Finally, a few respondents did not read the introductory text and incorrectly omitted grain that they were storing for others on their operation.

Cognitive testing demonstrated that respondents interpreted the survey questions as intended, and it underscored the importance of reading survey questions in full. If response error were present in the survey data, it was not likely due to question wording. However, a limitation of cognitive testing is that it does not reflect how respondents complete questionnaires during production. Establishment respondents often complete surveys during working hours, when they have competing demands and limited time. Additionally, cognitive testing was performed only on the paper questionnaire; because a large portion of the data are collected via CATI, an examination of the CATI questionnaire was warranted. Cognitive testing of CATI questionnaires is not always ideal, as cognitive interviewers are trained to read survey questions exactly as worded, which may not occur in production. Behavior coding provided an opportunity to evaluate the CATI instrument and to substantiate known problems or identify different ones as the questions were administered in production (Fowler 2011).

Behavior Coding Results

In December 2019 and March 2020, interviewers made major changes to survey questions in half of the question administrations and omitted entire survey questions at high rates (21% and 25%, respectively). Because NASS interviewers are trained to use conversational interviewing, it is not surprising that they did not adhere precisely to the survey script. However, the behavior coding revealed that key phrases in the survey questions, such as the reference date and “on the acres operated,” were often omitted. Given that interviewers were using conversational interviewing, it is possible they had established, before asking the questions, that the survey concerned grain stored on-farm on the reference date. However, a closer examination revealed that in some interviews (20% of interviews in 2019 and 33% of interviews in 2020), interviewers never read these key phrases in any of the question administrations, nor were these criteria conveyed earlier in the survey. Despite the high levels of problematic interviewer behavior, there was little indication that respondents were having difficulty answering the survey questions. The only indication of a problem was in December 2019, when respondents provided a response in an incorrect format at a high rate (30%). This was due to interviewers changing crop storage questions from open-ended numeric questions to yes/no questions.
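The rates above are shares of question administrations that received a given code, judged against the criterion that problematic behavior in more than 15% of administrations signals a problem (Fowler 2011). A minimal Python sketch of that tabulation follows, assuming each administration is recorded as a set of codes so that a code is counted at most once per administration. The problematic-code lists are taken from the coding scheme described in the Methods; the function names, the "exact" placeholder label, and the ten toy administrations are hypothetical and are not NASS data.

```python
from collections import Counter

# Problematic codes as listed in the behavior coding scheme (Methods section).
PROBLEM_INTERVIEWER_CODES = {"failure to verify", "major change", "question omitted"}
PROBLEM_RESPONDENT_CODES = {
    "qualified answer", "clarification", "verification no response",
    "incorrect format", "interrupted", "response to intro text", "refused",
}
PROBLEM_THRESHOLD = 0.15  # Fowler (2011): flag rates above 15%


def code_rates(administrations: list[set[str]]) -> dict[str, float]:
    """Share of administrations in which each code was assigned at least once."""
    if not administrations:
        raise ValueError("no administrations to tabulate")
    counts = Counter(code for codes in administrations for code in codes)
    return {code: count / len(administrations) for code, count in counts.items()}


def flag_problems(rates: dict[str, float], problem_codes: set[str],
                  threshold: float = PROBLEM_THRESHOLD) -> dict[str, float]:
    """Keep only problematic codes whose rate exceeds the threshold."""
    return {code: rate for code, rate in rates.items()
            if code in problem_codes and rate > threshold}


# Hypothetical interviewer codes for ten administrations ("exact" is an assumed
# placeholder for a non-problematic reading; illustrative only).
interviewer_codes = (
    [{"exact"}] * 5 + [{"major change"}] * 3
    + [{"question omitted"}] + [{"failure to verify"}]
)
rates = code_rates(interviewer_codes)
print(flag_problems(rates, PROBLEM_INTERVIEWER_CODES))
```

In this toy data only "major change" (3 of 10 administrations, 30%) exceeds the threshold; "question omitted" and "failure to verify" each occur in 10% of administrations and are not flagged. A rate of exactly 15% is also not flagged, matching the "more than 15%" wording.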

Behavior coding allowed NASS to evaluate the CATI questionnaire, the performance of the questionnaire in a production setting, and interviewer effects. The behavior coding revealed few respondent issues; however, a limitation of behavior coding is that respondent issues can be difficult to identify when responses appear plausible, as was the case in this study. The large number of changes interviewers made to the survey questions was concerning, but interviewer changes to survey questions do not necessarily lead to response error (Dykema, Lepkowski, and Blixt 1997). Additional research would be needed to determine whether interviewer changes led to response error in the survey data. Moreover, without talking to the interviewers, it is difficult to know why they made certain changes to the survey questions.

GSR

Combined Usability and Cognitive Testing

No usability issues were found with the GSR web instrument. Respondents navigated the instrument, read the survey instructions and questions, and entered and submitted their responses with no issues. Respondents felt the instructions were clear and took the time to read them before proceeding through the survey questions, and some returned to the instruction screen when questions arose. No major comprehension issues were found with the survey questions, and most respondents indicated they refer to their records when responding to the survey.

Conducting combined usability and cognitive testing allowed NASS to efficiently evaluate both the user experience with the web questionnaire and respondents’ comprehension of the survey questions. Recruitment for this population was challenging and only five interviews were completed. Five interviews are sufficient for usability testing (Geisen and Romano Bergstrom 2017), and cognitive interviewing best practices state that a minimum of five interviews should be completed (Willis 2005) or interviews should be conducted until saturation is reached (U.S. Office of Management and Budget 2016). Given that no new issues were uncovered after five interviews, we felt these interviews provided sufficient information on the performance of the GSR. However, combined testing limits the number of cognitive probes that can be asked, and additional cognitive issues may have been uncovered had more interviews been conducted.

Review of Electronic Submission of Files

The review of the GSR electronic submission procedures revealed that some large businesses prefer to submit data exports from their records rather than complete survey questionnaires. These businesses often report for multiple grain storage facilities across multiple states and would otherwise need to complete a separate questionnaire for each facility. Submitted files vary in format (e.g., PDFs, Excel spreadsheets), content (e.g., different column labels, omission of data), and specificity of data (e.g., unit of measurement). RFO staff hand-edit the data and perform manual data entry, and data processing procedures varied slightly across the RFOs.

The expert review of the GSR electronic submission procedures provided insight into challenges large entities face when responding to the GSR and potential measurement error associated with processing electronic submission of files; however, no direct feedback from respondents was gathered. Interviews with respondents would have been beneficial to better understand the challenges large businesses face when responding to the GSR and to identify ways to ease burden while collecting more standardized data.

DISCUSSION

In 2020, stakeholders raised concerns that stored grain was being double-counted in the Agricultural Survey and GSR. If double-counting was occurring, it was most likely due to misreporting in the Agricultural Survey. Therefore, it would have been easy to limit this evaluation to cognitive testing of the Agricultural Survey. However, given the well-documented advantages of multimethod testing, NASS decided to evaluate both surveys using multiple methods within the limited resources available. This approach uncovered issues that would not have been discovered with cognitive testing alone and ultimately provided a better understanding of potential sources of measurement error in these two surveys.

Cognitive testing of the two surveys revealed that both were performing well: respondents understood that grain stored on-farm was to be reported on the Agricultural Survey and grain stored off-farm on the GSR. Usability testing revealed no issues with the GSR web form. However, the additional methods revealed that error may be present in the other modes. Behavior coding showed that interviewers made major changes to Agricultural Survey questions in CATI, which may result in respondents reporting grain that has been moved off-farm for storage or sale, possibly inflating estimates of grain stored on-farm. The expert review of electronic data submissions revealed that businesses that report for multiple facilities prefer to submit electronic records rather than use the web questionnaire. Submission of nonstandardized data files opens the possibility of measurement and processing errors.

Based on these findings, several recommendations were made to improve data collection: improving the Agricultural Survey CATI instrument to ease interviewer administration; retraining and monitoring interviewers; and developing standardized ways for businesses to report for multiple facilities at one time in the GSR, either by modifying the web instrument or by providing standardized templates for data uploads.

There were a few limitations to this research. Although behavior coding revealed substantial issues with the administration of the CATI survey, without talking to interviewers there is no way to know for certain why major changes were made to the survey questions. Interviewer debriefings could provide much-needed insight into improving the instrument and interviewer training. It is also not possible to know whether interviewer deviations in the Agricultural Survey led to response error; additional research, such as a reinterview study, could help determine this. Finally, it may have been beneficial to conduct more cognitive interviews with GSR respondents to ensure that no major cognitive problems existed and to better understand how the web form could be improved for large operations.

NASS had several resources in place that helped limit the cost of this research. Prior experience with remote testing and the standard practice of recording CATI interviews allowed NASS to easily employ cognitive testing, usability testing, and behavior coding at no additional cost. Organizations that do not have these practices in place may still find multimethod testing cost prohibitive. In these cases, methodologists may want to consider combining multiple lower-cost methodologies, such as expert review and ex-ante methodologies (Maitland and Presser 2018).

In summary, this research provides further evidence of the benefit of using multiple methods and demonstrates that multimethod evaluation can be done on a small scale at lower cost. Multimethod pretesting allowed NASS to produce different but complementary findings and, ultimately, a better understanding of possible sources of measurement error in these two surveys.

Lead author

Heather Ridolfo, Office of Statistical Methods and Research, U.S. Energy Information Administration, (202) 586-6240, Heather.Ridolfo@eia.gov

Disclaimers

The lead author conducted this research while employed for the National Agricultural Statistics Service.

The analysis and conclusions contained in this paper are those of the authors and do not represent the official position of the U.S. Energy Information Administration (EIA) or the U.S. Department of Energy (DOE).

The findings and conclusions in this presentation are those of the authors and should not be construed to represent any official USDA or U.S. government determination or policy.