Introduction

Most U.S.-based surveys assess racial-ethnic identities and are increasingly asked to better capture skin color as an aspect of racialized appearance (Telles 2018). Such survey data can importantly inform how skin color relates to social and health outcomes (Adams, Kurtz-Costes, and Hoffman 2016; Dixon and Telles 2017). Doing so, however, depends on reliable measurement of skin color. The typical approach to skin color measurement in survey data has been interviewer or respondent ratings using categorical skin color scales (e.g., Campbell et al. 2020). The potential for using mechanical instruments to assess skin color has grown as handheld devices have become increasingly affordable and user-friendly (e.g., Gordon et al. 2022). We compared these two strategies for skin color measurement, a) handheld devices and b) rating scales, offering empirical findings and practical guidance for future survey efforts to collect skin color data.

Handheld devices, including colorimeters and spectrophotometers, measure color via light reflectance. Historically, such instruments were used primarily by bench scientists in biology and chemistry fields because they were too expensive and too large and delicate for easy transportation outside of laboratory settings. These instruments are now small and inexpensive enough to be feasible for a wide range of in-person survey contexts. Handheld devices measure consistently across varying lighting conditions, but technical settings, such as the size of the opening (aperture) through which light passes, can affect readings. Survey methodologists need evidence regarding: a) the reliability of new low-cost devices relative to well-established yet larger and more expensive devices, b) where on the body and how to take color readings using these devices, and c) whether and how field staff can be effectively trained to use the devices.

The current study builds on prior research by comparing three devices, examining how consistent their readings are across repeated measures at four locations (forehead, cheek, inner arm, outer arm) and varying technical settings (size of aperture for light transmission; simulated lighting conditions). In prior work (Gordon et al. 2022) we compared two devices at a single location with a single device setting. The new results offer comparison with a more sophisticated and expensive instrument certified to perform at industry standards for reliability and validity (Konica Minolta 2007). The new results also inform survey methodologists about where on the body to take readings with what device settings. Results are also translated into practical guidance, including lessons learned for creating measurement protocols and training staff.

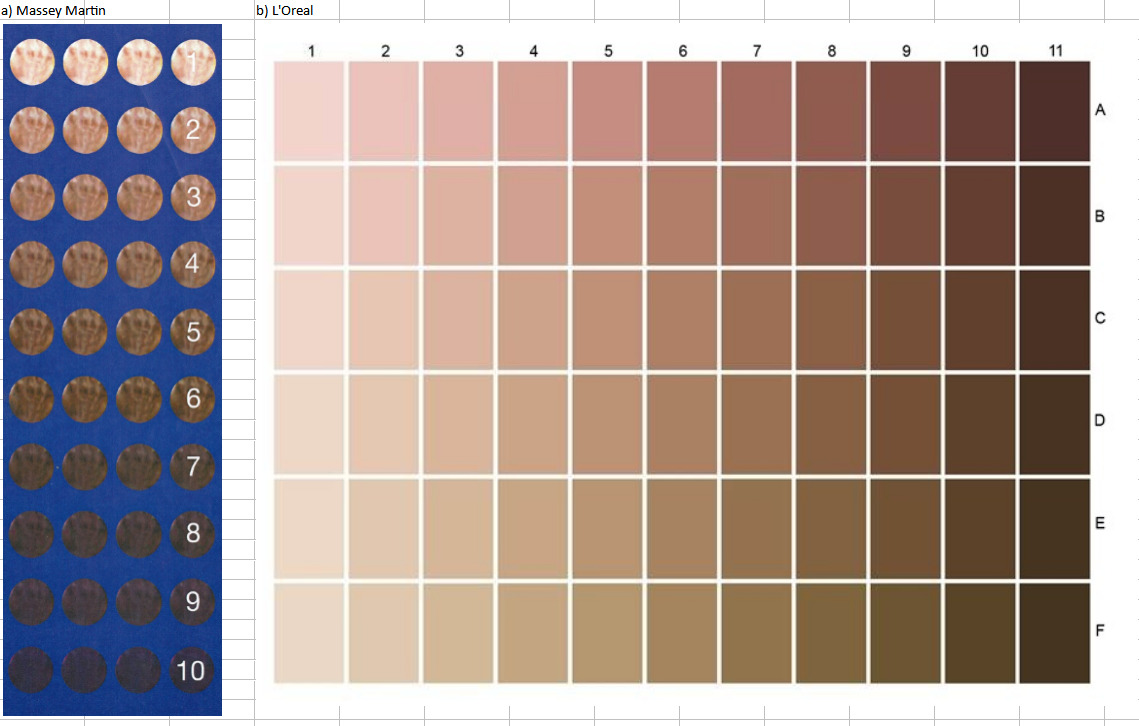

Skin color rating scales build on a long tradition of asking people to select from images (e.g., colored porcelain tiles) or words (e.g., lightest, lighter, darker, darkest). Scales developed based on color science emerged only recently, however. The widely used Massey-Martin scale (Massey and Martin 2003) was developed in the early 2000s for interviewers to rate participants’ skin shade (lightness-darkness) and has been used in many large surveys (see Figure 1). The L’Oreal scale was developed for the cosmetics industry using color science (De Rigal et al. 2007) and has since been used in surveys (e.g., Campbell et al. 2020; Garcia and Abascal 2016; Khan et al. 2023).

The current study builds on prior research by offering evidence regarding how reliably interviewers and participants can choose from the more numerous L’Oreal versus fewer Massey-Martin options. In prior work (Gordon et al. 2022) we compared the Massey-Martin with another scale, the PERLA. The new results are important because the Massey-Martin and the PERLA offer ten or eleven choices arrayed along a single dimension primarily reflecting lightness-to-darkness. In contrast, the L’Oreal offers sixty-six choices arrayed along eleven levels of lightness-to-darkness each within six levels of redness-to-yellowness. Consideration of the L’Oreal choices for skin undertone (redness, yellowness) is important given skin undertone has been less studied than skin darkness. Adding to a recent study examining undertone in photographs (Branigan et al. 2023)[1], we compared in-person human ratings of undertone to handheld device readings of redness and yellowness. The current study also extends prior results by using a specialized room with equalized conditions such as lighting.

Method

Sample. Undergraduate students (n = 102) were recruited through flyers, emails, and class visits by pairs of undergraduate research assistants. Consistent with the university’s designation as an Asian American Native American Pacific Islander and Hispanic Serving Institution, the majority of study participants identified as Asian (54%) and about one-fifth each identified as Latinx (23%) and White (18%); 3% each identified as Black and as Other race-ethnicities (see Table 1). Over two-thirds of participants identified as Cisgender Woman (71%), over one-quarter as Cisgender Man (27%); two participants identified as Transgender Man and Nonbinary. Most participants were ages 18 to 21, with each single year of age in that range accounting for 12% to 30% of the sample. Study team members also represented multiple genders and race-ethnicities, including Black, White, Latino, and Asian.

Procedures. A dedicated room equalized background and lighting conditions for each participant’s ratings. In consultation with a color measurement expert, we selected an interior room (to reduce temperature fluctuation), determined the appropriate number (four) and placement of luminaire lighting fixtures for the room size and shape, and selected grey paint color, furniture, and covering for participants’ clothing. The luminaires simulated outdoors mid-day light during data collection. Participants sat in a chair at a desk across from two interviewers.

Handheld devices are based on color science, which aims to understand and replicate how humans see color. One widely used color space separates three dimensions of darkness-lightness (L*), greenness-redness (a*), and blueness-yellowness (b*). The devices operate by emitting light out of a small opening, placed flush against the area of measurement, and recording the light reflected by the object. Spectrophotometers capture the full light spectrum whereas colorimeters focus on certain wavelengths. The instruments are used in a range of applications from house painting to constructing craniofacial prosthetics.

An advantage of handheld devices for survey methodologists is consistency of measurement across many conditions. For example, a single reading can simulate various illuminants (lighting conditions) from outdoors mid-day (known as D65), to outdoors sunrise/sunset (known as D50), to indoors incandescent (known as A).[2] Formulas translate to various illuminants using recorded values that reference industry standard “black” (no light) and “white” (all wavelengths visible to humans). Technical features can affect readings, however, and each device uses a somewhat different design, often proprietary. Some devices allow for changing technical settings, such as the size of the aperture letting light pass through. Our protocol used two devices’ options to compare aperture size and lighting conditions (see Appendix A).
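As a rough illustration of how a reference "white" enters these formulas, the sketch below converts CIE XYZ tristimulus values to L*a*b* relative to a chosen illuminant, using the standard CIE 1976 equations. It is not the procedure of any device in this study: actual instruments recompute XYZ from the measured reflectance spectrum for each illuminant, and their firmware constants are often proprietary. The function names and the tabulated white points (commonly cited values for the CIE 1931 2-degree observer) are assumptions made for illustration.

```python
# Reference whites (X, Y, Z) with Y normalized to 100.
# Commonly tabulated 2-degree-observer values; a given device may use a
# different observer or slightly different constants (assumption).
WHITE_POINTS = {
    "D65": (95.047, 100.0, 108.883),  # outdoors mid-day
    "D50": (96.422, 100.0, 82.521),   # outdoors sunrise/sunset
    "A": (109.850, 100.0, 35.585),    # indoors incandescent
}


def _f(t):
    """Piecewise cube-root function from the CIE 1976 L*a*b* definition."""
    delta = 6 / 29
    if t > delta ** 3:
        return t ** (1 / 3)
    return t / (3 * delta ** 2) + 4 / 29


def xyz_to_lab(x, y, z, illuminant="D65"):
    """Convert XYZ to (L*, a*, b*) relative to the named illuminant's white."""
    xn, yn, zn = WHITE_POINTS[illuminant]
    fx, fy, fz = _f(x / xn), _f(y / yn), _f(z / zn)
    lightness = 116 * fy - 16   # L*: 0 (black) to 100 (reference white)
    a_star = 500 * (fx - fy)    # a*: greenness (-) to redness (+)
    b_star = 200 * (fy - fz)    # b*: blueness (-) to yellowness (+)
    return lightness, a_star, b_star


# The reference white itself maps to approximately (100, 0, 0).
print(xyz_to_lab(95.047, 100.0, 108.883, "D65"))
```

Because every value is scaled by the reference white, the same surface yields different L*a*b* coordinates under D65, D50, and A, which is one reason the illuminant setting should be reported alongside readings.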

The first of the three devices we used was a spectrophotometer from the commercial company Konica Minolta. The Konica Minolta CM-700d has been widely used for a range of applications yet is expensive and cumbersome to maneuver due to size. At the time of our study, the device cost about $14,000, was just over 8 inches tall, and weighed about 1 pound. We compared two aperture options which could be readily toggled (a larger aperture, labelled MAV; a smaller aperture, labelled SAV). For survey purposes, the device is sturdy with a built-in screen, easy-to-use calibration checks, and computer-connected software to take and export multiple readings at once. Yet, at the time of our study, the device required a wired connection to a computer and had to be plugged into a wall outlet when batteries ran low.

We also considered two less expensive and smaller devices. Nix, like Konica Minolta, is a commercial company. Nix has specialized in small colorimeters intended for everyday use in painting and design (a spectrophotometer is now also available). The Nix device had no built-in screen, but was sturdily encased to resist fall damage and worked wirelessly with a user-friendly smart phone app. The device was inexpensive, small, and light. We used a $349 Nix Pro 2 approximately 2 x 2 inches in size and weighing 1.5 ounces. The device arrived pre-calibrated and reliability-tested but possessed no built-in features for users to run calibration checks.

The Labby spectrophotometer was developed for low-resource contexts with open-source specifications and readily purchased components, including assembly in a 3D printed case. The company built the device used in our study, costing about $1,200. The version of Labby we used lacked a built-in screen, had a single aperture, and had limited pre-programmed readings for a single illuminant. The open-source nature of the device made fully transparent the hardware and calculations used to obtain final outputs but presented a steeper learning curve, greater potential for human error, and less protection from accidental damage.

We took readings in the L*a*b* color space from each device. L* readings can range from 0 to 100, with higher scores indicating lighter skin. For human skin tone, a* and b* values are positive with higher scores indicating darker shades of redness (a*) or yellowness (b*). In our sample, L* (lightness) values ranged from about 25 to 80, averaging around 60 with a standard deviation of about 6 (see Appendix B). The b* (yellowness) values ranged from about 5 to 25, averaging about 16 with a standard deviation of about 3. The a* (redness) values ranged from about 2 to 20 with an average of around 10 and standard deviation of about 2. We had 10 fewer readings from Labby than the other devices due to missing data when we waited for a replacement device. One participant also refused use of the Konica Minolta when informed of the brief flash it would emit during readings. We also excluded one set of outlying L*a*b* readings for three participants for Labby and for one participant for Nix.

The original Massey-Martin (2003) rating scale included 11 images of lighter to darker colored hands, each with visible cuffs. We followed recent studies using a circular portion extracted from 10 images (see again Figure 1). The 66-color L’Oreal palette was created using color science readings taken from the faces of over 1,000 women worldwide (France, United States, Mexico, Brazil, Japan, Korea, China, Thailand, Africa; De Rigal et al. 2007). L’Oréal scientists selected the colors using color science’s definition of the minimum difference that the human eye can detect. The resulting palette includes 11 levels of lightness-darkness and 6 levels of redness-yellowness. Respondents used 8 of the 10 Massey-Martin color swatches, all but the top 2 values (see Appendix B). Respondents used nearly all of the 66 L’Oreal color swatches, with values covering the full range of 1 to 11 for lightness-darkness and all but 1 (reddest) of the 6 levels of redness-yellowness.
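The idea of a minimum detectable difference is usually expressed as a color difference (ΔE) between two points in L*a*b* space. As a minimal sketch, the function below computes the simplest version, the CIE 1976 Euclidean distance. We do not know which difference formula or threshold was used to build the L’Oreal palette, so the function name and the example readings are hypothetical, and no particular detection threshold is assumed.

```python
import math


def delta_e_cie76(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance between two
    (L*, a*, b*) triples."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(lab1, lab2)))


# Hypothetical readings: same yellowness, differing by 3 units in
# lightness and 4 units in redness.
reading_1 = (60.0, 10.0, 16.0)
reading_2 = (63.0, 14.0, 16.0)
print(delta_e_cie76(reading_1, reading_2))  # 5.0
```

A single ΔE value collapses the three dimensions, so it complements rather than replaces the separate L*, a*, and b* comparisons reported here.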

Analyses. We considered absolute agreement of individual scores (i.e., were two readings or two ratings identical in value?) using the intraclass correlation (ICC; Koo and Li 2016). For survey methodologists, absolute agreement is important for studies considering mean differences in skin color. We also presented Pearson correlations (r; i.e., were scores higher on one reading/rating when higher on another?), which are important for studies considering correlations of other variables with skin color. We considered ICCs above .60 as good and above .75 as excellent agreement (Cicchetti 1994; Lance, Butts, and Michels 2006). We used similar guidance for Pearson correlations, which will be equal to or larger than ICCs and also have a shared variation interpretation (r = .75 reflects 56% shared variation).
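To make the distinction between absolute agreement and correlation concrete, the sketch below computes both for two sets of readings. It assumes the two-way, single-measure, absolute-agreement form of the ICC, one of several forms described by Koo and Li (2016); the exact form used for the tables is not restated here. The function names and the L* values are hypothetical.

```python
from math import sqrt


def pearson_r(a, b):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def icc_absolute_agreement(rows):
    """Two-way, single-measure, absolute-agreement ICC.

    rows: one sequence per participant, holding that participant's k scores.
    """
    n, k = len(rows), len(rows[0])
    grand = sum(sum(r) for r in rows) / (n * k)
    row_means = [sum(r) / k for r in rows]
    col_means = [sum(r[j] for r in rows) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for r in rows for v in r)
    ss_err = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)               # between-participant mean square
    ms_cols = ss_cols / (k - 1)               # between-reading mean square
    ms_err = ss_err / ((n - 1) * (k - 1))     # residual mean square
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


# Hypothetical L* readings: the second set is uniformly 3 units lighter.
first = [55.0, 58.0, 60.0, 62.0, 65.0]
second = [v + 3.0 for v in first]

r = pearson_r(first, second)
icc = icc_absolute_agreement(list(zip(first, second)))
print(r, icc)        # r is 1.0; the ICC is lower because of the offset
print(0.75 ** 2)     # 0.5625, i.e., about 56% shared variation
```

In this constructed example, a constant shift between two sets of readings leaves the Pearson correlation at 1 while lowering the ICC, which is why the two statistics answer different questions.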

Findings and Implications for Best Practices

We organized key findings around focal questions of interest to survey methodologists.

How many handheld device readings are needed? Additional readings take time, yet that time may be warranted if test-retest reliability is low and averaging extra readings could thus considerably reduce measurement error.

Our results showed that test-retest reliability was excellent (see Table 2). Konica Minolta edged out the other two devices, with its repeated readings nearly identical, especially for lightness (L*) and yellowness (b*). Nix and Labby showed slightly more variation between their repeated readings, and each had some outlying values.

For practice, our results indicated that one reading would generally be sufficient. Given a second reading took little time, however, two readings could protect against the few instances of outlying readings.

To achieve these results in practice, however, training is recommended. Our staff training supported consistent device use, covering, for example, how much pressure to apply and how to avoid skin features such as veins and freckles (notes available from authors). Some of the difference between repeated readings seen for Nix and Labby may also reflect their technical construction. Using a more recently developed Nix attachment may reduce sensitivity of readings to varying pressure applied by field staff during readings.
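The trade-off between the time cost of extra readings and the gain in reliability can be illustrated with the Spearman-Brown formula from classical test theory. This formula was not part of our analyses and is introduced here as an assumption; it treats repeated readings as parallel measures. The function name and the reliability values are hypothetical.

```python
def spearman_brown(single_reliability, k):
    """Projected reliability of the average of k parallel readings,
    given the reliability of a single reading."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return k * single_reliability / (1 + (k - 1) * single_reliability)


# Hypothetical single-reading reliabilities, projected for 1 to 3 readings.
for rel in (0.90, 0.50):
    projected = [round(spearman_brown(rel, k), 3) for k in (1, 2, 3)]
    print(rel, projected)
```

Under these assumptions, a second reading adds little when single-reading reliability is already high, but a good deal when it is low, consistent with treating the second reading mainly as protection against occasional outliers.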

How do technical settings affect readings? Survey methodologists are faced with many choices for device technical settings, yet the impact of such choices for measuring skin color has not been well documented to date.

Our findings, shown in Table 3, indicate that these choices matter the least for assessments of lightness (L*). Yet, their impact is somewhat greater for assessing undertones of yellowness (b*) and particularly important for redness (a*). Consistently higher redness values of readings taken with a larger, rather than smaller, aperture are illustrated in Figure 2.

In practice, when undertone is focal to a study’s research questions, taking readings with multiple technical settings may be advised. Ensuring that surveys’ documentation and publications clearly report what settings they used would also facilitate comparisons across studies. Encouraging device manufacturers to be transparent about relevant technical details for scientific communities might also counterbalance their proprietary interests for commercial applications.

How much does body location matter? Medical and anthropological uses of spectrophotometry have long recognized the importance of body location, such as sun-exposed (facultative) and sun-protected (constitutive) skin (Neville, Palmieri, and Young 2021). Participants in large scale field surveys may also decline measurements in private body locations.

We documented the importance of body location for skin undertone, in addition to its recognized importance for skin shade. Within face and arm, readings were highly correlated, but differed somewhat in absolute levels, being somewhat lighter (higher L*) on the cheek than forehead and on the inner versus outer arm (Table 4). Readings were also redder and yellower (higher a* and b*) on the outer than inner arm, but more consistent between cheek and forehead. Comparing cheek and inner arm, although lightness (L*) and yellowness (b*) were fairly consistent, redness (a*) was considerably higher on the cheek. Figure 3 illustrates the consistently redder readings on the outer than inner arm.

In practice, survey methodologists would want to carefully consider the substantive goals of a project when choosing body locations. For example, for questions about implicit bias due to colorism, the forehead location might be chosen as the front of the face is generally visible across day-to-day interactions. For a different question, such as an individual’s biochemical vulnerability to seasonal affective disorder, sun-protected skin, such as the inner arm location, might be chosen (e.g., Stewart et al. 2014).

How well do recent handheld devices work relative to well-established yet larger and more expensive devices? Smaller size and lower cost facilitate taking devices into the field when budgets are limited.

Our results showed that although values were highly correlated between readings taken by different devices at the same body location, absolute levels differed (Table 5, Figure 4). Labby tended to produce lighter (L*) and yellower (b*) readings than Konica Minolta. The reverse was true for Nix. Aperture size seemed important, including for the longer wavelengths of redness, as illustrated in Figure 4. The Nix aperture size was closest to Konica Minolta’s larger aperture, where consistency was highest (bottom right, Figure 4).

In practice, substantive goals should inform survey methodologists’ choices. Studies focused on questions correlating skin color with other variables would expect similar results regardless of device choice. Here, smaller and less expensive devices may be sufficient. Results for absolute levels of skin color would be more sensitive to device choice, including aperture size, warranting more research into when and where these differences matter most.

How do rating scale scores relate to handheld device readings? Collecting both rating scale and device readings increases respondent burden and survey cost, making evidence about the relative similarity and difference of their scores important.

Our findings showed that correlations were considerably higher between device readings and human ratings of skin shade (lightness-darkness) than skin undertone (redness, yellowness; Table 6). Single ratings correlated with darkness nearly as highly as three-rating averages. However, these correlations were somewhat lower for participants than interviewers.

For practice, if skin darkness is the focus, our findings suggest that correlational results would be similar if either a handheld device or a rating scale were used. At the same time, for studies aiming to distinguish how humans assess skin color from its color science calculated value, both human ratings and device readings would be needed. These studies could further examine self and other perceptions by having multiple ratings (including from photographs of participants; Khan et al. 2023). Cognitive interviews might also inform why humans are better at choosing swatches that align with color science calculated skin darkness than its redness or yellowness.

Conclusion

Modern technology offers survey methodologists new options for responding to calls to better capture skin color in surveys (Telles 2018). Our findings document the advantages of using handheld devices to reliably assess skin color, supporting substantive questions about how skin shade and skin undertone affect social inequalities in human health and well-being. We offer guidance to survey methodologists for such uses. In some cases, recommendations are clear—e.g., just one or two device readings at any given location; any device can similarly capture the relative darkness of skin. In other cases, recommendations are less certain—e.g., skin undertones of redness and yellowness being sensitive to device choices and body locations. We encourage future studies that pursue why such variability exists and for which substantive questions it matters most.

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. 1921526. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. The authors thank the Skin Tone Identities and Inequalities Project team, including Dahlya El-Adawe and Hai Nguyen. Branigan, Khan, and Nunez are listed in alphabetical order to denote their equal authorship contributions.

Lead author contact information

Rachel A. Gordon, Associate Dean for Research and Administration, College of Health and Human Sciences, Northern Illinois University, 370 Wirtz Drive, DeKalb, IL 60115, rgordon@niu.edu.

[1] Branigan et al. (2023) drew upon prior theory and research to conceptualize the importance of undertone for colorism research. Skin redness and yellowness, for instance, can be perceived as signals of attractiveness and health, although these colors’ momentary fluctuations due to emotions, diet, and sleep may mean that their social signaling is less stable than is skin’s darkness. Color science assessments of skin color commonly use dimensions of darkness-lightness, greenness-redness, and blueness-yellowness. Measured values for skin color fall within the red and yellow ranges of the latter dimensions.

[2] Color science aims to understand and reproduce the ways humans see color (Logvinenko and Levin 2022). One important construct is illumination, the relative intensity of light across the spectrum of wavelengths. How people perceive an object’s color depends on its illumination. Various illuminants have been defined to represent different scenarios, such as those listed in the narrative (i.e., outdoors mid-day, known as D65; outdoors sunrise/sunset, D50; and, indoors incandescent, A).