Introduction

Declining response rates in random-digit dialing (RDD) surveys have given rise to discussions on new modes of data collection (Couper 2011). Some large-scale surveys have moved from RDD samples to address-based samples (ABS) in order to ensure coverage (Shook-Sa et al. 2014) and allow respondents to access surveys by web (McMaster et al. 2017). While ABS offer some advantages, one drawback is that apartment numbers are often not included, resulting in mail delivery to a common mail drop location shared by multiple residents of a single address. Drop point locations are problematic in large cities, where many potential respondents reside in multifamily buildings, and may also be problematic in rural areas where many families share a common delivery point. Multifamily drop points may bias the sample by reducing the chances that persons with such addresses receive invitations to participate (American Association of Public Opinion Research 2016).

Researchers have tried to reach respondents through multiple modes, using either sequential or concurrent research designs (Mauz et al. 2018). Web-based surveys offer quicker and less expensive options to collect data (Campbell, Venn, and Anderson 2018), but response rates are often lower (Cook, Heath, and Thompson 2000; Sauermann and Roach 2013; Manfreda et al. 2008). The selection of a frame may limit the ability to use some modes, because ABS frames do not include accurate telephone numbers for all addresses (Harter et al. 2018; Kali and Cervantes 2016), and RDD frames do not include accurate addresses for all telephone numbers (Biemer and Lyberg 2003). In the past, landline RDD samples were often matched to addresses in order to send advance letters to potential respondents (Biemer and Lyberg 2003) or, in some cases, to push RDD sample respondents to the web. Traditionally, landline telephone numbers could be accurately matched to addresses, and cell phone numbers could not be matched at all. Recently, cell phone match rates have improved but remain low (at approximately 20%). The ability to match addresses to telephone numbers in an RDD sample has declined over time as cell phone numbers have become a larger proportion of samples, while the ability to match telephone numbers to addresses in an ABS is improving. This improvement may offer the potential to use telephone interviews as a mode with an ABS. Likewise, the improvement in matching addresses to cell phone numbers may offer future capacity to use RDD samples for mail or push-to-web modes. Using a questionnaire from a long-standing health survey, this research examines the effectiveness of two samples (ABS and RDD) and three modes (web-based, mail, and telephone) for groups of adults in a single state.

Background

The Behavioral Risk Factor Surveillance System (BRFSS) is a system of health-related telephone surveys administered in each of the states and participating U.S. territories. Traditionally a telephone survey, the BRFSS has used an RDD sample of over 450,000 adults annually (Centers for Disease Control and Prevention 2018). Approximately half of the sample is cell phone and half landline. Most states have matched landline telephone numbers to addresses in order to send advance letters to households with sampled numbers. However, prior to the administration of the 2019 BRFSS, cell phone numbers had not been matched to addresses. The declining response rates of RDD samples have been observed for some time (Link et al. 2008), although the BRFSS has retained relatively high response rates, with a median (among the states) of 45.3% in 2017 (Centers for Disease Control and Prevention 2017). As the proportion of cell phone numbers in the BRFSS has increased, questions have arisen as to the feasibility of matching addresses to cell phone numbers. Similarly, there has been an improvement in the ability to match ABS to telephone numbers (American Association of Public Opinion Research 2016). The BRFSS conducts pilots of potential new methods and data collection protocols. Because a number of questions remain about the effectiveness of both samples and modes, a pilot was designed to test the effectiveness (in terms of cost, practicality, timeliness, and response rate) of multiple modes using both ABS and RDD samples. The pilot was to focus on the following research questions:

- Can telephone numbers and addresses for ABS and for RDD be matched accurately?
- Can ABS and RDD samples be used to divert respondents to a web-based survey?
- Can sequential modes be used as a cost savings methodology?
- Can we improve response rates for ABS drop box locations?

Methods

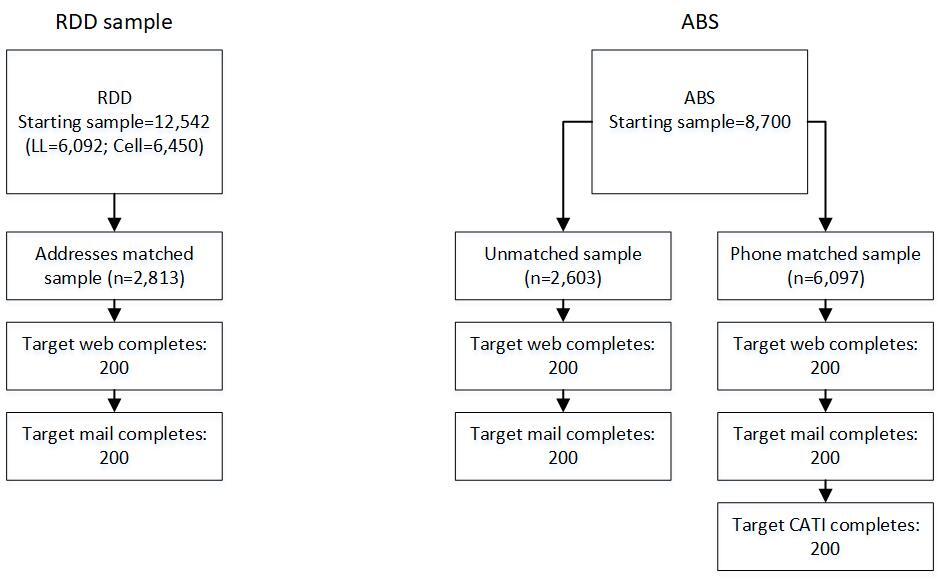

Two samples (one ABS and one RDD) were drawn of adults who reside in the state of New York. New York was chosen because it includes both urban and rural populations and includes a large number of mail drop locations. Both samples underwent address-telephone matching. Originally, a target number of 200 completes was set for each mode/sample using the BRFSS core questionnaire (Centers for Disease Control and Prevention 2018). The ABS respondents were first pushed to web by a mailed invitation, followed by a mailed questionnaire and then telephone interviews for those addresses with matched telephone numbers. The RDD sample, comprised of both landline and cell phone numbers, was invited to participate online by a mailed invitation. A second mode using mailed questionnaires followed. The RDD sample did not include telephone interviews due in part to project budget issues, but also because the effectiveness of calling on the BRFSS RDD sample is known (Centers for Disease Control and Prevention 2017). Calling protocols and response rate calculations followed standard procedures set by the BRFSS. Figure 1 illustrates the method for achieving the targeted number of completes.

An experiment was undertaken to determine whether ABS drop point response rates could be improved if research on specific addresses was undertaken to identify apartment numbers. After the telephone matching was completed, the sample was split between non-drop point addresses and drop point addresses (where multiple housing units share a mail receptacle).

Drop point records were split into two experimental groups. For Group 1, research (e.g., Internet searching of realty websites) was conducted to determine the naming conventions associated with the units at a given address (such as Unit 1, Apt. B, Suite 3, etc.). After these naming conventions were determined, each record was copied to create a new record containing the original drop point street address and unique secondary addresses. For Group 2, a record’s “drop count” variable (i.e., the number of units associated with a given drop point address) was used to determine the number of copies to make of that record.
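The record-copying step for the two groups can be sketched in a few lines of Python. This is only an illustrative sketch, not the procedure actually used in the pilot: the `AddressRecord` class, its field names, and the two helper functions are hypothetical names introduced here, and the example address and unit labels are made up.

```python
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass(frozen=True)
class AddressRecord:
    """Hypothetical sample record for one mailing address."""
    street_address: str
    drop_count: int = 1          # number of units sharing the drop point
    unit: Optional[str] = None   # secondary address, e.g. "Apt. B"


def expand_group1(record: AddressRecord, researched_units: List[str]) -> List[AddressRecord]:
    """Group 1: one copy per researched unit label, keeping the street address."""
    return [replace(record, unit=label) for label in researched_units]


def expand_group2(record: AddressRecord) -> List[AddressRecord]:
    """Group 2: one copy per unit implied by the drop count, with no unit label."""
    return [replace(record) for _ in range(record.drop_count)]


# Made-up example: a drop point serving three units.
example = AddressRecord("12 Main St", drop_count=3)
group1_records = expand_group1(example, ["Unit 1", "Unit 2", "Unit 3"])
group2_records = expand_group2(example)
```

Under this sketch, Group 1 mailings carry a distinct secondary address on each copy, while Group 2 mailings are identical copies of the drop point address.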

Data collection ran from February to May 2018. All mailings and telephone interviews originated from the ICF survey operations center in Martinsville, VA. Costs per complete were calculated using data collection costs only, excluding administrative, programming, and overhead costs.
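As a rough illustration of the cost measure, cost per complete can be read as data collection cost divided by the number of completed questionnaires. The short Python sketch below assumes that simple definition; the function name and the dollar figures in the example are hypothetical and are not taken from the pilot.

```python
def cost_per_complete(data_collection_cost: float, completes: int) -> float:
    """Data collection cost divided by completed questionnaires.

    Administrative, programming, and overhead costs are assumed to be
    excluded from data_collection_cost before calling this function.
    """
    if completes <= 0:
        raise ValueError("at least one complete is needed to compute a cost per complete")
    return data_collection_cost / completes


# Made-up example: $1,500 in mailing costs yielding 30 completes.
example_cost = cost_per_complete(1500.0, 30)
print(f"${example_cost:.2f} per complete")
```

Because the denominator counts completes rather than mailings, a mode with a low unit cost can still show a high cost per complete when few recipients respond.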

Results and Discussion

Of the 12,542 numbers in the RDD sample, only 22.4% matched to addresses. Of those that were matched and responded, 59% verified that the address was correct. Response rates to the web invitation among the RDD sample were very low (2.1%), and the invitation to participate by mail about a month later (see Table 1) improved the response rate only to 8.4%. Table 1 indicates that the mail questionnaires ($51.67) were less expensive per complete than web-based responses ($56.06), because so many mailings pushing respondents to the web were required to obtain each response.

The ABS respondents were provided with three opportunities to participate. As Table 1 illustrates, the initial push to the web resulted in high costs and low response rates. As would be anticipated, response rates were higher when the telephone numbers associated with the addresses were listed and the addresses were not identified as drop point locations. Response rates improved (to 5.6%) when the entire questionnaire was mailed to the addresses, but costs per complete among some portions of the sample (notably those matched to unlisted telephone numbers) increased (to $78.07). In the final mode, respondents were contacted by telephone. Response rates improved again (to 11.7%), and costs for landline completes dropped to $52.57 but rose for cell phone respondents ($92.06). Table 1 includes comparable costs for BRFSS interviews (not part of the pilot) as an illustration of what completed interviews might have cost from the RDD sample. Cell phone interviews are typically more expensive because numbers must be hand dialed and the number of dialings per complete is higher. For the BRFSS, although cooperation rates (measured as proportions of persons who complete the questionnaire after screening) are higher for cell phone than landline (median of 83.5% and 65.0%, respectively), there is a much higher rate of unknown eligibility of dialed numbers for cell phone than for landline (46.7% and 15.6%, respectively; Centers for Disease Control and Prevention 2017).

In the mail survey, respondents were asked whether anyone in their household had the telephone number listed in the sample file. In total, 48% of ABS respondents said that was the correct telephone number (compared to 59% of RDD mail respondents). An additional 7% of ABS respondents reported that the number was correct for another person in their household, compared to 9% of RDD mail respondents. ABS computer-assisted telephone interviewing (CATI) respondents were also asked to confirm the address in the sample file. While the majority of respondents (60%) confirmed their address (indicating a correct telephone and address match in the sample file), 28% of ABS CATI respondents answered that the address in the sample file was incorrect. This indicates that in addition to the 9% of addresses returned as undeliverable, a relatively high percentage of surveys may have been completed by unintended recipients. The targeted number of completes was not achieved in each mode, especially falling short for RDD respondents pushed to the web (n=57).

Of the 8,700 addresses in the ABS, 359 were identified as drop point addresses. Of those, 111 matched to a landline telephone number, and 214 matched to a cell phone number. As the results in Table 1 indicate, the presence of a drop point increased the costs per complete. Of the 359 drop points, 180 were assigned to Group 1 (where unit/apartment numbers were researched and added), and 179 were assigned to Group 2 (where the number of records was inflated to account for the number of units at each address). The results of the drop point experiment (for a portion of the ABS) are presented in Table 2. As this table indicates, the 359 addresses actually represented 833 households. Response outcomes were roughly equal between the two groups, with the exception that a larger number of surveys were returned as incorrect addresses for Group 1. This indicates that the research on naming conventions for each address resulted in some misidentification of unit/apartment numbers.

Conclusions

This project compared costs and response rates across two samples and three modes. There were a number of limitations to this research effort. The telephone mode was removed from the RDD sample due to project budget, and the sample itself was limited to a single state. In addition, due to budgetary constraints, only one reminder was sent for each of the modes. Reminders might have increased response rates for both mail and/or web respondents. Of particular concern was whether addresses and telephone numbers could accurately be cross-matched for each of the samples. Telephone and address matching was higher for the ABS but with less accuracy than for the RDD sample. Although there is some literature to suggest that a move from RDD to ABS would be cost effective, the result was not evident in this experiment. The initial push to web for each of the samples resulted in low response rates for a relatively high cost per complete. There was no evidence that either of the samples performed more efficiently than the other.

The drop point experiment illustrated that the ABS underestimated the number of households at drop point addresses. The 359 drop point addresses represented over 800 households when unit and apartment numbers were researched. The research itself was time-consuming and substantially increased costs per complete. The experiment did not find a positive outcome associated with efforts to supplement drop point addresses.

Although address matching for the RDD cell phone sample was not high, sending advance letters to cell phone numbers may increase response rates. Advance letters have an established history of increasing response rates among landline respondents (Biemer and Lyberg 2003), and there is no reason to suspect that cell phone respondents would behave differently when presented with advance notification of selection. Beginning January 2019, the BRFSS protocols allowed states to opt in to using advance letters on their cell phone samples. The last four digits of the number were provided in the advance letter to account for households with multiple cell phone numbers. Letters also specified that the survey was being conducted among adult residents, and data collectors report respondents calling into the survey centers to report that the number selected was assigned to a minor. This had the effect of cost reduction in that those numbers could be dispositioned as out-of-sample without dialing.

The BRFSS is not subject to one concern of researchers who use RDD samples: In many cases, targeting states and substate areas using cell phone samples results in inefficient data collection and potential bias. Time may be wasted contacting persons who live outside the targeted geographic jurisdiction and who have sampled telephone numbers. Loss of coverage is problematic for persons who have moved into the area and who have out-of-sample area codes. However, for the BRFSS such persons are interviewed, and data are transferred to appropriate locations at the end of each year, thereby adjusting for both undercoverage and calling out of sample. Despite this advantage to the data collection system used in this case, there was little evidence to support a change from RDD to ABS, as response rates for telephone interview respondents were higher in the ABS, and the use of telephone interviews with an ABS would be limited by the percentage of correctly matched telephone numbers. Costs were not lower for the ABS respondents.